AWS unveils agentic AI for faster ML ops and models

If AI news is landing faster than your coffee kicks in, you’re not imagining it. Today’s AWS roundup is a real fast lane: new AI agents in SageMaker Unified Studio, multimodal reasoning models you can deploy, and agent tools that make cloud apps actually do stuff.

Translation: less yak shaving, more shipping.

You’ve seen the hype. This is the “we can push it by Friday” part: one‑click agent onboarding, notebooks wired into your workflow, models that reason over text and images, and autonomy with guardrails. If you’ve been stuck in POC purgatory, this is your exit ramp.

And because it’s AWS, it plugs into your data, your IAM, your VPC. You keep security and scale, and finally get real speed.

Quick vibe check: this isn’t sci‑fi; it’s the boring but powerful plumbing teams need—standard tools, managed endpoints, and knobs for governance. Less “let’s glue seven SaaS tools together,” more “we can build the workflow we want,” without starting from scratch each time.

Think of it like moving from chatty copilots to helpful coworkers. They read your docs, follow your rules, call your APIs, and log every move. If your org cares about compliance and repeatability, this is when the cool demo grows up into a real app.

TL;DR

- New: one‑click AI agents and notebooks in SageMaker Unified Studio

- Multimodal reasoning models in Bedrock for richer, grounded outputs

- Agentic AI upgrades: more autonomous, context-aware cloud apps

- Faster ML ops: fewer handoffs, quicker experiments to production

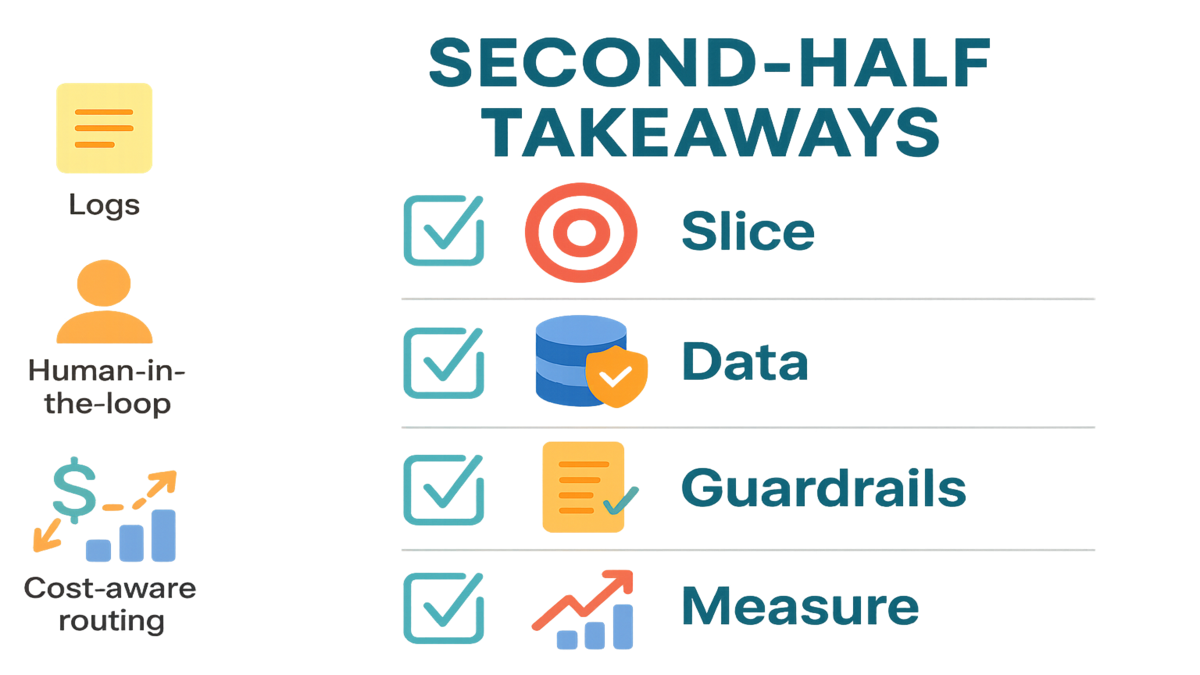

- Start small: pick a thin slice, wire data, ship safely

What actually dropped

One‑click agents in Studio

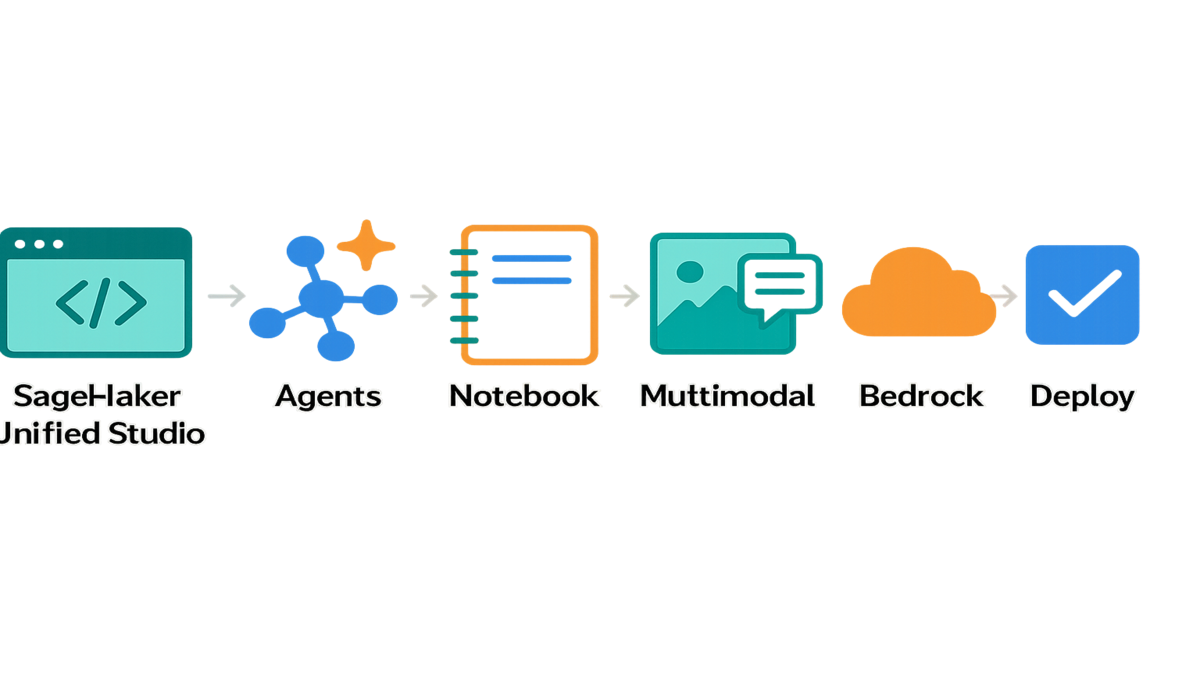

AWS pushed new AI agents inside Amazon SageMaker Unified Studio with one‑click onboarding and notebooks baked in. That means you can spin up an agent, connect to your data, iterate in a managed notebook, and test end‑to‑end without hopping tools.

Why this matters: less setup, fewer permission rabbit holes, and a faster loop from idea to deployment. You’re not babysitting infrastructure; you’re iterating on behavior, prompts, and tools.

Expert note: AWS describes SageMaker as a place to “prepare data, build, train, and deploy machine learning models” in one environment (Amazon SageMaker Studio docs). The Unified Studio update centers that same promise around agent workflows—dev-friendly, production-minded.

Here’s what that looks like in practice:

- Spin up an agent workspace, which pre‑wires IAM roles and policies so you’re not chasing permissions for every test.

- Attach a managed notebook to tinker with prompts, tool definitions, and evaluation checks right next to your agent config.

- Use built‑in connectors and SDKs to talk to internal APIs or data sources without duct‑taping credentials into your code.

- Run an end‑to‑end trial, capture logs, tweak, repeat. When it’s solid, promote to a governed environment.

Result: the “first working loop” moves from weeks of coordination to hours of focused iteration—in your account, under your guardrails.

Multimodal reasoning models in reach

The roundup also adds multimodal reasoning to the stack—models that understand text plus images (and can ground responses with tools). On Amazon Bedrock, you get a menu of top-tier providers with managed endpoints, so you can test, swap, and scale without juggling bespoke infra.

As AWS puts it, Bedrock is “a fully managed service that offers a choice of high-performing foundation models” and tooling to build generative apps (Amazon Bedrock docs). Multimodal reasoning keeps your outputs contextual—and useful.

Why you care:

- Users don’t send perfect text prompts; they share screenshots, scans, PDFs, and photos. Multimodal models meet them where they are.

- Grounded answers: pair text+image understanding with retrieval so the output can cite relevant docs—great for audits.

- Optionality: Bedrock’s model menu lets you trade off latency, cost, and capability per use case, not per platform decision.
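To make the multimodal piece concrete, here is a minimal sketch of an image-plus-text request, assuming the Bedrock Runtime Converse API through boto3 (`boto3.client("bedrock-runtime").converse`). The model ID is a placeholder: pick one from the Bedrock model catalog that accepts image input in your Region. The client is passed in so the message-building logic can be tested without AWS credentials.

```python
from typing import Any

SUPPORTED_IMAGE_FORMATS = {"png", "jpeg", "gif", "webp"}


def build_multimodal_messages(question: str, image_bytes: bytes, image_format: str = "png") -> list[dict[str, Any]]:
    """Build one Converse-style user turn that pairs an image with a text question."""
    if image_format not in SUPPORTED_IMAGE_FORMATS:
        raise ValueError(f"unsupported image format: {image_format}")
    return [
        {
            "role": "user",
            "content": [
                {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                {"text": question},
            ],
        }
    ]


def ask_about_image(client: Any, model_id: str, question: str, image_bytes: bytes, image_format: str = "png") -> str:
    """Send one image plus a question and return the model's text reply.

    `client` is expected to be boto3.client("bedrock-runtime"); it is injected
    so tests can substitute a stub.
    """
    response = client.converse(
        modelId=model_id,
        messages=build_multimodal_messages(question, image_bytes, image_format),
        inferenceConfig={"maxTokens": 512, "temperature": 0.0},
    )
    # Converse returns the assistant turn under output.message.content.
    return response["output"]["message"]["content"][0]["text"]
```

Usage, with a hypothetical scanned invoice: `ask_about_image(boto3.client("bedrock-runtime"), model_id, "What is the invoice total?", Path("invoice.png").read_bytes())`. Because Converse uses one message format across supported models, trying a different provider is mostly a change to `model_id`.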

Agentic tooling for real autonomy

The agent enhancements unlock context-aware, multi-step flows that call APIs, query knowledge bases, and log decisions. In AWS’s own words, Agents for Amazon Bedrock help you “build generative AI applications that take actions” (AWS News Blog). That’s the shift from chat to choreography: workflows that move tickets, reconcile systems, and close the loop.

Under the hood, this typically involves:

- Defining tools/APIs (often via schemas) that the agent can call

- Routing steps based on the conversation state and retrieved knowledge

- Guardrails that validate inputs/outputs and restrict which tools the agent can use

- Rich logging so every decision and action is traceable later
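Bedrock Agents describe tools in action groups (OpenAPI schemas or function definitions), and Guardrails for Amazon Bedrock handle content filtering. The framework-agnostic sketch below only illustrates three of the ideas above in plain Python: a per-tool parameter check (a tiny stand-in for a real schema), an allowlist restricting which tools the agent may call, and one structured log line per call. `Tool` and `ToolRegistry` are hypothetical names, not an AWS API.

```python
import json
import logging
from dataclasses import dataclass, field
from typing import Any, Callable

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("agent.audit")


@dataclass
class Tool:
    name: str
    required_params: dict[str, type]  # tiny stand-in for a real JSON/OpenAPI schema
    fn: Callable[..., Any]


@dataclass
class ToolRegistry:
    allowed: set[str]  # guardrail: the only tools this agent may call
    tools: dict[str, Tool] = field(default_factory=dict)

    def register(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def call(self, name: str, args: dict[str, Any]) -> Any:
        # Deny anything outside the allowlist, even if the tool is registered.
        if name not in self.allowed or name not in self.tools:
            audit_log.warning(json.dumps({"event": "tool_denied", "tool": name}))
            raise PermissionError(f"tool not allowed: {name}")
        tool = self.tools[name]
        # Validate inputs before any side effect happens.
        for param, expected in tool.required_params.items():
            if not isinstance(args.get(param), expected):
                raise ValueError(f"{name}: '{param}' must be {expected.__name__}")
        result = tool.fn(**args)
        # One structured line per call so every action is traceable later.
        audit_log.info(json.dumps({"event": "tool_call", "tool": name, "args": args}, default=str))
        return result
```

In a real deployment those JSON lines would land in CloudWatch Logs, and sensitive arguments would be redacted before logging.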

The net: fewer swivel-chair moments, more work done where your data already lives.

Why this matters now

Cost of slow ML ops

Slow ML ops kills momentum. Every extra approval, environment mismatch, and handoff adds friction. The new SageMaker agents plus notebooks cut that down. You get faster prototyping in a secure, governed stack—so you can actually put models in users’ hands.

Real talk: the only metric that matters early is the time from “idea” to “first end‑to‑end test.” Unified Studio and one‑click agent onboarding chop that clock.

Zooming out: speed compounds when the stack is consistent. Fewer bespoke environments means less drift, fewer “works on my laptop” surprises, and faster rollbacks when something misbehaves.

Multimodal ups your ceiling

Most users don’t think in text only. They paste screenshots, forms, PDFs, photos. Multimodal reasoning lets you parse and act on all of it. Think: claims intake from images, quality checks from photos, invoice parsing from scans, and grounded answers that cite sources.

Quote to remember: Bedrock offers “a choice of high-performing foundation models” (Amazon Bedrock docs), so you can pick the one that fits your latency, price, and capability needs instead of treating every problem as a nail for one hammer. That de-risks your early bets.

Bonus: as providers improve models, you can switch with less code churn. Keep your business logic steady; swap the model when the cost/latency curve gets better.
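One way to keep business logic steady while models change is to move the model choice into configuration. A minimal sketch, assuming the Converse API request shape; `MODEL_CONFIG` and the model IDs in it are hypothetical placeholders, not recommendations.

```python
from typing import Any

# Hypothetical per-use-case settings; the model IDs are placeholders, not recommendations.
MODEL_CONFIG: dict[str, dict[str, Any]] = {
    "triage": {"model_id": "example.fast-model-v1", "max_tokens": 256},
    "summary": {"model_id": "example.large-model-v2", "max_tokens": 1024},
}


def build_converse_request(use_case: str, prompt: str, config: dict[str, dict[str, Any]] = MODEL_CONFIG) -> dict[str, Any]:
    """Return keyword arguments for bedrock-runtime's converse call.

    The calling code never names a model; swapping one is a config change.
    """
    settings = config[use_case]
    return {
        "modelId": settings["model_id"],
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": settings["max_tokens"]},
    }
```

The call site, `client.converse(**build_converse_request("triage", email_text))`, stays identical when the config entry is pointed at a cheaper or faster model.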

Agentic means fewer swivel chairs

Agentic AI shifts from answers to actions: look up a record, enrich it, validate a rule, call a service, update a ticket. Less copy‑paste, fewer browser tabs, and more completed tasks.

Example scenario: a support agent has an AI sidekick that summarizes a customer issue, checks recent orders, tests eligibility, drafts a response, and updates Salesforce—while logging steps. Same people, more finished work.

Another: finance ops reviews an invoice image, matches it to a PO, flags exceptions, and proposes journal entries. Humans approve; the agent posts and documents the trail.

Wire it into your stack

Step 1: Pick a thin slice

Forget moonshots. Choose a small, painful, measurable workflow—like triaging emails, summarizing PDFs, or updating a case. Define “done” as something that ships in a week.

Pro move: write a user story with an acceptance test. If the agent can pass it end‑to‑end, you’re allowed to scale.

Helpful template:

- As a [role], I want [task] so I can [outcome].

- Given [input], when [action], then [observable result with metric].

- Non‑negotiables: latency target, approval points, and audit requirements.
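The template translates directly into an executable check. Here is a minimal sketch for a hypothetical email-triage story: the `triage` callable stands in for your agent, and the `billing` queue, sample email, and 2-second latency target are illustrative values, not defaults from any AWS service.

```python
import time
from typing import Any, Callable

# Hypothetical story: "As a support lead, I want incoming emails routed to the right
# queue so I can answer faster." Values below are illustrative, not AWS defaults.
LATENCY_TARGET_S = 2.0


def acceptance_test(triage: Callable[[str], dict[str, Any]]) -> bool:
    # Given: a customer email asking for a refund
    email = "Hi, I was charged twice for order 1042. Can I get a refund?"
    # When: the agent triages it
    start = time.perf_counter()
    result = triage(email)
    elapsed = time.perf_counter() - start
    # Then: correct queue, within the latency target, with a reason for the audit trail
    assert result.get("queue") == "billing", f"wrong queue: {result.get('queue')}"
    assert elapsed <= LATENCY_TARGET_S, f"too slow: {elapsed:.2f}s"
    assert result.get("reason"), "missing audit reason"
    return True
```

Point `triage` at a stub while wiring things up, then at the real agent once it exists; the test itself does not change.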

Step 2: Bring your data

Point the agent at the minimal data it needs. Use AWS identity and VPC controls so nothing leaks. If you’re on Bedrock, layer in Knowledge Bases to ground answers and reduce hallucinations.
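A minimal grounding sketch, assuming a Knowledge Base already exists and is queried through boto3's `bedrock-agent-runtime` client with `retrieve_and_generate`. The knowledge base ID and model ARN are placeholders, and the citation fields read here (`citations`, `retrievedReferences`, `s3Location`) reflect the response shape as we understand it; confirm them against the current API reference before relying on them.

```python
from typing import Any


def build_kb_request(question: str, knowledge_base_id: str, model_arn: str) -> dict[str, Any]:
    """Keyword arguments for bedrock-agent-runtime's retrieve_and_generate call."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def grounded_answer(client: Any, question: str, knowledge_base_id: str, model_arn: str) -> tuple[str, list[str]]:
    """Return the generated answer plus the source URIs it was grounded on."""
    response = client.retrieve_and_generate(**build_kb_request(question, knowledge_base_id, model_arn))
    # Each citation lists the retrieved chunks that support part of the answer.
    sources = [
        ref.get("location", {}).get("s3Location", {}).get("uri", "unknown-source")
        for citation in response.get("citations", [])
        for ref in citation.get("retrievedReferences", [])
    ]
    return response["output"]["text"], sources
```

Returning the sources alongside the answer is what lets the UI show citations and lets an auditor trace a reply back to a document.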

Quote worth noting: Agents on Bedrock are designed to “take actions,” which includes calling your APIs and tools with guardrails (AWS News Blog). That’s where real ROI lives—inside your systems, not just in a chat box.

Security checklist:

- Use scoped IAM roles and least privilege for any tool calls

- Keep traffic private with VPC endpoints where available

- Encrypt data at rest and in transit; segment PII

- Log everything to CloudWatch and keep trails in CloudTrail for audits
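For the first item, a least-privilege policy can limit model invocation to an approved list. A minimal sketch that generates the policy document in Python; the Region and model IDs are placeholders, and the ARN pattern covers on-demand foundation models only (inference profiles and custom models use different resource ARNs).

```python
import json


def least_privilege_invoke_policy(region: str, model_ids: list[str]) -> dict:
    """IAM policy document that allows invoking only the listed foundation models.

    Converse is authorized through bedrock:InvokeModel, and ConverseStream through
    bedrock:InvokeModelWithResponseStream, so these two actions cover both APIs.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModelsOnly",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                # Foundation-model ARNs have an empty account field.
                "Resource": [f"arn:aws:bedrock:{region}::foundation-model/{model_id}" for model_id in model_ids],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder model ID; replace with the models your workflow actually uses.
    print(json.dumps(least_privilege_invoke_policy("us-east-1", ["example.model-id-v1"]), indent=2))
```

Attach the generated document to the agent's execution role rather than to human users, so the role can call approved models and nothing else.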

Step 3: Iterate in notebooks

Use the Unified Studio notebook to test prompts, tools, and evaluation checks fast. Once behavior stabilizes, codify guardrails: input validation, output schemas, and explicit tool permissions. Move from play to prod with change control, not vibes.

Hardening steps:

- Freeze prompts and tool schemas behind version control

- Add output parsing and schema validation

- Define safe defaults and clear error messages

- Bake in red teaming prompts for edge cases before launch
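Output parsing and safe defaults can be sketched together. The example assumes a hypothetical triage agent prompted to return JSON with `category`, `priority`, and `summary`; anything that fails parsing or validation falls back to a safe default flagged for human review instead of flowing downstream.

```python
import json
from typing import Any

# Hypothetical output contract for a ticket-triage agent.
REQUIRED_FIELDS: dict[str, type] = {"category": str, "priority": int, "summary": str}
ALLOWED_CATEGORIES = {"billing", "shipping", "technical", "other"}
SAFE_DEFAULT: dict[str, Any] = {"category": "other", "priority": 3, "summary": "", "needs_review": True}


def parse_agent_output(raw: str) -> dict[str, Any]:
    """Parse and validate model output; fall back to a safe default that forces review."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return {**SAFE_DEFAULT, "error": "output was not valid JSON"}
    if not isinstance(data, dict):
        return {**SAFE_DEFAULT, "error": "output was not a JSON object"}
    # Type checks, with a clear message naming the first bad field.
    for name, expected in REQUIRED_FIELDS.items():
        if not isinstance(data.get(name), expected):
            return {**SAFE_DEFAULT, "error": f"field '{name}' missing or not {expected.__name__}"}
    # Value checks: known categories and a 1-5 priority scale.
    if data["category"] not in ALLOWED_CATEGORIES or not 1 <= data["priority"] <= 5:
        return {**SAFE_DEFAULT, "error": "value out of range"}
    return {**data, "needs_review": False}
```

A schema library such as `jsonschema` or Pydantic would replace the hand-rolled checks in production; the standard library keeps the sketch dependency-free.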

Step 4: Measure product impact

Track time saved, error rates, and deflection. If your thin slice hits goals, expand. If not, adjust the tool stack or user interface before you scale. Agents are features, not magic—treat them that way.


Metrics that matter:

- Cycle time: idea to first end‑to‑end

- User impact: tasks completed per hour, deflection rate, SLA hits

- Quality: factuality vs. a gold set, exception rate, rework

- Cost: model spend per task, downstream savings
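These metrics fall out of ordinary per-task logs. A minimal sketch, assuming a hypothetical `TaskRecord` with the fields shown; cost per completed task divides all model spend, including failed runs, by the number of tasks that actually finished.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaskRecord:
    completed: bool
    needed_rework: bool
    matched_gold: Optional[bool]  # None when the task has no gold-set answer
    model_cost_usd: float
    minutes_spent: float


def summarize(records: list[TaskRecord]) -> dict[str, float]:
    done = [r for r in records if r.completed]
    graded = [r for r in records if r.matched_gold is not None]
    hours = sum(r.minutes_spent for r in records) / 60
    total_cost = sum(r.model_cost_usd for r in records)  # failed runs still cost money
    return {
        "tasks_per_hour": len(done) / hours if hours else 0.0,
        "factuality_vs_gold": sum(r.matched_gold for r in graded) / len(graded) if graded else float("nan"),
        "rework_rate": sum(r.needed_rework for r in done) / len(done) if done else 0.0,
        "cost_per_completed_task": total_cost / len(done) if done else float("inf"),
    }
```

Reporting cost per completed task (rather than per call or per token) keeps a cheap model that fails often from looking better than it is.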

What this unlocks next

New patterns worth exploring

- Retrieval + multimodal: ground answers with docs and images, then cite sources.

- Workflow decomposition: chain smaller agents that each own a clear step.

- Human-in-the-loop: the agent proposes; your expert approves at the right moment.

- Cost-aware routing: pick models dynamically by task complexity and SLA.

Tactical example: a claims intake flow that reads an image of a form, extracts data, checks policy eligibility via API, drafts a decision, and routes exceptions to humans—with citations for every step.
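The claims flow can be sketched as a short pipeline with explicit exits to a human queue. Each step is injected as a callable, so `extract`, `check_eligibility`, and `draft` are hypothetical stand-ins for a multimodal model call, your policy API, and a text model call; the 0.8 confidence threshold is an illustrative value.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class ClaimResult:
    status: str  # "auto_drafted" (still awaiting sign-off) or "needs_human"
    decision: Optional[str] = None
    citations: list[str] = field(default_factory=list)
    reasons: list[str] = field(default_factory=list)


def run_claims_intake(
    form_image: bytes,
    extract: Callable[[bytes], dict[str, Any]],
    check_eligibility: Callable[[dict[str, Any]], dict[str, Any]],
    draft: Callable[[dict[str, Any], dict[str, Any]], str],
    min_confidence: float = 0.8,
) -> ClaimResult:
    # Step 1: read the form image into structured fields.
    extracted = extract(form_image)
    confidence = extracted.get("confidence", 0.0)
    citations = [f"form image extraction (confidence {confidence:.2f})"]
    if confidence < min_confidence:
        return ClaimResult("needs_human", citations=citations, reasons=["low extraction confidence"])
    # Step 2: check policy eligibility through your own API.
    eligibility = check_eligibility(extracted)
    citations.append(f"policy check: {eligibility.get('source', 'unknown')}")
    if not eligibility.get("eligible", False):
        return ClaimResult("needs_human", citations=citations, reasons=[eligibility.get("reason", "ineligible")])
    # Step 3: draft a decision; a human still approves it before anything is posted.
    return ClaimResult("auto_drafted", decision=draft(extracted, eligibility), citations=citations)
```

Every exit carries its citations, so the human reviewing an exception sees which step raised it and what evidence the agent had at that point.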

Traps that slow teams down

- POC graveyard: shipping demos with no plan for data, IAM, or observability.

- Tool sprawl: every team picks a different model and stack; nobody standardizes.

- No evals: you can’t improve what you don’t measure—add golden datasets early.

- Over-automation: don’t let agents act without auditable logs and rollbacks.

A simple antidote: standardize on a small set of models and evaluation metrics in Bedrock, publish a “thin slice” playbook, and require an audit log before any write-back to systems of record.
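Here is one way to enforce the audit-log rule in code, as a minimal sketch: every write-back needs a named approver, and the attempt is recorded before the write happens. `AuditLog` and `guarded_write` are hypothetical names; in practice the entries would go to a durable store such as CloudWatch Logs rather than an in-memory list.

```python
import json
import time
from typing import Any, Callable, Optional


class AuditLog:
    """Append-only, in-memory stand-in for a durable audit store."""

    def __init__(self) -> None:
        self.entries: list[str] = []

    def record(self, **event: Any) -> None:
        self.entries.append(json.dumps({"ts": time.time(), **event}, sort_keys=True, default=str))


def guarded_write(
    audit: AuditLog,
    action: str,
    payload: dict[str, Any],
    approved_by: Optional[str],
    write: Callable[[dict[str, Any]], Any],
) -> Any:
    """Refuse to touch a system of record without an approver; log before and after."""
    if not approved_by:
        audit.record(action=action, outcome="blocked", reason="no approver")
        raise PermissionError(f"{action} requires human approval")
    audit.record(action=action, outcome="attempt", approved_by=approved_by, payload=payload)
    result = write(payload)
    audit.record(action=action, outcome="done", approved_by=approved_by)
    return result
```

Logging the attempt before the write means a crash mid-write still leaves a trail, which is what makes rollbacks tractable.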

A final lens

Bedrock’s managed models plus SageMaker’s unified workflow give you optionality without chaos. Start narrow, add safety, measure impact, and keep swapping components as new models and features roll out. That’s how you ride the wave without faceplanting.

Quick pulse check before you scale

- Your thin slice is small, painful, and measurable.

- The agent only sees the data it needs—inside your VPC and IAM guardrails.

- You tested prompts/tools in a SageMaker notebook with eval checks.

- You log actions, ground outputs, and require approval where it counts.

- You track time saved and error rates; expansion is tied to results.

FAQs: your sharp questions, answered

What’s the headline from today’s AI announcements?

AWS introduced new AI agents with one‑click onboarding and notebook support in Amazon SageMaker Unified Studio, integrated multimodal reasoning models on Bedrock, and enhanced agentic tooling for more autonomous, context-aware cloud apps.

How is this different from a typical chatbot?

Chatbots answer. Agents act. With Bedrock agents, you can define tools/APIs, ground responses with your data, and orchestrate multi‑step tasks with audit trails—so the system completes workflows, not just conversations.

Do I have to pick one model provider?

No. Bedrock is model‑agnostic. You can select from multiple providers and switch as needs change (capability, latency, cost). That reduces lock‑in and lets you ride model improvements without rewriting everything.

How do I prevent hallucinations?

Ground responses with vetted sources (e.g., Knowledge Bases on Bedrock), constrain tools, validate outputs against schemas, and add human approval for high‑risk steps. Keep your prompts tight and measure with golden datasets.

What about security and compliance?

You run inside AWS accounts with IAM, VPC, and logging. Use scoped roles, least‑privilege tool access, and encryption at rest/in transit. Keep PII handling explicit, and attach change control to agent behaviors before production.

How fast can we get value?

Pick a thin slice and aim for a one‑week end‑to‑end pilot. With one‑click agent onboarding and notebooks in Unified Studio, you can design, test, and demo a production‑shaped workflow quickly—then harden it.

Do I need a data science team?

No. You need a product owner, an engineer who can define tools/APIs, and someone to set acceptance tests. The managed services hide a lot of ML plumbing so you can focus on the workflow.

How do I keep costs in check?

Start with small payloads, cache retrieval results, and route tasks to cheaper models when possible. If you need consistent throughput, consider provisioned capacity options where supported. Always measure cost per completed task, not per token.
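Caching retrieval results is the simplest of these to sketch. The example uses `functools.lru_cache` with light query normalization; `_retrieve_from_backend` is a hypothetical placeholder for a real knowledge base query. The cache is per-process and never expires, so a shared cache with a TTL would be needed once documents change or you run several workers.

```python
from functools import lru_cache

CALLS = {"retrieve": 0}  # counts backend hits so the cache effect is visible


def _retrieve_from_backend(query: str) -> tuple[str, ...]:
    """Hypothetical placeholder for a real retrieval call (e.g. a knowledge base query)."""
    CALLS["retrieve"] += 1
    return (f"chunk about {query}",)


@lru_cache(maxsize=1024)
def cached_retrieve(normalized_query: str) -> tuple[str, ...]:
    # Tuples are immutable, so callers cannot corrupt a cached result.
    return _retrieve_from_backend(normalized_query)


def retrieve(query: str) -> tuple[str, ...]:
    # Normalize case and whitespace so trivially different phrasings share an entry.
    return cached_retrieve(" ".join(query.lower().split()))
```

`cached_retrieve.cache_info()` reports hits and misses, which is a quick way to check whether caching is paying off before investing in a shared cache.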

How should I handle PII and sensitive data?

Mask sensitive fields, segregate datasets, and enforce least privilege on tool calls. Keep private traffic in your VPC where supported and use field‑level logging controls. Only store what you must, and keep audit trails tight.
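Masking can happen before text reaches logs or prompts. A minimal regex-based sketch; the patterns are illustrative and will miss plenty (names, addresses, national IDs), so treat it as a last line of defense behind a proper detector, such as Amazon Comprehend's PII detection or the sensitive-information filters in Guardrails for Amazon Bedrock, not as a replacement for one.

```python
import re

# Illustrative patterns only. Order matters: card numbers are masked before the
# looser phone pattern gets a chance to swallow them.
PII_PATTERNS = {
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask_pii(text: str) -> str:
    """Replace each match with a bracketed label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

Applying `mask_pii` at the logging boundary keeps audit trails useful (you can still see that a phone number was present) without storing the value itself.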

What if latency matters

Split your flow: a fast model for triage, a deeper model for tricky cases. Precompute retrieval chunks, keep context windows small, and push heavy post‑processing to asynchronous steps when users don’t need it instantly.
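The triage-then-escalate split can be sketched in a few lines. Both models are injected callables, and the `confidence` score is an assumption: the sketch does not say how you obtain it (a self-rating prompt, a verifier model, or a classifier are common options), only how to route on it.

```python
from typing import Callable, NamedTuple


class Answer(NamedTuple):
    text: str
    confidence: float  # however your stack estimates it; an assumption in this sketch


def answer_with_escalation(
    question: str,
    fast_model: Callable[[str], Answer],
    deep_model: Callable[[str], Answer],
    threshold: float = 0.7,
) -> tuple[Answer, str]:
    """Try the fast model first; escalate to the deeper model only when confidence is low."""
    first = fast_model(question)
    if first.confidence >= threshold:
        return first, "fast"
    return deep_model(question), "deep"
```

Returning which tier answered makes it easy to log the escalation rate, which is the number that tells you whether the threshold is tuned sensibly for latency and cost.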

Ship your first AWS agent

- Pick one workflow with clear success criteria and an owner.

- In SageMaker Unified Studio, create an agent and attach a notebook.

- Connect minimal data and tools; enforce IAM and VPC isolation.

- Evaluate with a small golden set; add grounding and output schemas.

- Log actions, require approvals where needed, then pilot with 5 users.

- Track time saved and error rates; iterate, then expand the slice.
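If your agent is deployed through Agents for Amazon Bedrock, a pilot app can call it with boto3's `bedrock-agent-runtime` client. A minimal sketch, assuming an agent ID and alias ID you already created (both placeholders here); the reply arrives as an event stream of chunks that the function joins into one string.

```python
import uuid
from typing import Any, Optional


def ask_agent(
    client: Any, agent_id: str, agent_alias_id: str, text: str, session_id: Optional[str] = None
) -> tuple[str, str]:
    """Send one turn to a Bedrock agent and join the streamed reply.

    `client` is expected to be boto3.client("bedrock-agent-runtime").
    """
    session_id = session_id or str(uuid.uuid4())
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        sessionId=session_id,
        inputText=text,
    )
    reply = []
    # The completion is an event stream; only "chunk" events carry answer text.
    for event in response["completion"]:
        if "chunk" in event:
            reply.append(event["chunk"]["bytes"].decode("utf-8"))
    return "".join(reply), session_id
```

Reuse the returned `session_id` across turns so the agent keeps conversation context; passing `enableTrace=True` to `invoke_agent` adds trace events you can log for audits.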

Here’s the bottom line: if you’ve been waiting for a practical on‑ramp, this is it. The best generative AI announcements aren’t the flashiest—they lower setup time, reduce risk, and shorten your idea‑to‑impact loop. Today’s AWS updates tilt the game your way: unified agent workflows, multimodal reasoning that plugs into your stack, and agentic primitives built for production. Start small, measure honestly, and go where the data says. The compounding comes from shipping.


References

- Amazon SageMaker Studio overview

- Amazon Bedrock service page

- Agents for Amazon Bedrock (AWS News Blog)

- Agents for Amazon Bedrock documentation

- Amazon Bedrock model providers

- Knowledge Bases for Amazon Bedrock

- Guardrails for Amazon Bedrock

- Use Amazon Bedrock with Amazon VPC

- Monitor Amazon Bedrock with Amazon CloudWatch

- Data protection in Amazon Bedrock

- Amazon Bedrock model evaluation

- AWS IAM best practices

“The fastest team doesn’t chase every new model; it ships thin slices weekly and swaps models when the data says so.”