Cut Latency, Costs: AWS Updates You Need Now

You’re shipping features fast, but the stack’s creaking in bad spots. Cold starts, flaky mobile links, and dev envs that die when a laptop shuts. Meanwhile, your CFO wants lower costs and more resilience. Fun combo, right?

Here’s the good news: AWS just handed you a cheat code. Load balance straight to Lambda. Health-check your serverless like an adult. Ship in Rust for speed. Run HTTP/3 over QUIC for faster mobile. And give iOS/macOS folks real cloud Macs with pixel-perfect remoting.

If you’re thinking, “Okay, what extra context do I need to decide smart?” you’re in the right place. You’ll get the why, examples, and a checklist you can paste into runbooks and into the “additional information” slots of your exec decks. Let’s get you faster, cheaper, and harder to break.

We’ll turn shiny features into real moves. How ALB pairs with Lambda, where Rust and modern Java fit, and how QUIC helps folks on a train. No fluff. Just steps, watch-outs, and templates you can copy.

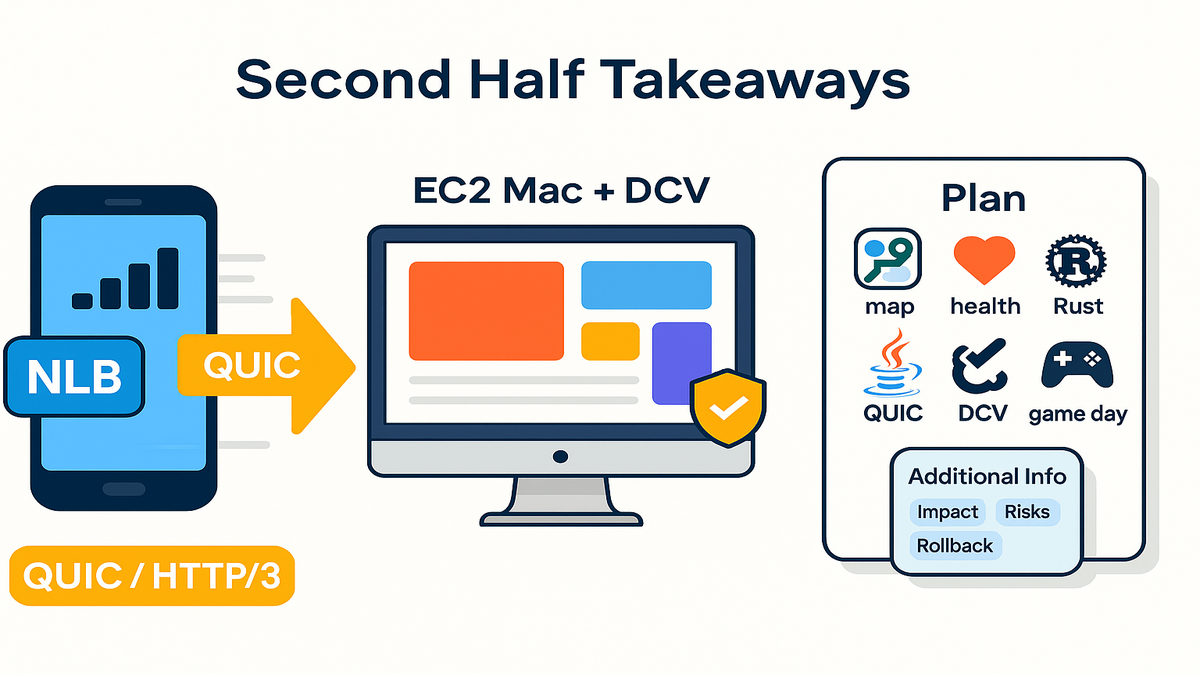

By the end, you’ll have a 9-step plan for two sprints. A clean failover playbook. And a tiny template to sell this to finance and security without a 40-slide deck. Sharp, simple, and ready to ship.

TL;DR

- Register AWS Lambda as targets behind an ALB to handle HTTP/S at scale without EC2.

- Turn on Lambda health checks and use Route 53 failover to dodge downtime.

- Build performance-first with the Rust runtime and modern Java support.

- Use QUIC/HTTP/3 on NLB paths for lower latency on mobile and streaming.

- Stream macOS desktops with Amazon DCV on EC2 Mac for real Apple workflows.

ALB targets Lambda

What changed

You can now put Lambda functions in an ALB target group. You handle HTTP/S traffic without spinning up EC2 or containers. Set function versions, aliases, and timeouts to control requests. ALB health checks work with Lambda too. So you can plug into Amazon Route 53 failover for high availability.

You also keep normal ALB features. Listeners, rules, auth actions, and WAF still apply. The request hits your function with method, path, headers, query string, and body. Your function returns a status code, headers, and a body. It feels like writing a tiny web server, without running servers.
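To make the request/response contract concrete, here is a minimal sketch of a Python handler behind an ALB target group. It assumes multi-value headers are disabled on the target group (the default), so the event carries a single headers map. The summary fields it returns are illustrative, not a required format.

```python
import base64
import json


def header(headers, name):
    """Case-insensitive header lookup; returns None when the header is absent."""
    for key, value in (headers or {}).items():
        if key.lower() == name.lower():
            return value
    return None


def handler(event, context=None):
    """Minimal ALB-target Lambda handler: reads the request fields, returns JSON."""
    raw = event.get("body") or ""
    # ALB base64-encodes bodies it treats as binary and sets isBase64Encoded.
    body = base64.b64decode(raw) if event.get("isBase64Encoded") else raw.encode("utf-8")

    summary = {
        "method": event.get("httpMethod"),
        "path": event.get("path"),
        "query": event.get("queryStringParameters") or {},
        "contentType": header(event.get("headers"), "content-type"),
        "bodyBytes": len(body),
    }
    # Response shape the ALB expects back from a Lambda target.
    return {
        "statusCode": 200,
        "statusDescription": "200 OK",
        "isBase64Encoded": False,
        "headers": {"content-type": "application/json"},
        "body": json.dumps(summary),
    }
```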

Why it matters

This fuses serverless with battle-tested L7 load balancing. You keep ALB goodies like path routing, WAF, and auth. You ditch server hosting. For bursty HTTP traffic (webhooks, APIs, and mobile backends), it’s a cost and ops win. You also get predictable behavior: ALB turns each request into a JSON event with a simple request context inside Lambda.

It also simplifies your entry points. Instead of a mix of EC2, containers, and Lambda, centralize HTTP/S entry on ALB. Apply WAF once. Route to function aliases for blue/green. That’s less surface for security and fewer moving parts for on-call.

Worked example

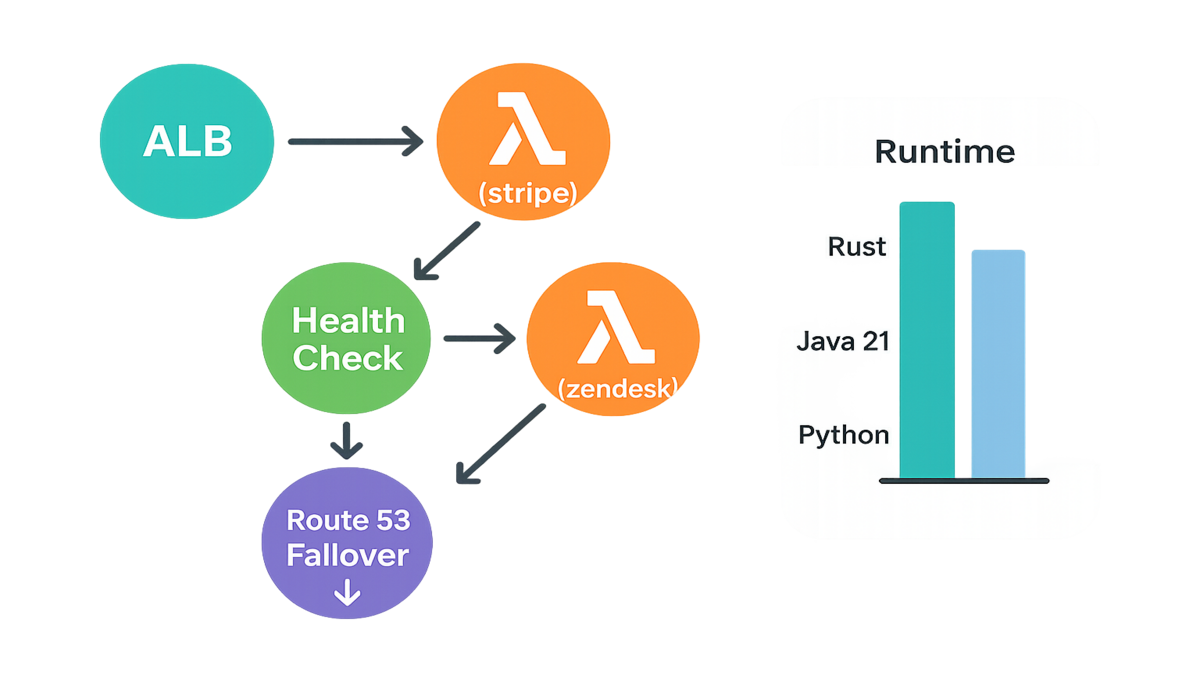

Say you ingest webhooks from 20 SaaS vendors. Skip a fleet of autoscaling instances. Put an ALB in front. Route /stripe to one Lambda alias and /zendesk to another. Use function aliases for phased rollouts. Set a 15-second timeout for low-latency hooks and 60 seconds for heavy transforms. If a function misbehaves, health checks mark it out. Route 53 then fails over to a standby region.
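One way to read “keep ALB and Lambda timeouts aligned” is to check that every function’s timeout sits below the load balancer’s idle timeout. The sketch below assumes the ALB default idle timeout of 60 seconds; the route patterns, alias names, and the 5-second headroom are hypothetical values for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    path_pattern: str    # ALB listener rule path condition (hypothetical)
    function_alias: str  # alias registered in that rule's target group (hypothetical)
    timeout_s: int       # Lambda function timeout for this alias


ALB_IDLE_TIMEOUT_S = 60  # ALB default idle timeout; change if you raised it


def misaligned(routes, idle_timeout_s=ALB_IDLE_TIMEOUT_S, headroom_s=5):
    """Routes whose Lambda timeout leaves less than headroom_s below the ALB idle timeout."""
    return [r for r in routes if r.timeout_s > idle_timeout_s - headroom_s]


ROUTES = [
    Route("/stripe*", "webhooks-stripe:live", 15),
    Route("/zendesk*", "webhooks-zendesk:live", 15),
    Route("/transform*", "transform-heavy:live", 60),
]

for r in misaligned(ROUTES):
    print(f"{r.path_pattern}: {r.timeout_s}s timeout vs {ALB_IDLE_TIMEOUT_S}s ALB idle timeout")
```

With the defaults, the 60-second transform route is flagged, which is a hint to raise the ALB idle timeout or shorten that function’s timeout.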

Go further. Ship JSON logs from each Lambda with vendor name and request ID. Push ALB access logs to S3. Wire CloudWatch alarms to unhealthy target counts. When Stripe changes a field at 2 a.m., you find it fast. Flip traffic to a safe alias while you patch.
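A minimal sketch of the JSON log line described above, assuming a hypothetical convention where the first path segment names the vendor. It reuses the X-Amzn-Trace-Id header that ALB adds to forwarded requests as a correlation ID; inside Lambda, you would pass context.aws_request_id as the request ID.

```python
import json


def vendor_from_path(path):
    """Hypothetical convention: the first path segment names the vendor (/stripe/... -> stripe)."""
    first = path.strip("/").split("/", 1)[0]
    return first or "unknown"


def log_event(event, request_id, **fields):
    """Print one JSON log line; Lambda ships stdout to CloudWatch Logs."""
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    record = {
        "vendor": vendor_from_path(event.get("path") or "/"),
        "requestId": request_id,                    # e.g. context.aws_request_id
        "traceId": headers.get("x-amzn-trace-id"),  # added by ALB to forwarded requests
        **fields,
    }
    line = json.dumps(record, sort_keys=True)
    print(line)
    return line
```

One JSON object per line keeps the fields queryable in CloudWatch Logs Insights without extra parsing.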

Operational guardrails to copy

- Set per-route listener rules and per-function timeouts. Keep ALB and Lambda timeouts aligned.

- Use function aliases for safe rollouts. Shift a small percentage before going all in.

- Add WAF on the ALB for basics like SQLi and XSS rules, plus rate limits.

- Keep requests idempotent. If a webhook retries, your function should handle it.

- Use least-privilege IAM for Lambda. Avoid broad wildcards when you can.

- Log ALB access and Lambda execution logs. Tag everything with correlation IDs.
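The idempotency guardrail can be sketched as “record the event ID before acting, skip it if already seen.” The in-memory SeenStore below is a hypothetical stand-in; across Lambda instances you would need a durable store with conditional writes. The sketch assumes the vendor payload carries an "id" field and falls back to a hash of the raw body otherwise.

```python
import hashlib
import json


class SeenStore:
    """In-memory stand-in for a durable store that supports conditional writes."""

    def __init__(self):
        self._seen = set()

    def put_if_absent(self, key):
        # True only the first time a key is stored.
        if key in self._seen:
            return False
        self._seen.add(key)
        return True

    def discard(self, key):
        self._seen.discard(key)


def idempotency_key(vendor, body):
    event = json.loads(body)
    # Prefer the vendor's own event ID; fall back to a hash of the raw body.
    event_id = event.get("id") or hashlib.sha256(body.encode("utf-8")).hexdigest()
    return f"{vendor}:{event_id}"


def process_webhook(vendor, body, store, apply_side_effects):
    key = idempotency_key(vendor, body)
    if not store.put_if_absent(key):
        return {"statusCode": 200, "body": "duplicate ignored"}
    try:
        apply_side_effects(json.loads(body))
    except Exception:
        store.discard(key)  # forget the key so the vendor's retry can try again
        raise
    return {"statusCode": 200, "body": "processed"}
```

Returning 200 for duplicates tells the vendor to stop retrying; clearing the key on failure keeps failed deliveries retryable.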

Cost and tradeoffs

- ALB gives flexible routing and WAF. That’s great for multi-tenant APIs and webhooks.

- API Gateway may still win for deep API features such as quotas, usage plans, and request validation. Mix and match if needed.

- For static files, keep S3 plus CloudFront. Don’t turn Lambda into a file server.


Rust and modern Java

The performance play

Serverless pricing rewards two things: fast starts and low memory. Rust delivers both. Rust functions compile to small native binaries that run on Lambda’s OS-only runtime through the lambda_runtime crate, with steady performance. For Java teams, current LTS support such as Java 21, plus better tooling, helps you tune memory. You reduce cold starts and keep developer speed.

Why it matters

- Rust: predictable latency, fewer runtime deps, smaller memory, and lower cost. Great for latency-sensitive endpoints, streaming transforms, and compute-heavy logic.

- Java: keep your ecosystem and frameworks. Upgrade to a current LTS. Trim reflection, warm critical paths in init, and right-size memory to avoid garbage-collection thrash.

Worked example

You have an image-processing microservice. The Python version is clean but struggles during spikes. Rewrite the hot loop in Rust, or the whole function. For CPU-bound work like this, compiled code typically cuts execution time and memory use, which steadies p95 latency; measure to confirm. For your Java API, upgrade to the latest LTS. Move startup work to init, cache config, and keep handler code lean. You’ll feel it in latency and the monthly bill.

Tuning checklist you can steal

- Lambda allocates CPU in proportion to memory. Use the smallest memory setting that still meets your p95 latency goal, and raise it only until CPU-bound work keeps up.

- Put heavy SDK clients and connection pools outside the handler. Reuse them across invocations.

- For Java, keep reflection low and prefer AOT-friendly libraries when possible.

- Consider Provisioned Concurrency for paths that must stay warm 24/7. Scope it tightly.

- Where supported, try Lambda SnapStart for Java to snapshot init state.

- Test on arm64 Graviton for better price-performance. For most runtimes it’s a quick config flip; for Rust, also rebuild the binary for aarch64.
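The “heavy clients outside the handler” item is language-agnostic; here is a minimal Python sketch of the pattern. The _build_client function and the SERVICE_ENDPOINT and APP_CONFIG variables are hypothetical stand-ins for an SDK client and its configuration.

```python
import json
import os
import time

INIT_COUNT = 0


def _build_client():
    """Hypothetical expensive setup standing in for an SDK client or connection pool."""
    global INIT_COUNT
    INIT_COUNT += 1
    time.sleep(0.01)  # simulate slow construction
    return {"endpoint": os.environ.get("SERVICE_ENDPOINT", "https://example.invalid")}


# Module scope runs once per execution environment (during init), not per request.
CLIENT = _build_client()
CONFIG = json.loads(os.environ.get("APP_CONFIG", '{"max_items": 100}'))


def handler(event, context=None):
    # Warm invocations reuse CLIENT and CONFIG instead of rebuilding them.
    return {"endpoint": CLIENT["endpoint"], "max_items": CONFIG["max_items"]}
```

The same idea applies to Rust (build clients before starting the runtime loop) and Java (static or constructor init), which is also what SnapStart snapshots.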

Benchmark plan in one afternoon

- Pick one function with clear SLOs. Create a baseline using the current runtime.

- Build a Rust or tuned Java version. Same inputs and same outputs.

- Run a load test with cold starts and bursty traffic, plus steady-state.

- Track duration, memory used, p95 and p99 latency, and error rates.

- Pick the winner by latency and cost. Not just average time.
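To score the benchmark, you can compute tail percentiles and GB-seconds per variant, drop any variant that misses the p95 SLO, and pick the cheapest survivor. This sketch uses billed function duration as a stand-in for latency and compares GB-seconds rather than dollars; remember that the per-GB-second price differs between x86 and arm64.

```python
import math
from dataclasses import dataclass


def percentile(samples, p):
    """Nearest-rank percentile (p in 0..100) of a list of durations."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


@dataclass
class Variant:
    name: str
    memory_mb: int
    durations_ms: list  # billed duration per invocation from the load test

    def gb_seconds_per_million(self):
        avg_s = sum(self.durations_ms) / len(self.durations_ms) / 1000
        return self.memory_mb / 1024 * avg_s * 1_000_000


def pick_winner(variants, p95_slo_ms):
    # Keep variants that meet the p95 SLO, then choose the lowest GB-seconds.
    eligible = [v for v in variants if percentile(v.durations_ms, 95) <= p95_slo_ms]
    if not eligible:
        return None
    return min(eligible, key=lambda v: v.gb_seconds_per_million())
```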

Links to ship faster

- Rust on AWS Lambda

- AWS Lambda supported runtimes for Java

- What’s New: AWS Lambda supports Java 21 LTS

QUIC on NLB

The protocol upgrade

QUIC, the transport under HTTP/3, runs over UDP. It reduces connection setup to 1-RTT and supports 0-RTT resumption. It also has better loss recovery than TCP. AWS Network Load Balancer supports UDP. You can front QUIC-based services and improve performance on lossy, mobile, or long routes.
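The handshake savings are simple arithmetic. This idealized sketch counts round trips before the first request can be sent (TCP: 1, TLS 1.3: 1, TLS 1.2: 2, QUIC: 1 combined, QUIC 0-RTT: 0) and adds one round trip for the request itself. It ignores packet loss, DNS, and server processing beyond a fixed term.

```python
# Round trips before the client can send its first HTTP request (no loss).
SETUP_RTTS = {
    "tcp+tls1.2": 3,  # TCP handshake (1) + full TLS 1.2 handshake (2)
    "tcp+tls1.3": 2,  # TCP handshake (1) + full TLS 1.3 handshake (1)
    "quic": 1,        # combined transport + TLS 1.3 handshake
    "quic-0rtt": 0,   # resumed session: request rides in the first flight
}


def time_to_first_byte_ms(rtt_ms, transport, server_ms=0.0):
    """Idealized TTFB: setup round trips plus one round trip for request/response."""
    return (SETUP_RTTS[transport] + 1) * rtt_ms + server_ms
```

At a 100 ms mobile RTT, that is 300 ms for TCP with TLS 1.3, 200 ms for a fresh QUIC connection, and 100 ms with 0-RTT resumption.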

QUIC is “a UDP-based, stream-multiplexed, secure transport protocol.” — IETF RFC 9000

Why it matters

Mobile and streaming users live with flaky networks. They switch between cell towers, Wi‑Fi, and 5G. TCP resets and TLS handshakes add pain. QUIC bakes in TLS 1.3, connection migration, and smarter congestion control. You get quicker starts, fewer drops, and better throughput under jitter.

Worked example

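Imagine a real-time sports app. Your update feed and live comments ride over HTTP/3 and QUIC. Put an NLB in front of your UDP listeners. When users walk from the subway to street-level 5G, QUIC can keep sessions alive through connection migration instead of tearing down and re-handshaking. Result: updates feel instant, not laggy. One caveat: a standard NLB UDP listener picks targets with a flow hash over protocol, source and destination IPs, and ports, so a migrated client can land on a different backend. Verify that migrated connections reach the right target before you count on it.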

Rollout without breaking things

- Run dual-stack. Keep HTTP/2 over TCP and add HTTP/3 over QUIC. Let clients upgrade.

- Terminate QUIC at your service tier like Envoy, NGINX, or app servers with HTTP/3. NLB is UDP pass-through.

- Monitor both paths. Track latency, error rates, and upgrade rates to HTTP/3.

- Educate client teams. Mobile apps may need updated SDKs or gRPC and HTTP libraries.
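Clients usually learn that HTTP/3 is available from an Alt-Svc response header sent over the existing TCP connection. A minimal sketch, assuming QUIC listens on UDP 443 on the same host; in practice your proxy (Envoy, NGINX) would typically set this header for you.

```python
def alt_svc_h3(port=443, max_age_s=86400):
    """Alt-Svc value advertising HTTP/3 on the same host (RFC 7838 syntax)."""
    return f'h3=":{port}"; ma={max_age_s}'


def add_h3_advert(response_headers, udp_enabled):
    # Only advertise HTTP/3 when the UDP/QUIC path is actually live.
    headers = dict(response_headers)
    if udp_enabled:
        headers["alt-svc"] = alt_svc_h3()
    return headers
```

Gating the header on udp_enabled gives you a kill switch: stop advertising and clients drift back to HTTP/2 as cached entries expire.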

Security notes

- QUIC uses TLS 1.3 under the hood. Keep certs and cipher suites modern.

- 0-RTT resumption is fast but can be replayed in some cases. Gate sensitive writes.

- UDP gets blocked in some corporate networks. Keep a clean HTTP/2 fallback.
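One way to gate sensitive writes from 0-RTT is the RFC 8470 convention: the TLS terminator marks requests received as early data with “Early-Data: 1”, and the app answers non-safe methods with 425 Too Early so the client retries after the handshake completes. This sketch assumes your proxy sets that header.

```python
SAFE_METHODS = {"GET", "HEAD", "OPTIONS"}


def early_data_gate(method, headers):
    """Return a 425 response for a non-safe request sent as early data, else None."""
    lowered = {k.lower(): v for k, v in headers.items()}
    if lowered.get("early-data") == "1" and method.upper() not in SAFE_METHODS:
        # 425 Too Early: the client should retry once the handshake completes.
        return {"statusCode": 425, "body": "Too Early"}
    return None
```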

Ops runbook starters

- NLB targets: autoscale the backend fleet and keep health checks strict.

- Observability: capture server-side HTTP/3 metrics like handshakes, loss, and RTT. Add VPC Flow Logs for UDP.

- SLOs: pick user metrics like time-to-first-update and stall counts. Watch them during rollout.


DCV on EC2 Mac

Real Apple workflows at cloud scale

You can run macOS on EC2 Mac instances and stream the desktop with Amazon DCV. That means Xcode, Swift, iOS Simulator, and asset pipelines. All on elastic hardware with low-latency streaming and pixel-perfect rendering.

Why it matters

Distributed teams and heavy pipelines make laptops feel quaint. Compliance too. DCV’s remote protocol handles 2D UIs, high DPI, and GPU acceleration when supported. Your iOS team gets persistent, reproducible environments. Security sleeps better with centralized access, audit logs, and VPC-bound networking.

Worked example

Your mobile org standardizes on EC2 Mac for nightly builds and review envs. Designers connect to a known-good macOS image via DCV. They validate animations in the iOS Simulator and ship assets to S3. Developers launch Mac instances for tests, then tear them down, keeping in mind that each Mac Dedicated Host has a 24-hour minimum allocation. No more queues for the big laptop.

Pilot this in a week

- Create a golden macOS image with Xcode, Homebrew, and your standard tools.

- Install and configure the DCV server. Validate audio, video, and clipboard.

- Put instances in a private subnet. Reach them via a bastion or SSM.

- Map IAM roles to S3 buckets for artifacts. Set up build caches.

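- Define a simple schedule for host capacity, not just instance uptime. EC2 Mac instances run on Dedicated Hosts with a 24-hour minimum allocation, and stopping an instance does not stop host billing. Stop instances outside work hours if you like, but release idle hosts to actually cut cost.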
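Because host billing drives EC2 Mac cost, a scheduler mostly needs to answer “which hosts can I release?” A minimal sketch under that assumption: a host qualifies once it is past the 24-hour minimum allocation and has no running instance. The MacHost records and IDs are hypothetical; you might populate them from the EC2 DescribeHosts API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

MIN_ALLOCATION = timedelta(hours=24)  # EC2 Mac Dedicated Host minimum allocation


@dataclass
class MacHost:
    host_id: str              # hypothetical IDs for illustration
    allocated_at: datetime    # timezone-aware allocation time
    has_running_instance: bool


def releasable(hosts, now):
    """Idle hosts past the 24-hour minimum; releasing them stops host billing."""
    return [
        h.host_id
        for h in hosts
        if not h.has_running_instance and now - h.allocated_at >= MIN_ALLOCATION
    ]
```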

Security and governance tips

- Lock access to your VPC. Use security groups and per-session audit logs.

- Prefer SSM Session Manager for admin actions. Avoid inbound SSH.

- Use separate images for prod signing vs. dev and test. Reduce risk.

- Encrypt volumes and keep snapshots of clean images for fast recovery.

What to measure

- Time-to-first-frame on DCV, input latency, and dropped frames.

- Build time reductions versus local laptops.

- Environment drift should be zero. Time to replace a bad machine should be minutes.


Additional information context

Why this section exists

Stakeholders don’t buy tech. They buy outcomes. The fastest way to win buy-in is to add sharp context. Treat this like the Additional Information box on an application: short, surgical, and outcome-focused. If someone asks for an example, you’ve got one ready.

Reusable template you can paste

- Summary: We’re adopting ALB→Lambda to remove idle EC2. Health checks plus Route 53 failover will cut downtime.

- Impact: Target a 30–50% cost drop on webhook ingestion. p95 stabilizes under 300ms.

- Risks: Cold starts under burst. Mitigate with provisioned concurrency on hot paths.

- Dependencies: NLB UDP for QUIC endpoints. DCV for EC2 Mac developer workflows.

- Rollout: Blue/green via Lambda aliases. Region failover tested every quarter.

Examples across orgs

- Engineering: QUIC rides over UDP via NLB. Safer handoffs on flaky mobile networks.

- FinOps: Moving to Rust reduces memory size. That lowers Lambda GB-seconds cost.

- ERP integration (for example, Oracle Fusion): If your ERP layer sits on Oracle Fusion, document how ALB→Lambda adapters normalize headers and auth before calling Fusion APIs.

- Think of the additional information section on a college application: short, specific, and relevant. Don’t write a novel. Give reviewers what they need to say yes.

Make it skimmable

- Lead with one sentence. Bold the result you promise.

- Add three bullets: impact, risk, rollback.

- Link to a one-pager with diagrams for anyone who wants more.

Quick pulse check

- ALB can route directly to Lambda with function aliases, timeouts, and health checks.

- Pair health checks with Route 53 failover to keep traffic up during incidents.

- Rust runtime and modern Java support give you faster starts and lower memory.

- QUIC over UDP via NLB improves latency and reliability for mobile and streaming.

- Amazon DCV on EC2 Mac unlocks real macOS dev at cloud scale.

This is a strong backbone. Resilient entry points, fast runtimes, modern transport, and reliable dev desktops. You’ll feel it in incident response, build speed, and user experience.

9-step plan

1) Map candidates for ALB→Lambda, like webhooks and lightweight APIs.

2) Create Lambda target groups and listeners. Set sane timeouts by route.

3) Enable health checks. Wire Route 53 failover between regions.

4) Pick one Lambda to port to Rust. Benchmark memory and cold starts.

5) Upgrade Java functions to the latest supported LTS. Trim init and deps.

6) Identify a mobile or streaming path. Front it with NLB UDP for QUIC.

7) Stand up EC2 Mac plus DCV for a pilot iOS workflow. Capture metrics.

8) Document an additional information section with impact, risks, and rollback.

9) Run a game day: failover, cold-start bursts, QUIC migration, and DCV login.

Implementation notes

- (1) List current endpoints. Tag by spiky versus steady traffic, latency needs, and dependencies.

- (2) Create an ALB listener rule per route. Use Lambda aliases in target groups to roll forward and back instantly.

- (3) Set health check success codes and thresholds. Add Route 53 health checks. Test full failover in staging.

- (4) Start small in Rust. Replace only the hot path. Measure p95s before and after.

- (5) On Java, move heavy init to static init, reduce classpath, and test SnapStart where supported.

- (6) For QUIC, add UDP on NLB and keep HTTP/2 fallback. Roll out to 10% first.

- (7) Build a golden EC2 Mac AMI. Document who can access DCV and how to request a seat.

- (8) Keep your additional information under 150 words. Add a rollback line.

- (9) Simulate bad days. Kill a region, spike cold starts, and drop to HTTP/2 when UDP is blocked. Take notes and fix gaps.
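For note (2), the gradual shift can happen inside the alias itself, so the ALB target group never changes. This sketch builds keyword arguments for the Lambda UpdateAlias API with weighted routing; the function name, alias, and version numbers in the usage line are hypothetical.

```python
def shift_traffic(function_name, alias, stable_version, canary_version, weight):
    """UpdateAlias kwargs that send `weight` (0..1) of invocations to the canary version."""
    if not 0.0 < weight < 1.0:
        raise ValueError("weight must be strictly between 0 and 1; use promote() for 100%")
    return {
        "FunctionName": function_name,
        "Name": alias,
        "FunctionVersion": stable_version,
        "RoutingConfig": {"AdditionalVersionWeights": {canary_version: weight}},
    }


def promote(function_name, alias, version):
    """Point the alias fully at one version and clear weighted routing."""
    return {
        "FunctionName": function_name,
        "Name": alias,
        "FunctionVersion": version,
        "RoutingConfig": {"AdditionalVersionWeights": {}},
    }


def rollback(function_name, alias, stable_version):
    # Rolling back is just promoting the stable version again.
    return promote(function_name, alias, stable_version)
```

Usage with boto3 might look like boto3.client("lambda").update_alias(**shift_traffic("webhooks-stripe", "live", "7", "8", 0.05)), then larger weights, then promote once metrics hold.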

FAQ

ALB triggering Lambda

Yes. Create a Lambda target group, register your function version or alias, and attach rules to an ALB listener. The ALB invokes Lambda with the full request context. See: https://docs.aws.amazon.com/elasticloadbalancing/latest/application/lambda-functions.html

Lambda health checks

Configure success codes and thresholds per target group. If the function fails health checks, ALB marks it unhealthy and stops routing traffic. Combine with Amazon Route 53 failover so DNS shifts to a healthy region automatically. Docs: https://docs.aws.amazon.com/Route53/latest/DeveloperGuide/dns-failover.html
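ALB health checks for Lambda are real invocations of your function, so keep the health path cheap. A minimal sketch, assuming a hypothetical /health path configured on the target group and a placeholder dependencies_ok check you would replace with your own.

```python
import json

HEALTH_PATH = "/health"  # hypothetical; must match the target group's health check path


def dependencies_ok():
    # Placeholder: swap in cheap checks (config loaded, critical downstream reachable).
    return True


def _response(status, description, payload):
    return {
        "statusCode": status,
        "statusDescription": description,
        "isBase64Encoded": False,
        "headers": {"content-type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event, context=None):
    if event.get("httpMethod") == "GET" and event.get("path") == HEALTH_PATH:
        healthy = dependencies_ok()
        if healthy:
            return _response(200, "200 OK", {"healthy": True})
        return _response(503, "503 Service Unavailable", {"healthy": False})
    return _response(404, "404 Not Found", {"error": "no route"})
```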

Timeouts and payloads

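ALB has one idle timeout per load balancer, so per-route timeouts come from the Lambda timeout on the function each rule targets. Keep those Lambda timeouts below the ALB idle timeout. For Lambda targets, the request body and the response JSON are each limited to 1 MB. For large uploads, use pre-signed S3 URLs and pass metadata through Lambda.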
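Binary responses are base64-encoded inside the response JSON, which inflates them by about a third, so a file well under 1 MB can still exceed the limit. This sketch checks the serialized size and falls back to a 303 redirect; it treats the limit conservatively as 1,000,000 bytes, and presign_url is a caller-supplied function (for example, one wrapping boto3’s generate_presigned_url).

```python
import base64
import json

RESPONSE_LIMIT_BYTES = 1_000_000  # assumption: conservative reading of the 1 MB limit


def binary_response(data, content_type, presign_url):
    """Return bytes inline if the serialized response fits, else redirect to S3."""
    inline = {
        "statusCode": 200,
        "statusDescription": "200 OK",
        "isBase64Encoded": True,
        "headers": {"content-type": content_type},
        "body": base64.b64encode(data).decode("ascii"),
    }
    if len(json.dumps(inline).encode("utf-8")) <= RESPONSE_LIMIT_BYTES:
        return inline
    # Too large for an ALB Lambda response: point the client at S3 instead.
    return {
        "statusCode": 303,
        "statusDescription": "303 See Other",
        "isBase64Encoded": False,
        "headers": {"location": presign_url()},
        "body": "",
    }
```

An 800 KB image, for example, base64-encodes to roughly 1.07 MB and takes the redirect path.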

Load balancer for QUIC

QUIC runs over UDP. Network Load Balancer supports UDP pass-through, so terminate QUIC at your service tier. For HTTP/3 at the balancer layer, check service support. NLB handles transport while your stack implements HTTP/3.

Rust cost savings

Rust builds small, fast binaries with low memory needs. Lower memory cuts GB-seconds cost, and faster execution reduces billed duration. Steadier p95 latency also means you need less Provisioned Concurrency headroom.

DCV replacing local Macs

For many workflows, yes. Builds, reviews, and asset prep are great fits. DCV streams a responsive macOS desktop, centralizes management, and scales up or down. For device-heavy tasks, keep a device lab in the loop.

ALB vs API Gateway

Use ALB when you want L7 load balancing and path routing with WAF and simple HTTP/S. Use API Gateway when you need deep API features like per-key throttling, usage plans, built-in authorizers, or request validation.

Auth in front of Lambda

Use ALB authentication actions with Amazon Cognito or an OIDC provider on an HTTPS listener. Users are authenticated at the load balancer before Lambda runs. This keeps auth logic centralized and consistent.

Multi region state tips

Keep the data layer ready to shift regions. Use multi-Region storage patterns like async replication. Keep caches warm in the standby region. Design idempotent writes for eventual consistency during failovers.

UDP blocks and QUIC

Keep an HTTP/2 over TCP fallback. Browsers typically start on HTTP/2 over TCP, discover HTTP/3 through the Alt-Svc response header, and stay on TCP when UDP is blocked. Monitor the split so you know how much traffic is on QUIC.
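Measuring the split can be as simple as tallying the protocol field from your service-tier access logs. This sketch assumes you have already extracted protocol strings such as “HTTP/3.0” or “HTTP/2.0”; exact spellings vary by proxy, so it matches on prefixes.

```python
from collections import Counter


def protocol_split(protocols):
    """Share of requests per HTTP major version from access-log protocol fields."""
    counts = Counter()
    for proto in protocols:
        p = proto.strip().upper()
        if p.startswith("HTTP/3"):
            counts["h3"] += 1
        elif p.startswith("HTTP/2"):
            counts["h2"] += 1
        else:
            counts["other"] += 1
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()} if total else {}
```

A falling h3 share after a client release or network change is an early sign that UDP is being blocked somewhere.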

x86 vs arm64

Test both. arm64 Graviton often gives better price and performance for CPU-bound work. Switching is usually just changing the architecture setting and rebuilding your package.

Lower cold starts

Start with the big rocks. Move heavy init outside the handler, shrink dependencies, and upgrade Java to a newer LTS with better startup. Consider Provisioned Concurrency on hot paths. Measure before and after so you can prove gains.

You want the fastest path to value? Pair ALB→Lambda for HTTP/S, failover with Route 53, and push performance-critical code into Rust or tuned Java. Put QUIC over NLB where mobile users feel it. Give your Apple team DCV-powered EC2 Macs so dev envs stop being the bottleneck. Then write a crisp additional information section that calls your shot on impact, risks, and rollbacks. That’s how you get sign-off and ship.

“Blockbuster laughed at Netflix. Your latency won’t laugh back.”

References

- ALB plus Lambda target groups

- Route 53 failover routing

- AWS Lambda runtimes for Rust and Java

- Rust on AWS Lambda developer guide

- AWS What’s New: Lambda supports Java 21 LTS

- Provisioned Concurrency for AWS Lambda

- AWS Lambda SnapStart

- Configure memory and CPU for Lambda

- Using arm64 Graviton with Lambda

- Amazon S3 pre-signed URLs

- Network Load Balancer protocols

- QUIC Core Protocol RFC 9000

- Using TLS to secure QUIC RFC 9001

- HTTP/3 RFC 9114

- Envoy HTTP/3 guide

- NGINX HTTP/3 module

- AWS WAF developer guide

- Authenticate users with an Application Load Balancer

- ALB access logs

- AWS X-Ray developer guide

- Amazon DCV

- EC2 Mac instances

- EC2 Mac user guide

- AWS Systems Manager Session Manager