Decode AWS Secret-West: Context, Playbook, Next Steps

You’ve probably seen the chatter already across Slack, email, and random forums. “AWS Secret‑West” keeps popping up in screenshots, tickets, and spicy Reddit threads about an AWS Secret West region. Cue the wild theories, because folks love a mystery. Is Amazon quietly lighting up a new classified region out west? Or is it just a game of cloud telephone, with half‑truths bouncing between acronyms and redacted PDFs?

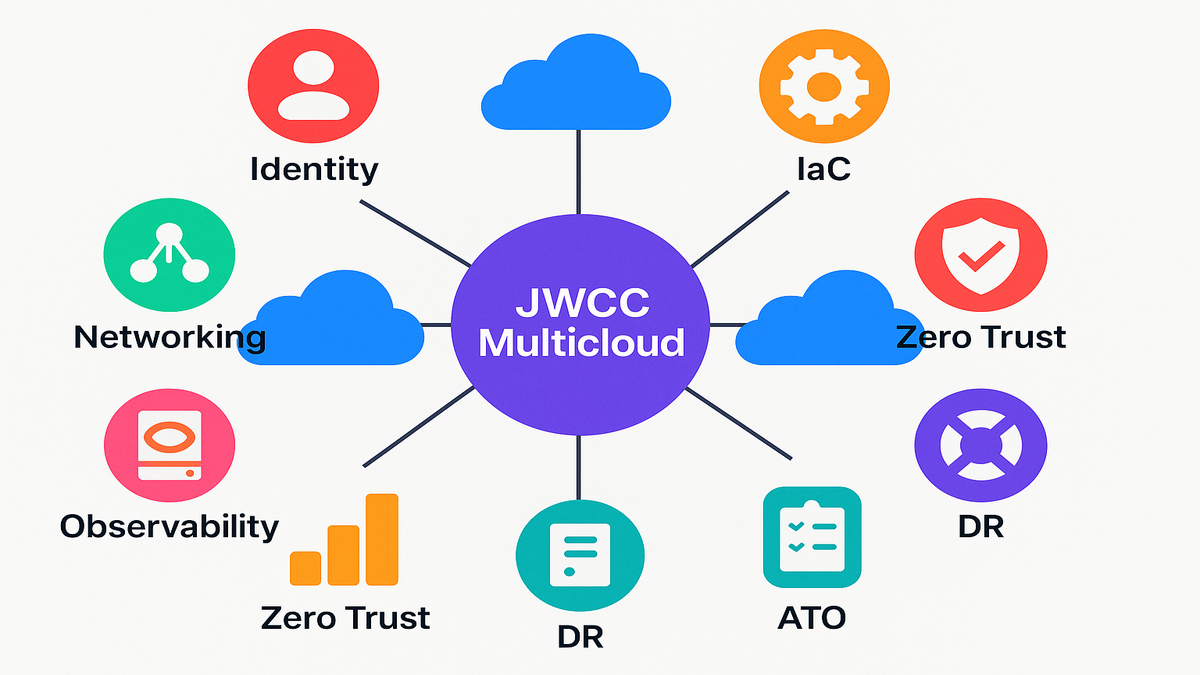

Here’s the real talk for today: classified clouds are expanding fast across the board. The DoD is moving to multicloud under JWCC, a contract vehicle with a $9B ceiling. That shift pulls capacity closer to missions, warfighters, and analysts who hate latency. Whether or not a specific “Secret‑West” gets named, the direction is pretty obvious. Expect more regions, more zones, more redundancy, and tighter latency budgets on the high side.

If you build for government, defense, or contractors, rumors won’t help you. You need a playbook that explains the tiers and gets your workloads ready.

TLDR

- “Secret‑West” likely signals more classified capacity, not a random codename tossed around.

- AWS runs a Secret Region announced in 2017 and supports Top Secret workloads.

- Azure Government Top Secret is GA now and competing hard on the high side.

- JWCC pushes multicloud and regional diversity across tiers and classification levels.

- Prep now with IaC parity, zero‑trust controls, data classification gates, and SCIF‑aware ops.

Secret West signals

What the name implies

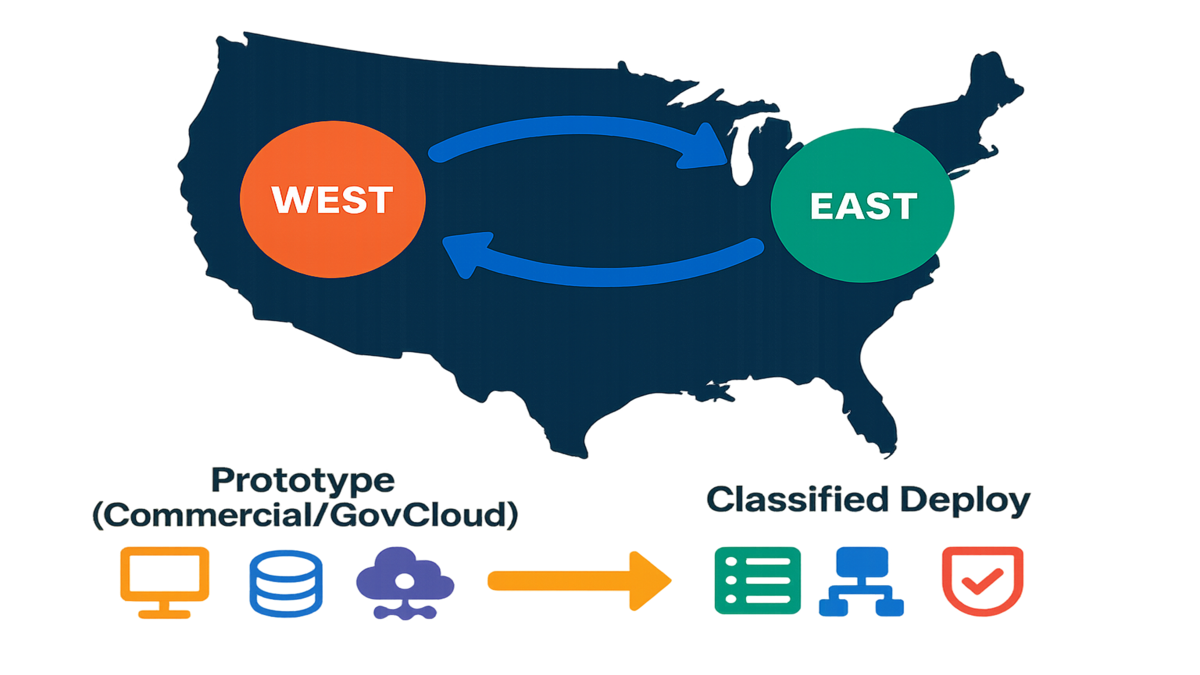

When you see “Secret‑West,” it usually points to a Secret‑level AWS region. It’s likely in the western US to cut latency for western commands and agencies. Contractors also benefit when the region is closer to their teams and sites. It also suggests region redundancy with a classic East and West pairing. That pattern supports continuity and disaster recovery without painful cross‑country dependencies.

Think of it like a gym opening closer to your house. You’ll go more often because it’s nearby and easier to reach. Missions treat compute the same way: they run more live workloads when a region is close, and they lean on it harder when services are reliable and the catalog actually fits their needs.

What not to assume

Don’t assume service parity with commercial regions or easy self‑enrollment by console click. Access is gated by clearances, sponsor approvals, and the specific classified networks you must use. Names can be shorthand in tickets, and internal labels often differ from marketing. Also, don’t expect the same release pace you see in commercial land. Classified regions have extra controls, isolation, and heavy accreditation that slow feature rollouts. New shiny things will lag a bit, and that’s normal, not a warning sign.

Practical example to copy

Say your team runs a geo‑distributed analytics pipeline that will eventually handle controlled or classified data. Prototype it in commercial AWS using services that also exist in GovCloud, like KMS and PrivateLink. Use VPC endpoints, S3 Object Lock, and org‑level CloudTrail for strong audit trails. Package everything as IaC with Terraform or CloudFormation modules you can reuse. Enforce a data classification step so that only sanitized datasets run in commercial. When ready, redeploy the same stack into a classified region with no internet egress, add cross‑domain controls, and keep code changes minimal. Zooming out, your game plan stays simple and very boring by design.

- Build once using core primitives, and avoid managed lock‑ins without proven parity guarantees.

- Treat every external call as a future exception, and prefer private endpoints and internal repos.

- Bake audit artifacts into your pipeline now, with attestations, SBOMs, and evidence packs, so you’re not scrambling later.

Why this matters right now

Classified workloads are going real‑time now, not batch jobs next week. Think ISR feeds, digital engineering, LLMs on sensitive data, and tactical edge sync. Every millisecond matters, and western capacity reduces hops and shortens replication windows. That also lowers RTO and RPO for missions that simply cannot wait. Latency wins translate to human outcomes during fires and messy logistics disruptions: less time waiting on cross‑country file transfers when the network hiccups. Pro tip: measure data flows in milliseconds and megabytes per second, not vibes. Treat latency budgets and replication windows as first‑class requirements in design docs.
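To make "not vibes" concrete, here is a minimal Python sketch of a replication‑window check. The function names (`transfer_seconds`, `fits_window`) and every number in the usage section are illustrative assumptions, not measurements from any real region. The model is deliberately crude: payload size over sustained throughput, plus a fixed number of protocol round trips.

```python
def transfer_seconds(size_mb: float, throughput_mb_s: float,
                     rtt_ms: float, round_trips: int = 1) -> float:
    """Rough time to move a payload: streaming time plus protocol round trips."""
    if throughput_mb_s <= 0:
        raise ValueError("throughput_mb_s must be positive")
    return size_mb / throughput_mb_s + round_trips * rtt_ms / 1000.0


def fits_window(size_mb: float, throughput_mb_s: float, rtt_ms: float,
                window_s: float, round_trips: int = 1) -> bool:
    """True when the estimated transfer fits inside the replication window."""
    estimate = transfer_seconds(size_mb, throughput_mb_s, rtt_ms, round_trips)
    return estimate <= window_s


if __name__ == "__main__":
    # Hypothetical inputs: 1200 MB batch, 100 MB/s sustained, 40 round trips.
    for label, rtt_ms in (("far region", 70), ("near region", 10)):
        seconds = transfer_seconds(1200, 100, rtt_ms, round_trips=40)
        ok = fits_window(1200, 100, rtt_ms, window_s=14, round_trips=40)
        print(f"{label}: {seconds:.1f} s, fits 14 s window: {ok}")
```

Swap in numbers you have actually measured on your own links. The point is that the budget lives in a design doc as arithmetic someone can check, not as an adjective.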

AWS classified stack

The basic map

- AWS GovCloud (US), with regions in US‑East and US‑West, handles CUI and ITAR workloads. It is publicly documented and widely used.

- The AWS Secret Region, announced in 2017, supports U.S. government workloads up to the Secret classification level.

- AWS also supports Top Secret workloads under the intelligence community’s C2S and C2E contracts; details stay private.

Here’s a mental model that travels well across briefs and meetings. GovCloud is your on‑ramp for CUI and ITAR, while Secret and Top Secret are highways with guardrails. Expect badges and roadblocks you must preclear before you even merge.

What SC2S means

You might see “aws sc2s” used as shorthand for Secret‑level classified environments. Acronyms vary by program, and public documents stay vague on purpose. The safest model is this: SC2S refers to the Secret tier, not to any consumer service. If you’re mapping requirements, treat SC2S as a target environment with policy constraints. Don’t overfit to the acronym; focus instead on isolation, auditability, and least privilege.

Secrets Manager vs Region

AWS Secrets Manager is a standard AWS service for storing and rotating credentials such as keys and passwords. It is not a gateway into any classified environment, despite the familiar name. You’ll still use KMS, Secrets Manager, and parameter stores for secret hygiene in GovCloud and commercial environments. In classified regions, similar patterns exist, but names, endpoints, and approvals differ. The takeaway is simple: design secret hygiene as a pattern, not as something tied to a product. Rotate on a schedule, use customer‑managed keys (CMKs) with separation of duties, and forbid plaintext handling. Those practices should follow workloads regardless of which cloud label you choose.

Parity and gaps

Service catalogs in classified regions usually lag commercial launches by months, sometimes longer. Some managed services will be missing or arrive later, and that is normal. Your job is to design for portability first, not perfect catalog checkboxes. Focus on the boring building blocks that travel across environments well.

- Core compute primitives like EC2 and containers with ECS or EKS for portability.

- Storage primitives including S3‑like object stores, block volumes, and reliable snapshot strategies.

- Networking minima such as VPCs, private endpoints, DNS, and load balancers that behave.

- Observability that works offline using self‑hosted OpenSearch or ELK plus SIEM collectors.

First‑hand example: one defense contractor packaged their ML feature store as a simple REST service. It ran on containers backed by an object store, which avoided managed ML dependencies and kept the team moving fast. When it moved to a classified region, the deploy took a day instead of a quarter.

A few more patterns travel well across regions and vendors in practice:

- Run your own queues and streams when needed: containerize a broker if managed streaming lags, and scope it tightly.

- Use lightweight feature flags to toggle behavior when a managed dependency is missing.

- Set explicit quotas and apply backpressure because capacity is precious; throttle kindly and fail soft.
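The quota and backpressure bullet can be sketched with nothing but the standard library. This is a minimal illustration, assuming a single‑process intake in front of scarce workers; the class name `BoundedIntake` and the `max_pending` parameter are hypothetical, not part of any AWS API.

```python
import queue


class BoundedIntake:
    """Accept work up to a fixed quota; reject the rest instead of piling up."""

    def __init__(self, max_pending: int):
        self._pending = queue.Queue(maxsize=max_pending)
        self.rejected = 0  # simple counter a dashboard could scrape

    def submit(self, job) -> bool:
        """Return True if queued, False if the caller should back off and retry."""
        try:
            self._pending.put_nowait(job)
            return True
        except queue.Full:
            self.rejected += 1
            return False

    def next_job(self):
        """Return the oldest queued job, or None when nothing is waiting."""
        try:
            return self._pending.get_nowait()
        except queue.Empty:
            return None
```

Returning `False` rather than raising is the "fail soft" part: the caller gets a clear signal to slow down, and nothing grows without bound while capacity is tight.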

Why capacity moves west

Latency is mission requirement

Imagine fusing wildfire intel, satellite passes, and air assets across the western US. An east‑only Secret region adds cross‑country round trips and brittle long‑haul links that can break. A western region cuts latency, shrinks jitter, and makes live operations actually feasible. Treat latency like fuel: you can only go so far before refueling. Local capacity means your tactical loops can finally close on time.

Continuity and regional independence

Agencies demand multi‑region failover with independence between failure domains and networks. East and West pairs enable a few standard patterns that actually work.

- Active‑active or pilot‑light strategies, even across classification boundaries when permitted.

- Region‑isolated blast radius for faults, maintenance windows, or ugly network partitions.

- Compliance with continuity mandates without needing to rebuild the entire world again.

The DoD’s JWCC frames the direction: a single contract vehicle that spans multiple vendors, regions, and classification tiers, and points hard toward scalable delivery. The award notes a ceiling of nine billion dollars, which signals real scale. Here are practical continuity tips you can actually implement this quarter.

- Keep state replication boring and tested with snapshots and object storage versioning.

- Choose DR patterns per workload; many survive on pilot‑light instead of full active.

- Run game days by killing a region in staging and watching dashboards honestly.

Data gravity and edge sync

More sensors, more models, and much more data are arriving every month. When edge devices in SCIFs or tactical kits collect streams, they need closer regions. Those regions handle sync, storage, and retraining with fewer delays and headaches. Western capacity cuts replication windows and reduces operator pain during peak operations. Think hard about disaster response season and all those bursty edge pipelines. Your end‑to‑end pattern probably looks like this when done correctly.

- Cache at the edge using durable queues that can survive rough links.

- Compress and encrypt in place with CMKs and envelope encryption patterns.

- Sync over private circuits when allowed, and batch transfers when bandwidth dips.
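The caching and compression steps above can be sketched with the standard library alone: a SQLite file as the durable queue and `zlib` for compression. The class name `EdgeSpool` and its methods are hypothetical. Encryption is intentionally left out because the standard library has no authenticated cipher; a real build would wrap each compressed body with envelope encryption from an approved crypto library before it touches disk.

```python
import sqlite3
import zlib


class EdgeSpool:
    """Durable, ordered spool for payloads waiting on an upstream link."""

    def __init__(self, path: str = ":memory:"):
        # Pass a file path in real use so the spool survives restarts.
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS spool ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, body BLOB NOT NULL)"
        )
        self._db.commit()

    def enqueue(self, payload: bytes) -> int:
        """Compress and persist one payload; return its spool id."""
        cur = self._db.execute(
            "INSERT INTO spool (body) VALUES (?)", (zlib.compress(payload),)
        )
        self._db.commit()
        return cur.lastrowid

    def next_batch(self, limit: int) -> list:
        """Return up to `limit` (id, payload) pairs, oldest first, without deleting."""
        rows = self._db.execute(
            "SELECT id, body FROM spool ORDER BY id LIMIT ?", (limit,)
        ).fetchall()
        return [(row_id, zlib.decompress(body)) for row_id, body in rows]

    def ack(self, ids) -> None:
        """Delete payloads only after the upstream side confirmed receipt."""
        self._db.executemany("DELETE FROM spool WHERE id = ?", [(i,) for i in ids])
        self._db.commit()

    def pending(self) -> int:
        return self._db.execute("SELECT COUNT(*) FROM spool").fetchone()[0]
```

Separating `next_batch` from `ack` is the design choice that matters: if the link drops mid‑transfer, nothing is lost, and the same batch is simply offered again.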

First‑hand field pattern

Teams pair rugged edge compute like Snowball Edge with on‑prem isolation, then sync to a nearby region once a link is available, which keeps operations steady during network issues. Add Direct Connect or private transport where allowed to stabilize and harden links. Extra credit: build a brownout mode that degrades gracefully when replication lags. Serve stale but acceptable data with clear labels until the pipes catch up.
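A brownout mode can be as small as one function that labels what it serves. The sketch below is an assumption‑heavy illustration: the `Reading` type, the `serve` function, and both thresholds (`fresh_s`, `max_stale_s`) are hypothetical and should come from your own mission requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    value: str
    synced_at: float  # epoch seconds of the last successful replication


def serve(reading: Reading, now: float,
          fresh_s: float = 60.0, max_stale_s: float = 900.0) -> dict:
    """Serve data with an explicit freshness label instead of failing outright."""
    age = now - reading.synced_at
    if age <= fresh_s:
        status, value = "fresh", reading.value
    elif age <= max_stale_s:
        # Replication is lagging: still serve, but say so clearly.
        status, value = "stale", reading.value
    else:
        # Too old to be acceptable: withhold the value rather than mislead.
        status, value = "unavailable", None
    return {"value": value, "status": status, "age_s": age}
```

Passing `now` in as a parameter keeps the function easy to test and makes the age visible to whoever consumes the response.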

Azure Top Secret multicloud

Microsoft high side posture

Microsoft made Azure Government Top Secret generally available across multiple separated regions. The translation is simple: competition is active at the Top Secret tier. You get better service velocity, more regional choice, and pressure on price‑performance. This signals a cultural shift; high‑side cloud isn’t a one‑lane road anymore. Program offices can ask for options, and engineers can push for services that fit the problem, not just whatever the contract vehicle happens to offer that quarter.

JWCC and the multicloud chessboard

JWCC brings AWS, Microsoft, Google, and Oracle under one vehicle across classifications. Program offices can mix vendors by workload, like imagery, logistics, or cross‑domain transfer. Design your architecture assuming multicloud coordination is normal, not a rare exception. Multicloud doesn’t mean double the work; it means shared rules across providers.

- One policy set, expressed once and applied cleanly across cloud providers.

- Shared tagging and labeling taxonomy so metadata travels with assets everywhere automatically.

- One evidence story that maps cleanly to every environment without rewriting audits.
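A shared taxonomy only helps if something checks it. Here is a minimal sketch of a provider‑neutral tag validator. The tag keys mirror the ones discussed in this post (classification, owner, data type), but the allowed values and the function name `tag_problems` are assumptions made for illustration.

```python
# Hypothetical taxonomy: None means "any non-empty value is acceptable".
REQUIRED_TAGS = {
    "classification": {"unclassified", "cui", "secret", "top-secret"},
    "owner": None,
    "data_type": {"imagery", "logistics", "telemetry"},
}


def tag_problems(tags: dict) -> list:
    """Return human-readable problems; an empty list means the tags conform."""
    problems = []
    for key, allowed in REQUIRED_TAGS.items():
        value = tags.get(key, "")
        if not value:
            problems.append(f"missing tag: {key}")
        elif allowed is not None and value not in allowed:
            problems.append(f"invalid value for {key}: {value}")
    return problems
```

Run the same check in every provider’s pipeline before a resource is created, and the taxonomy stays identical everywhere without anyone policing it by hand.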

Interop reality to design

Here’s the interop reality you should design for across clouds and tiers.

- Identity: federate with your IdP, enforce hardware‑backed MFA, and use short‑lived tokens.

- Networking: private endpoints, no default internet egress, and plan for cross‑domain solutions (CDS).

- Storage: data labeling and object‑level retention, immutable logs as compliance controls.

- Observability: vendor‑neutral telemetry exported via OTel, stored where your ATO allows.

Multicloud without tears

One program split geospatial preprocessing and model serving across vendors to match GPU supply. They normalized contracts with IaC and used a single tagging taxonomy across everything. Tags covered classification, ownership, and data type with consistent names and values. They gated data egress through a human‑in‑the‑loop cross‑domain workflow for approvals. The result was mission‑first, vendor‑agnostic, and very audit‑friendly when inspectors knocked. One more tip: keep a shared incident runbook that spans providers and teams. Breaches don’t care which logo you used, and your playbook shouldn’t either.

Pulse check recap

- “Secret‑West” chatter usually signals more classified capacity emerging closer to western missions.

- AWS has distinct tiers like GovCloud, Secret Region, and Top Secret capabilities.

- Parity lags in classified regions, so design for primitives and easy portability.

- Azure Government Top Secret is live, and multicloud is table stakes under JWCC.

- Prep now with IaC, zero‑trust, labeling, and SCIF‑aware operational patterns across teams.

Stop guessing codenames and build evidence‑backed stacks ready to move up classification fast.

Build for up classification

Lock in your compliance baseline

Map your controls to NIST 800‑53 and 800‑171 starting today, not tomorrow. Even if you handle only CUI, a baseline built on 800‑53 and 800‑171 and aligned with FedRAMP High shrinks the gaps you’ll face later. Bake controls into pipelines with static analysis, SBOMs, signed artifacts, and policy checks. Helpful anchors you can use without arguing for budget forever:

- Use CIS Benchmarks and DISA STIGs to harden OS images and container baselines.

- Generate machine‑readable attestations for every build with who, what, when, and dependencies.

- Store evidence in places auditors can find fast without spelunking through drives.
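For the attestation bullet, a minimal sketch looks like this. The record layout is a made‑up schema for illustration, not in‑toto, SLSA, or any other standard format, and the record is unsigned; a real pipeline would sign it with a key held outside the build job.

```python
import hashlib
import json


def build_attestation(artifact: bytes, builder: str, built_at: str,
                      dependencies: dict) -> str:
    """Record who built what, when, and from which pinned dependencies."""
    record = {
        "subject_sha256": hashlib.sha256(artifact).hexdigest(),
        "builder": builder,
        "built_at": built_at,  # pass an ISO 8601 timestamp from the pipeline
        "dependencies": dependencies,
    }
    # sort_keys makes the output byte-stable, which helps diffing and signing.
    return json.dumps(record, sort_keys=True)


def verify_attestation(artifact: bytes, attestation_json: str) -> bool:
    """Check that the attestation actually describes this artifact."""
    record = json.loads(attestation_json)
    return record["subject_sha256"] == hashlib.sha256(artifact).hexdigest()
```

Store the JSON next to the artifact in your evidence location so an auditor can match digest to build without asking anyone.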

Architect for no internet assumptions

Design as if there will never be public egress, because sometimes there won’t be. Use VPC endpoints, internal DNS, private registries, and on‑box scanning. Mirror third‑party artifacts inside private repos, and make every outbound exception earn its place. Your offline‑first checklist looks something like this for most teams:

- Artifact mirrors for packages, containers, and models that your builds depend upon.

- Internal certificate authority and automated certificate lifecycle for every service and host.

- On‑prem and in‑region cache layers to smooth outages and rough network weather.
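One cheap way to enforce the checklist above is a pipeline step that flags any dependency source pointing outside your mirrors. The sketch below assumes you can list the source URLs your build uses; the function name `external_sources` and the example domain `corp.example` are hypothetical.

```python
from urllib.parse import urlparse


def external_sources(urls, internal_suffixes):
    """Return the URLs whose host is not under an approved internal domain."""
    flagged = []
    for url in urls:
        # URLs without a parseable host are flagged too, on purpose.
        host = (urlparse(url).hostname or "").lower()
        internal = any(
            host == suffix or host.endswith("." + suffix)
            for suffix in internal_suffixes
        )
        if not internal:
            flagged.append(url)
    return flagged
```

Fail the build when the returned list is non‑empty, and every outbound exception becomes a deliberate, reviewed change instead of an accident. Matching on a leading dot keeps a lookalike host such as `evilcorp.example` from passing as `corp.example`.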

Data classification is a gate

Add structured labels to every object and dataset: owner, classification, retention, and any handling caveats. Enforce them in both code and storage. Automatically block writes that would drop higher‑classification data into lower environments. Automate quarantines and alerts for mislabels before anything leaks by mistake. Bonus pattern: refuse to serve unlabeled data as a strict default. Your future self will thank you during an audit.
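A write‑time gate for these rules fits in a few lines. This is a simplified sketch: the `LEVELS` ordering treats CUI as a rung between unclassified and Secret purely for illustration, and the function name `write_decision` and the reason strings are assumptions. Your real marking scheme, caveats, and ceilings come from your security officer, not from this snippet.

```python
# Hypothetical ordering used only to compare a label against an environment.
LEVELS = {"UNCLASSIFIED": 0, "CUI": 1, "SECRET": 2, "TOP_SECRET": 3}


def write_decision(labels: dict, env_ceiling: str) -> tuple:
    """Return (allowed, reason) for writing an object into an environment."""
    if env_ceiling not in LEVELS:
        raise ValueError(f"unknown environment ceiling: {env_ceiling}")
    level = labels.get("classification")
    if level is None:
        # Strict default: unlabeled data is never written or served.
        return (False, "quarantine: unlabeled object")
    if level not in LEVELS:
        return (False, f"quarantine: unknown classification {level!r}")
    if LEVELS[level] > LEVELS[env_ceiling]:
        return (False, f"blocked: {level} exceeds {env_ceiling} ceiling")
    return (True, "ok")
```

Call it in the storage client wrapper so that no code path can write without passing through the gate, and route every `quarantine` reason to an alert.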

Secrets and keys rotation

Whether commercial or classified, auto‑rotate credentials and use customer‑managed KMS keys. Maintain separation of duties and deny plaintext secret egress in CI and CD. Assume credentials will leak someday and build strict blast‑radius limits everywhere. Add break‑glass access with audited, time‑bound elevation paths, used only when needed. Nobody keeps standing admin forever, not even the nicest senior engineer.
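Time‑bound, audited elevation is easy to reason about once it is written down. The sketch below is an in‑memory illustration only: the `BreakGlass` class, its `max_ttl_s` cap, and the audit tuple layout are all hypothetical, and a real system would back this with your identity provider and an immutable log.

```python
class BreakGlass:
    """Grant temporary elevation with a hard TTL cap and an audit trail."""

    def __init__(self, max_ttl_s: float = 3600.0):
        self.max_ttl_s = max_ttl_s
        self.audit_log = []   # append-only list of (event, principal, reason, start, end)
        self._expiry = {}     # principal -> epoch seconds when elevation ends

    def grant(self, principal: str, reason: str, now: float, ttl_s: float) -> float:
        """Elevate a principal; the requested TTL is clamped to max_ttl_s."""
        if not reason.strip():
            raise ValueError("a reason is required for break-glass access")
        expires = now + min(ttl_s, self.max_ttl_s)
        self._expiry[principal] = expires
        self.audit_log.append(("grant", principal, reason, now, expires))
        return expires

    def is_elevated(self, principal: str, now: float) -> bool:
        """True only while an unexpired grant exists for this principal."""
        expires = self._expiry.get(principal)
        return expires is not None and now < expires
```

Because expiry is checked on every call, elevation ends on its own; nobody has to remember to revoke it.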

ATO accelerator example

A contractor built a reference architecture with machine‑checked controls and a tagging policy. They shipped hardened AMIs with drift detection baked in for repeatable deployments. When a classified opportunity opened, they deployed with verifiable evidence packs instead of assembling evidence from scratch. That shaved months off their Authority to Operate timeline without begging for exceptions. Copy the pattern below and adapt it to your program.

- Pre‑bake compliant images and sign them with a trusted chain of custody.

- Version your policy‑as‑code right alongside your IaC so changes stay traceable.

- Keep a living traceability matrix that updates itself straight from your pipeline.

People and places

Classified cloud is still human work with real people and real places involved. Invest in cleared talent, SCIF runbooks, and incident response that works offline. Train teams on CDS and data handling, plus awkward air‑gapped KVM screensharing. Also define change windows that match mission tempo and real operational rhythms. The wrong patch at the wrong hour becomes an outage with a badge.

Red team your assumptions

Run drills that break things on purpose so you can confirm real resilience.

- Kill the internet path and attempt a deploy; note anything that breaks and shouldn’t.

- Rotate a critical key on a Friday and see if your app survives.

- Simulate a misclassification and confirm quarantine triggers and alerts reach the right humans.

If you can’t run these safely in staging, you are not ready yet.

FAQ

Q: What is “AWS Secret‑West,” officially speaking?

A: It is not a product name that AWS lists publicly. In context, it likely means a western U.S. region serving Secret‑classified workloads. Classified region details rarely appear on public region pages, and naming can differ.

Q: How is the AWS Secret Region different from AWS GovCloud in practice?

A: GovCloud supports sensitive but unclassified and ITAR workloads with public onboarding. The Secret Region supports Secret‑classified workloads for authorized customers with the right clearances and network access. The bars are different, and so are the access paths.

Q: What does “aws sc2s” actually mean in these discussions?

A: It commonly refers to Secret‑level AWS environments, often expanded as Secret Commercial Cloud Services. Precise naming varies by program, and details stay intentionally sparse in public. Treat it as shorthand for the Secret tier, not any consumer service.

Q: Is AWS Secrets Manager related to Secret or Top Secret regions somehow?

A: Secrets Manager stores and rotates secrets in commercial and GovCloud environments. Classified regions have similar patterns, but access, endpoints, and approvals differ. Don’t confuse a product name with a data classification level.

Q: Can a commercial company get into a classified region for real work?

A: Only with proper clearances, sponsorship, and a genuine mission need behind it. Many prototype in commercial or GovCloud, then transition once authorized and connected.

Q: Is Azure Government Top Secret the same as AWS Top Secret capabilities?

A: They solve similar problems but remain distinct platforms with different catalogs. Under JWCC, some programs may use both across workloads depending on needs.

Q: How do DoD impact levels map to classification in broad strokes?

A: Roughly, IL5 covers many CUI workloads and IL6 addresses Secret needs. Always confirm boundaries with the DoD Cloud Computing SRG and your authorizing official.

Q: Should I expect GPU scarcity in classified regions when planning capacity?

A: Plan for constrained quotas and design schedulers with sensible backoff strategies. Normalize model serving so you can move workloads where approved capacity exists.

Pre clearance build steps

- Define a control baseline in policy‑as‑code using NIST 800‑53, 800‑171, FedRAMP High.

- Standardize IaC modules for network, compute, and storage with zero internet egress.

- Implement end‑to‑end tagging for data classification and enforce it strictly at write time.

- Centralize logs and make them immutable with Object Lock and signed attestations.

- Mirror software artifacts internally, and sign and verify everything within CI and CD.

- Use customer‑managed KMS keys, rotate secrets automatically, and enforce least‑privilege IAM.

- Build SCIF ops runbooks and CDS transfer playbooks with human‑in‑the‑loop approvals.

- Test disaster recovery across regions and aim for active‑active or pilot‑light patterns.

- Validate observability via OTel, keeping dashboards and alerts useful completely offline.

- Document evidence packs mapping controls to components for faster ATO reviews later.

30 60 90 day glidepath

- 30 days: freeze a control baseline, tag taxonomy, IaC skeleton, and private artifact mirrors.

- 60 days: enforce write‑time classification, add immutable logs, and test zero‑internet deployment.

- 90 days: run a DR game day, produce evidence pack dry runs, and brief leadership.

Here’s a closing gut‑check that actually matters for your next review board. If someone unplugged the internet from your VPCs, would your critical workloads still run? Could you still deploy and observe them cleanly without any outside help? That is the standard you’ll need to meet sooner rather than later.

If you’re still wondering whether “Secret‑West” is real, focus on the bigger trend. Classified clouds are moving closer to missions, getting more redundant, and becoming far more competitive. Your edge isn’t guessing codenames; it’s a portable, compliance‑first architecture that drops into any authorized environment and converts rumors into calm lead time.

References

- AWS Public Sector Blog: Introducing the AWS Secret Region (2017)

- AWS GovCloud (US) overview

- U.S. Department of Defense: JWCC contract announcement (“ceiling of $9 billion”)

- Microsoft: Azure Government Top Secret now generally available

- NIST SP 800-53 Rev. 5 (security and privacy controls)

- NIST SP 800-171 Rev. 2 (CUI protection)

- FedRAMP program

- AWS Snowball Edge (for edge/SCIF-style patterns)

- AWS Key Management Service

- AWS Secrets Manager

- DoD Cloud Computing Security Requirements Guide (SRG)

- CISA Zero Trust Maturity Model (v2)

- OMB M-22-09: Federal Zero Trust Strategy

- NARA CUI Registry

- DISA Security Technical Implementation Guides (STIGs)

- OpenTelemetry

- AWS PrivateLink

- AWS Interface VPC Endpoints

- Executive Order 14028 on Improving the Nation’s Cybersecurity