Secure Amazon MQ fast with HTTP auth and mTLS

Your message bus is probably more exposed than you think. Credentials sit in repos. Tokens leak in logs. One stale password can still make headlines.

Here’s the good news: Amazon MQ for RabbitMQ just made lockdown easier. You can now use HTTP-based authentication for brokers via configuration updates. And if you’re serious about zero trust, certificate-based mutual TLS (mTLS) is ready too.

Translation: centralize API authentication and authorization for Amazon MQ, with short-lived access. Tie everything back to your identity provider, clean and simple. No more long-lived broker users drifting forever. No more “who gave this microservice admin?” shocks.

If you’re already thinking OAuth 2.0 flows, service trust, and compliance checklists, you’re set. This update slides right into your architecture without drama.

We’re going to cover what these features unlock, plus a lean HTTP auth service. We’ll show where mTLS fits, and how to ship to prod cleanly, with minimal added latency and no 3 a.m. PagerDuty pings.

Spoiler: done right, it’s faster, safer, and far more auditable. Way better than juggling passwords on every single broker.

TL;DR

- Amazon MQ for RabbitMQ now supports HTTP-based authentication and certificate-based mTLS.

- Use an HTTP auth service to centralize API authentication and authorization for Amazon MQ.

- Bring your own identity: validate JWTs or OAuth 2.0 tokens in your auth service.

- mTLS adds strong client identity and pairs well with HTTP auth for defense-in-depth.

- Keep it fast: cache decisions, deploy auth near brokers, and add circuit breakers.

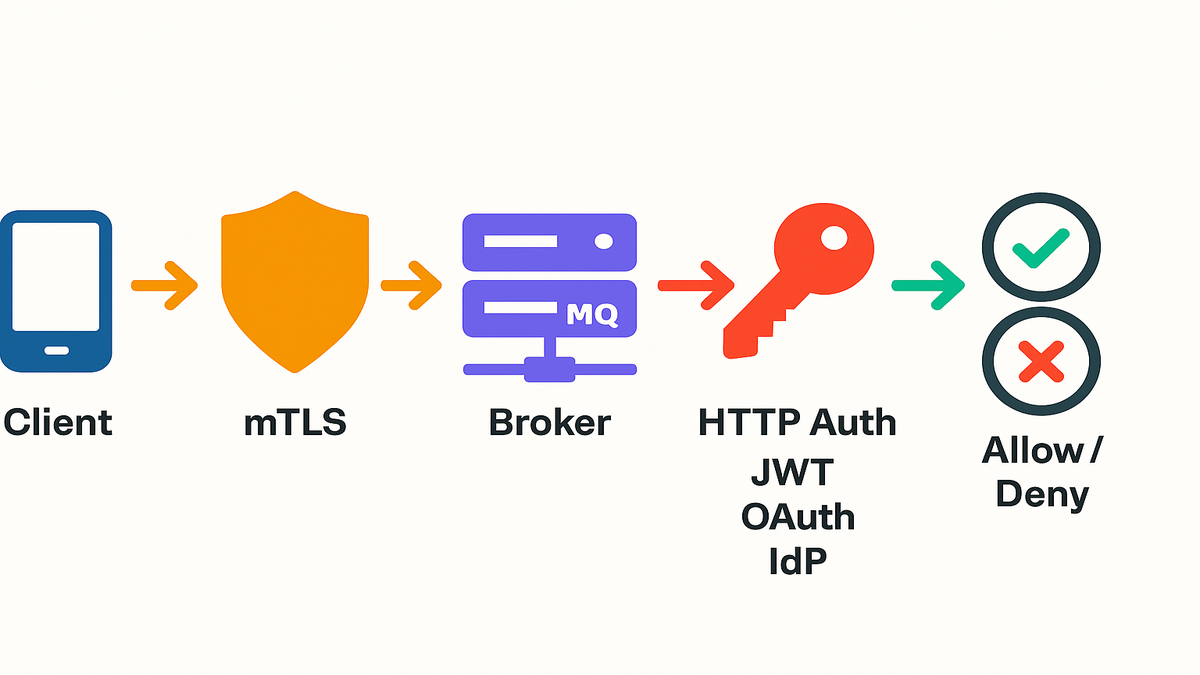

Amazon MQ HTTP auth mTLS

The short version

Amazon MQ for RabbitMQ now supports two enterprise-grade knobs you wanted for years:

HTTP-based authentication and authorization: Your broker asks your HTTP endpoint. It says, “who are you and what can you do?” You validate credentials, check claims, and return fine-grained permissions. Now you can unify policy across services, instead of babysitting per-broker users.

Certificate-based mutual TLS (mTLS): Clients present certificates during the TLS handshake. The broker verifies the cert against a trusted CA. If it’s valid, the connection is established before any tokens are even discussed.

In practice, you can run your own auth brain with this. Want OAuth 2.0 authentication and authorization for Amazon MQ? Validate a JWT, map scopes or claims to RabbitMQ rights, then return allow or deny. Want a stricter posture? Require mTLS for all producers and consumers, and still use HTTP auth for fine-grained rights. It’s layered security without coordination pain.

What this unlocks

- Single place for policy: One service decides who can connect and do what. It controls vhosts, exchanges, and queues. No more policy drift between environments.

- Short-lived credentials by default: Use tokens that expire in minutes, not passwords that linger.

- Easier offboarding: Disable a role or revoke a cert, and new access is denied across brokers as soon as cached decisions expire.

- Portable identity: Bring your IdP (Okta, Auth0, Cognito, Azure AD) via JWT validation or introspection. No broker plugin required.

- Cleaner multi-tenant isolation: Align vhosts to tenants and enforce routing-key prefixes from claims.

Why this matters now

- Consolidation: Centralize auth logic. Stop scattering credentials across brokers.

- Auditability: Every decision gets logged by your HTTP auth service and the broker.

- Least privilege: Map roles to exchanges, queues, and vhosts. Even down to routing keys.

- Zero trust: mTLS gives strong client identity from the first packet.

Real-world example: You run a payments platform under PCI scope. Producers use mTLS certs from your private CA, so you trust the workload. Your HTTP backend validates short-lived OAuth tokens on each publish. It checks the merchant_id claim against allowed routing keys, then returns precise “can publish to exchange X with routing key Y” decisions. Clean, auditable, and hard to abuse.

This pattern matches breach data today. Stolen or misused credentials still drive many incidents. Cut long-lived secrets and add cryptographic identity at transport. You’ll shrink that risk surface in a real way.

Design your HTTP backend

Implementation sketch

Think of HTTP-based authentication as a broker-to-API handshake. The broker forwards presented credentials to your endpoint. That could be username and password, a bearer token, or cert details. Your service checks identity and entitlements, then returns a decision and permissions. That’s your control plane for Amazon MQ for RabbitMQ authentication and authorization.

A pragmatic approach:

- Token validation: If you use OAuth 2.0, verify JWTs locally. Check issuer, audience, signature, and expiration. Or call your IdP’s introspection endpoint when needed. Use scopes and claims like role, tenant_id, or merchant_id to drive permissions.

- Identity stitching: If you enable mTLS, bind the client certificate subject or SAN to a service identity. Optionally require the JWT’s subject to match the cert’s identity.

- Permissions model: Map identities to RabbitMQ vhosts, exchanges, queues, and routing keys. Keep it least-privilege every time. A common pattern is tenant-scoped vhosts and claim-scoped routing keys.

- Decision responses: Return allow or deny plus tags or permissions the broker can enforce. Keep the payload small and cacheable.
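To make the token validation step concrete, here is a minimal sketch of the claim checks (issuer, audience, exp, nbf with a small skew window). It assumes the JWT signature was already verified by a JWT library against your JWKS. The issuer, audience, and skew values are hypothetical placeholders, not anything Amazon MQ defines.

```python
import time
from typing import Any, Mapping, Optional

# Hypothetical values; replace with your IdP's issuer and your own audience.
EXPECTED_ISSUER = "https://idp.example.com/"
EXPECTED_AUDIENCE = "amazon-mq"
CLOCK_SKEW_SECONDS = 30


def validate_claims(claims: Mapping[str, Any], now: Optional[float] = None) -> Optional[str]:
    """Return None when the claims are acceptable, else a short reason code.

    Assumes the signature was verified before this function is called.
    """
    now = time.time() if now is None else now
    if claims.get("iss") != EXPECTED_ISSUER:
        return "wrong_issuer"
    aud = claims.get("aud")
    # "aud" may be a single string or a list of strings.
    audiences = [aud] if isinstance(aud, str) else list(aud or [])
    if EXPECTED_AUDIENCE not in audiences:
        return "wrong_audience"
    exp = claims.get("exp")
    # A missing exp is treated as expired, so the check fails closed.
    if not isinstance(exp, (int, float)) or now > exp + CLOCK_SKEW_SECONDS:
        return "expired"
    nbf = claims.get("nbf")
    if isinstance(nbf, (int, float)) and now < nbf - CLOCK_SKEW_SECONDS:
        return "not_yet_valid"
    return None
```

Returning a reason code instead of a bare boolean keeps deny analytics cheap later on.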

First-hand example: Your “mq-auth” service runs in the same VPC and AZs as brokers. It reads your JWKS for key rotation, caches public keys and policy decisions for 60–120 seconds, and exposes health endpoints. When the broker calls, you return explicit grants, for example: connect to vhost /acme and publish to exchange invoices with routing key acme.*. Your SREs track deny rates and p95 decision latency.

To make this concrete, many teams structure a decision like this, conceptually:

- Input: connection_id, presented_token or username/password, and mTLS subject or SAN. Include requested action, target resource, and context like IP and time.

- Output: decision allow or deny, permissions like vhost and routing key patterns, and an expiry. Add a reason code for visibility and debugging.
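One way to model that input and output in code is sketched below. The field names, the `GRANTS` table, and the `decide` function are all illustrative assumptions, not a schema the broker requires.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class DecisionRequest:
    connection_id: str
    username: str
    action: str                      # e.g. "connect", "publish", "consume"
    vhost: str
    resource: str = ""               # exchange or queue name; empty for "connect"
    mtls_subject: Optional[str] = None
    source_ip: Optional[str] = None


@dataclass(frozen=True)
class Decision:
    allow: bool
    reason: str                      # reason code for visibility and debugging
    ttl_seconds: int = 0             # how long the decision may be cached
    permissions: Tuple[str, ...] = ()


# Hypothetical grant table: (username, vhost) -> allowed (action, resource) pairs.
GRANTS = {
    ("invoice-producer", "/acme"): {("connect", ""), ("publish", "invoices")},
}


def decide(req: DecisionRequest) -> Decision:
    """Default deny: allow only when an explicit grant matches."""
    allowed = GRANTS.get((req.username, req.vhost), set())
    if (req.action, req.resource) in allowed:
        return Decision(True, "granted", ttl_seconds=60,
                        permissions=(f"{req.action}:{req.resource}",))
    return Decision(False, "no_matching_grant")
```

Because `decide` depends only on its input and a static table, the same request always yields the same decision, which keeps retries harmless.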

Operational considerations for your auth API:

- Response headers: Set Cache-Control with a short max-age that matches your decision TTL. This tells intermediaries how long a decision can safely be cached.

- Health endpoints: Provide /health and /ready so brokers and load balancers route smartly.

- Idempotency and retries: Make decisions pure functions of inputs. Be chill about brief duplicate calls during connection storms.

- Time sync: Enforce small clock skew windows for exp and nbf. Keep NTP healthy everywhere.
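Here is a rough sketch of those considerations as a pure request handler. It assumes the broker follows the contract of the open-source RabbitMQ HTTP auth backend plugin, which sends parameters such as username, vhost, resource, name, and permission and expects a plain-text `allow` or `deny` body. The `/auth/resource` path, the grant table, and the TTL are hypothetical, and nothing here assumes the broker honors the Cache-Control header.

```python
from typing import Dict, Mapping, Tuple

DECISION_TTL_SECONDS = 60  # assumption: matches your decision cache TTL

Response = Tuple[int, Dict[str, str], str]  # (status, headers, body)

# Hypothetical grants: username -> set of (vhost, resource, name, permission).
GRANTS = {
    "invoice-producer": {("/acme", "exchange", "invoices", "write")},
}


def _decision(allow: bool) -> Response:
    headers = {
        "Content-Type": "text/plain",
        "Cache-Control": f"private, max-age={DECISION_TTL_SECONDS}",
    }
    return 200, headers, "allow" if allow else "deny"


def handle(path: str, params: Mapping[str, str]) -> Response:
    """Pure function of its inputs, so duplicate calls are harmless."""
    if path in ("/health", "/ready"):
        return 200, {"Content-Type": "text/plain", "Cache-Control": "no-store"}, "ok"
    if path == "/auth/resource":
        key = (
            params.get("vhost", ""),
            params.get("resource", ""),
            params.get("name", ""),
            params.get("permission", ""),
        )
        return _decision(key in GRANTS.get(params.get("username", ""), set()))
    return _decision(False)  # unknown paths are denied by default
```

Wiring `handle` into a real HTTP server is left out on purpose; keeping the logic in a plain function makes it easy to unit test.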

Authorize like an adult

- Principle of least privilege: Default deny. Only grant the minimum exchanges, queues, and routing keys.

- Tenant boundaries: vhosts are your friend. Keep tenants isolated and cross-tenant routes explicit.

- Time-bounded access: Favor short-lived tokens over static passwords.

- Emergency e-stop: Add a kill switch in your auth service. Deny risky routes fast if you detect abuse.

Pro tip: Want OAuth 2.0 authentication and authorization for Amazon MQ, but no native token parsing? Do it in your HTTP backend. You control validation logic, so you can fit any IdP. No waiting for broker plugin support.

Policy modeling tips:

- Use policy templates by workload class like producer, consumer, and admin. Parameterize by tenant.

- Keep routing key wildcards tight. Prefer tenantA.invoices.* over a tenant-wide catch-all.

- Version policies and store them as code. Push changes through CI/CD with approvals and tests.
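As a sketch of templated policies, the snippet below renders per-tenant patterns from a workload class and checks routing keys against them. It assumes your policies use AMQP topic-style wildcards, where `*` matches exactly one word and `#` matches zero or more. The template contents and function names are made up for illustration.

```python
from typing import Dict, List, Sequence

# Hypothetical templates keyed by workload class, parameterized by tenant.
TEMPLATES: Dict[str, Dict[str, List[str]]] = {
    "producer": {"write": ["{tenant}.invoices.*"], "read": []},
    "consumer": {"write": [], "read": ["{tenant}.invoices.*"]},
}


def _match(pattern: Sequence[str], key: Sequence[str]) -> bool:
    if not pattern:
        return not key
    if pattern[0] == "#":
        # "#" may absorb zero or more words.
        return any(_match(pattern[1:], key[i:]) for i in range(len(key) + 1))
    if not key:
        return False
    return pattern[0] in ("*", key[0]) and _match(pattern[1:], key[1:])


def topic_matches(pattern: str, routing_key: str) -> bool:
    """AMQP topic-style match on dot-separated words."""
    return _match(pattern.split("."), routing_key.split("."))


def render_policy(workload_class: str, tenant: str) -> Dict[str, List[str]]:
    template = TEMPLATES[workload_class]
    return {perm: [p.format(tenant=tenant) for p in pats]
            for perm, pats in template.items()}


def is_allowed(policy: Dict[str, List[str]], permission: str, routing_key: str) -> bool:
    return any(topic_matches(p, routing_key) for p in policy.get(permission, []))
```

A tight pattern like `tenantA.invoices.*` rejects deeper keys such as `tenantA.invoices.created.v2`, which is the point of keeping wildcards narrow.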

Testing strategy:

- Write table-driven tests for decisions. Given claims X and request Y, expect decision Z.

- Include deny-first tests to prove “closed by default” holds under pressure.

- Add negative tests for expired tokens, wrong audience, and mismatched mTLS identity.
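A table-driven layout for these tests could look like the sketch below. The `decide` function is a toy stand-in for your real decision logic, and the claim names and audience value are assumptions.

```python
import unittest

AUDIENCE = "amazon-mq"  # hypothetical audience value


def decide(claims: dict, request: dict, now: int) -> str:
    """Toy decision function, used only to demonstrate the test layout."""
    if claims.get("aud") != AUDIENCE:
        return "deny:wrong_audience"
    if now >= claims.get("exp", 0):
        return "deny:expired"
    if request.get("mtls_identity") != claims.get("sub"):
        return "deny:identity_mismatch"
    tenant = claims.get("tenant_id")
    if not tenant or not request.get("routing_key", "").startswith(tenant + "."):
        return "deny:tenant_scope"
    return "allow"


BASE_CLAIMS = {"aud": AUDIENCE, "exp": 2000, "sub": "svc-a", "tenant_id": "acme"}
BASE_REQUEST = {"mtls_identity": "svc-a", "routing_key": "acme.invoices.created"}

# (name, claim overrides, request overrides, expected decision)
CASES = [
    ("valid token and identity", {}, {}, "allow"),
    ("expired token", {"exp": 999}, {}, "deny:expired"),
    ("wrong audience", {"aud": "api://other"}, {}, "deny:wrong_audience"),
    ("mismatched mTLS identity", {}, {"mtls_identity": "svc-b"}, "deny:identity_mismatch"),
    ("cross-tenant routing key", {}, {"routing_key": "other.invoices.created"}, "deny:tenant_scope"),
]


class DecisionTableTest(unittest.TestCase):
    def test_table(self):
        for name, claim_patch, request_patch, expected in CASES:
            with self.subTest(name):
                claims = {**BASE_CLAIMS, **claim_patch}
                request = {**BASE_REQUEST, **request_patch}
                self.assertEqual(decide(claims, request, now=1000), expected)

    def test_closed_by_default(self):
        # No claims and no request context must never produce an allow.
        self.assertTrue(decide({}, {}, now=1000).startswith("deny"))
```

Adding a new case is one line in `CASES`, which makes it cheap to capture every incident as a regression test.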

Common pitfalls to avoid:

- Overbroad audiences: JWTs minted for “api://*” make scope control fuzzy. Narrow them.

- Ignoring nbf and exp: Respect token lifetime. Don’t accept expired tokens silently.

- Hardcoding JWKs: Fetch and cache JWKS. Rotate keys without code deploys.

- Conflating OIDC and OAuth: OIDC handles identity. OAuth handles authorization. Use the right claims.

- Skipping correlation IDs: Without them, tracing decisions across systems is painful later.

Beyond passwords with mTLS

How mTLS works

With certificate-based mutual TLS, both sides present certs during the handshake. The broker verifies the client cert against a trusted CA. Only then does the connection proceed. The win is strong, cryptographic client identity before app credentials.

In Amazon MQ for RabbitMQ, enabling mTLS usually involves:

- Uploading or referencing a trusted CA bundle in your broker config.

- Requiring client certificate validation during TLS handshakes.

- Distributing client certs and private keys to approved workloads.

This is high-signal authentication that’s tough to phish. Pair mTLS with HTTP authorization for real power. mTLS proves the workload identity. Your HTTP backend enforces permissions using runtime context like token scopes and time-of-day.
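On the client side, presenting a certificate mostly comes down to building the right TLS context. The sketch below uses Python's standard `ssl` module; the function name and the idea of optional file paths are illustrative, and how you hand the context to your AMQP client depends on the library you use.

```python
import ssl
from typing import Optional


def build_mtls_client_context(
    ca_file: Optional[str] = None,
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a client-side TLS context that can present a client certificate.

    ca_file:   CA bundle used to verify the broker (system trust store if None).
    cert_file: client certificate chain in PEM format.
    key_file:  private key matching the client certificate.
    """
    # create_default_context enables certificate and hostname verification.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file:
        # Load the full chain so the broker can build a path to the trusted CA.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```

Keeping the paths as parameters lets the same code run with certs mounted from a secrets store in each environment.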

First-hand example: Your ingestion service runs on EKS. Each deployment gets a unique mTLS cert from your private CA. Think AWS Certificate Manager Private CA for this. You roll certs every 30 days with a GitOps job. The broker only accepts client certificates signed by that CA. On connect, your HTTP backend still checks if that workload may publish to the high-priority exchange. Defense in depth done right.

Details that bite in prod:

- Subject Alternative Names: Prefer SANs over CN. Encode service identity in DNS or URI SAN fields you can parse.

- Chain completeness: Distribute intermediates so clients present a full chain. Partial chains cause weird failures.

- Revocation strategy: Favor short lifetimes and automated rotation. CRLs or OCSP help but add complexity.

- Cipher suites and TLS versions: Set a modern baseline. Trim weak ciphers to satisfy compliance.

- Clock drift: Cert validity windows are strict. Keep NTP healthy in every node group.

Operational playbook

- Certificate lifecycle: Use an internal CA like ACM Private CA to automate issuance and renewal. Keep rotation under 90 days; 30 is better.

- Secure storage: Deliver certs and keys via a secure channel. Use Secrets Manager, sealed secrets, or a sidecar. Limit filesystem exposure.

- Revocation: Maintain a revocation path. If a key leaks, yank the cert and update trust. Cut access immediately.

- Observability: Export TLS client identity into connection metadata and logs. You should trace who did what.
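A small inventory check can back the lifecycle point above. This sketch flags certs that are expired or close to expiry; the `CertRecord` shape and the 10-day default window are assumptions you would tune to your rotation cadence.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List


@dataclass(frozen=True)
class CertRecord:
    workload: str
    not_after: datetime  # timezone-aware expiry read from the certificate


def due_for_rotation(
    inventory: Iterable[CertRecord],
    now: datetime,
    window: timedelta = timedelta(days=10),
) -> List[str]:
    """Return workloads whose cert is expired or expires within the window."""
    return sorted(c.workload for c in inventory if c.not_after - now <= window)
```

Run it on a schedule and alert on a non-empty result, so rotation failures surface before the expiry does.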

Compliance note: mTLS strengthens “strong auth for non-human identities” in SOC 2 and ISO 27001. It helps in PCI too. Add short-lived tokens in your HTTP backend for layered controls that auditors appreciate.

Practical enhancements:

- Service identity standard: Define a naming scheme like spiffe://org/env/service. Make mappings predictable.

- Drift detection: Scan connected clients and verify their cert subjects match real workloads.

- Blue/green rotations: Issue new certs in parallel, flip clients, then remove old roots. Avoid downtime.
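If you adopt a spiffe://org/env/service naming scheme, parsing it strictly makes identity stitching predictable. The sketch below assumes the cert's URI SAN follows that three-part layout and that the JWT subject carries the same URI; both are conventions you would have to enforce yourself.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass(frozen=True)
class ServiceIdentity:
    org: str
    env: str
    service: str


def parse_service_identity(uri_san: str) -> ServiceIdentity:
    """Parse a URI SAN shaped like spiffe://org/env/service, or raise ValueError."""
    parts = urlsplit(uri_san)
    segments = parts.path.split("/")[1:]  # drop the empty piece before the first "/"
    if (
        parts.scheme != "spiffe"
        or not parts.netloc
        or parts.query
        or parts.fragment
        or len(segments) != 2
        or not all(segments)
    ):
        raise ValueError(f"unexpected service identity: {uri_san!r}")
    return ServiceIdentity(parts.netloc, segments[0], segments[1])


def identities_match(uri_san: str, jwt_subject: str, expected_env: str) -> bool:
    """True only when the cert identity parses, is in the right env, and equals the JWT sub."""
    try:
        identity = parse_service_identity(uri_san)
    except ValueError:
        return False
    return identity.env == expected_env and jwt_subject == uri_san
```

Rejecting anything that does not parse cleanly keeps odd SANs from slipping through as a match.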

Ship to prod

Keep it fast

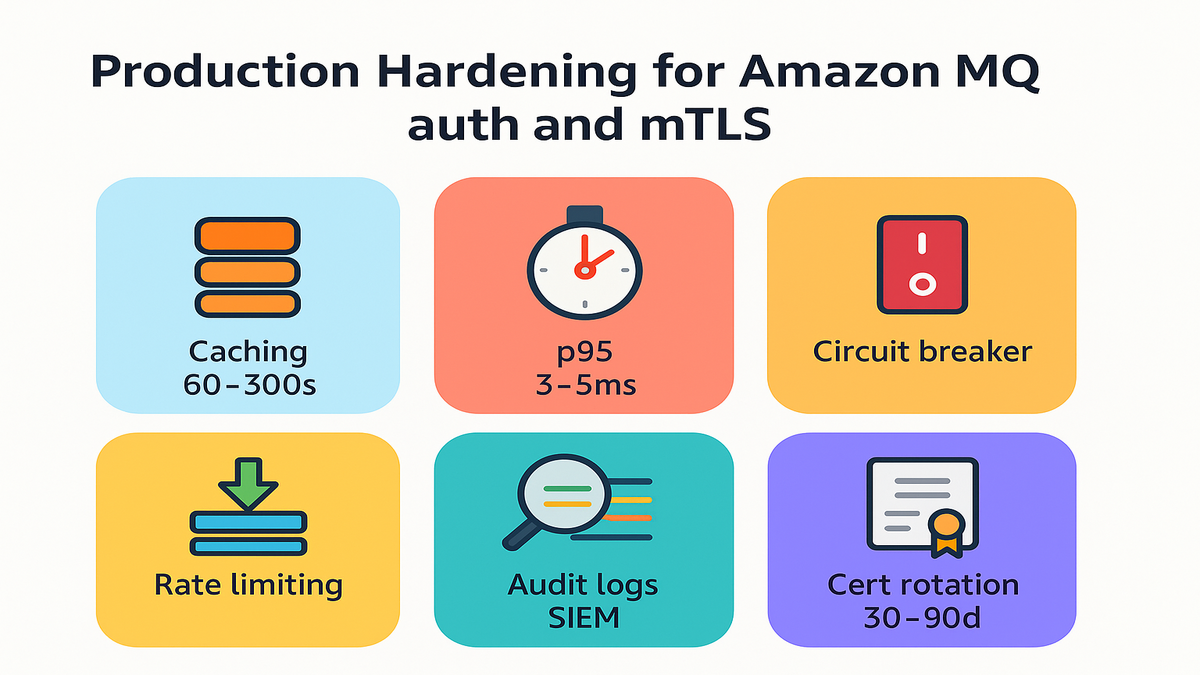

Your HTTP auth service should sit close to the broker. Same region and, ideally, same AZs. Latency matters because the broker will call it during connects and checks.

Performance guardrails:

- Caching: Cache token keys like JWKS for hours. Cache authorization decisions for 60–300 seconds. Invalidate on policy changes.

- Circuit breaking: If the auth service degrades, have clear failure behavior. Safer default is fail-closed for critical routes. Consider a limited fail-open for read-only consumers if business continuity demands it. Document that exception.

- Horizontal scale: Use autoscaling and track p95 and p99 latency. Keep p95 decision time low. Make it a rounding error on connection setup.

- Rate limiting: Protect your auth service from floods. Rate limit per broker, per source, and per client identity.

- IdP dependencies: If you introspect tokens, cache responses hard and set strict timeouts. Prefer local JWT verification when you can.
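Inside the auth service, caching and circuit breaking can be sketched with a TTL cache in front of a slow upstream such as token introspection or a policy store. The class names, thresholds, and the fail-closed default below are illustrative assumptions.

```python
import time
from typing import Callable, Dict, Hashable, Optional, Tuple


class TTLDecisionCache:
    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[bool, float]] = {}

    def get(self, key: Hashable) -> Optional[bool]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        decision, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]
            return None
        return decision

    def put(self, key: Hashable, decision: bool) -> None:
        self._entries[key] = (decision, self._clock() + self._ttl)

    def clear(self) -> None:
        """Call on policy changes so stale grants do not linger."""
        self._entries.clear()


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after: float = 10.0,
                 clock: Callable[[], float] = time.monotonic):
        self._threshold = failure_threshold
        self._reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def allow_call(self) -> bool:
        if self._opened_at is None:
            return True
        # After the cool-down, let a probe call through (half-open).
        return self._clock() - self._opened_at >= self._reset_after

    def record_success(self) -> None:
        self._failures = 0
        self._opened_at = None

    def record_failure(self) -> None:
        self._failures += 1
        if self._failures >= self._threshold:
            self._opened_at = self._clock()


def cached_decision(key: Hashable, cache: TTLDecisionCache, breaker: CircuitBreaker,
                    backend: Callable[[Hashable], bool]) -> bool:
    hit = cache.get(key)
    if hit is not None:
        return hit
    if not breaker.allow_call():
        return False  # fail closed while the upstream is unhealthy
    try:
        decision = backend(key)
    except Exception:
        breaker.record_failure()
        return False  # fail closed on upstream errors
    breaker.record_success()
    cache.put(key, decision)
    return decision
```

Injecting the clock keeps the expiry and cool-down logic testable without sleeping.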

First-hand example: One team colocated two auth API replicas behind an internal NLB. They measured p95 at around 3–5 ms. They also cut connection storms by pre-warming client pools. A small LRU cache of decisions per identity slashed repeat-check latency.

Warm-up strategies that help:

- Preload JWKS and policy caches on startup. Avoid cold misses during deploys.

- Stagger client restarts to avoid thundering herds after deploys or failovers.

- Reuse connections at the client level to reduce connect churn.

Capacity and resilience checklist:

- Load test connect and publish bursts that mirror real deploys.

- Define SLOs like p95 decision under 10 ms and error rate under 0.1%.

- Measure broker retry behavior so you can spot auth failures versus backpressure.

- Keep dashboards for decision latency, deny rate by reason, and cache hit ratio.
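The SLO example above can be checked mechanically in a load test. This sketch computes a nearest-rank percentile over successful decision latencies and compares it with the limits from the checklist; the function names and the way errors are counted are assumptions.

```python
from typing import Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile for an integer pct in 1..100."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in 1..100")
    ordered = sorted(samples)
    rank = (pct * len(ordered) + 99) // 100  # ceil(pct / 100 * n) in integer math
    return ordered[rank - 1]


def meets_slo(
    latencies_ms: Sequence[float],
    error_count: int,
    p95_limit_ms: float = 10.0,
    max_error_rate: float = 0.001,
) -> bool:
    """latencies_ms holds successful decisions; errors are counted separately."""
    total = len(latencies_ms) + error_count
    p95 = percentile(latencies_ms, 95)
    return p95 < p95_limit_ms and error_count / total < max_error_rate
```

Failing the load test when `meets_slo` returns False turns the SLO into a release gate instead of a dashboard footnote.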

Prove it to auditors

- Correlate everything: Log a connection_id or correlation_id in broker logs and your auth service. Tie decisions to identities and presented claims.

- Immutable logs: Ship logs to a write-once bucket or a SIEM with tamper-evident storage.

- Deny analytics: Track deny reasons like expired token or invalid cert. Help your security team spot patterns fast.

- Change control: Version your policies. When you change publish rights, know when and why.
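A structured decision log might look like the sketch below. It records identity, selected claims, the decision, and a reason code, and it stores only a short hash prefix of the token rather than the token itself. The field names and the space-delimited `scope` claim are assumptions about your token format.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Mapping, Optional


def audit_record(
    correlation_id: str,
    mtls_subject: Optional[str],
    claims: Mapping[str, Any],
    resource: str,
    decision: str,
    reason: str,
    token: str,
    now: Optional[datetime] = None,
) -> str:
    """Return one JSON log line; the raw token is never written."""
    now = now or datetime.now(timezone.utc)
    record = {
        "ts": now.isoformat(),
        "correlation_id": correlation_id,
        "mtls_subject": mtls_subject,
        "token_subject": claims.get("sub"),
        "token_scopes": str(claims.get("scope", "")).split(),
        # A short fingerprint lets you correlate events without storing the secret.
        "token_sha256_prefix": hashlib.sha256(token.encode("utf-8")).hexdigest()[:16],
        "resource": resource,
        "decision": decision,
        "reason": reason,
    }
    return json.dumps(record, sort_keys=True)
```

One JSON object per line is easy to ship to a SIEM and easy to mask further if an auditor asks for samples.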

Reference point: Verizon’s DBIR keeps flagging stolen credentials and privilege misuse. Cut long-lived credentials and add mTLS to reduce risk.

Evidence packet your auditors will love:

- Policy snapshots at a point in time.

- Sample decision logs with correlation IDs, masked where needed.

- Certificate inventory with validity windows and rotation cadence.

- Test results for deny-first cases and incident drills like revocation and expiry.

Quick pulse check

- You centralized permissions in an HTTP auth service, not across brokers.

- Tokens are short-lived, validated locally, and mapped to least-privilege rights.

- mTLS is enabled for workloads that can support it, with automated rotation.

- Decision latency stays single-digit milliseconds and is tracked with SLOs.

- Logs correlate broker connections, token claims, and decisions for audits.

FAQ: what teams ask

1) Does Amazon MQ for RabbitMQ support OAuth 2.0 natively?

Amazon MQ’s new capability is HTTP-based authentication. Your broker calls an HTTP endpoint you control. You can implement OAuth 2.0 authentication and authorization for Amazon MQ there by validating JWTs or using token introspection. You get OAuth benefits without native broker-side plugins.

2) Can I combine mTLS and HTTP-based auth?

Yes. Use mTLS for strong client identity at the transport layer. Then use HTTP-based authorization for fine-grained permissions. Many teams require both for defense-in-depth and clearer audit trails.

3) What’s the latency impact of HTTP-based authentication?

It depends on proximity and caching. Colocate the auth service with brokers. Cache keys and decisions. Keep p95 decision latency in low milliseconds. Measure during connection storms to avoid thundering herds.

4) How do I rotate client certificates without downtime?

Issue overlapping certs ahead of expiry. Update clients to present the new cert. Keep old and new roots trusted briefly. After cutover, remove the old trust chain and revoke the old certs. Automate this with CI/CD or an operator.

5) How should I model permissions for multi-tenant systems?

Use vhosts per tenant to isolate namespaces. Map claims like tenant_id and role to exchanges and queues within that tenant’s vhost. Restrict routing keys to a tenant prefix like tenantA.*. Deny cross-tenant routes unless explicitly required.

6) What logs do auditors expect?

Capture client identity like mTLS subject, token claims like subject and scopes, and the decision. Include resource details, reason code, and correlation_id. Store immutably and retain per your compliance regime.

Additional common questions

7) Do I still need IAM or security groups?

Yes. Think in layers. Network controls restrict where traffic comes from. mTLS authenticates the workload. HTTP auth decides what it can do. They work together.

8) What if a legacy client can’t do mTLS?

Start with HTTP-based authentication only, scoped rights, and short-lived tokens. Isolate legacy clients in their own vhost. Plan a path to mTLS soon.

9) How do I handle cross-environment access like dev to staging?

Avoid it by default. If needed, issue separate certs and audiences per environment. Deny cross-env routes in policy.

Turn it on fast

1) Inventory producers and consumers and group them by tenant and workload.

2) Stand up an internal HTTP auth API near your brokers. Add health checks and metrics.

3) Implement JWT validation or token introspection. Cache keys and claims smartly.

4) Map identities to vhosts, exchanges, queues, and routing keys with least privilege.

5) Enable HTTP-based authentication in your Amazon MQ for RabbitMQ configuration.

6) Enable mTLS. Load your trusted CA and distribute client certs securely.

7) Test happy-path and denial cases. Simulate deploy storms to be sure.

8) Add caching and circuit breakers. Set SLOs for p95 and p99 decision latency.

9) Wire logs to your SIEM with correlation IDs. Rehearse cert and token rotation.

Close the loop with chaos drills. Kill the auth service, revoke a cert, expire a token. Confirm the system fails safe.

You want a simple, verifiable story. Connections are authenticated at transport via mTLS. Actions are authorized centrally via HTTP. All decisions are logged and correlated. That’s it. This approach shrinks blast radius, raises the bar with crypto identity, and keeps you quick with policies as code.

The real unlock is cultural. Treat messaging like any protected API. Use short-lived credentials. Automate rotation. Track SLOs. Once the team lives that, turning on HTTP-based auth and mTLS isn’t a migration. It’s a real upgrade.

References

- Amazon MQ product page

- Amazon MQ documentation

- RabbitMQ official documentation

- Cloudflare Learning: What is mutual TLS (mTLS)?

- Verizon Data Breach Investigations Report (DBIR)

- RFC 6749: The OAuth 2.0 Authorization Framework

- RFC 7519: JSON Web Token (JWT)

- RFC 7662: OAuth 2.0 Token Introspection

- RFC 7517: JSON Web Key (JWK)

- AWS Certificate Manager Private CA

- Amazon S3 Object Lock

- SPIFFE and SPIRE

- NIST SP 800-63