Turn Reviews Into Revenue With Amazon Data Kiosk

Jacob Heinz

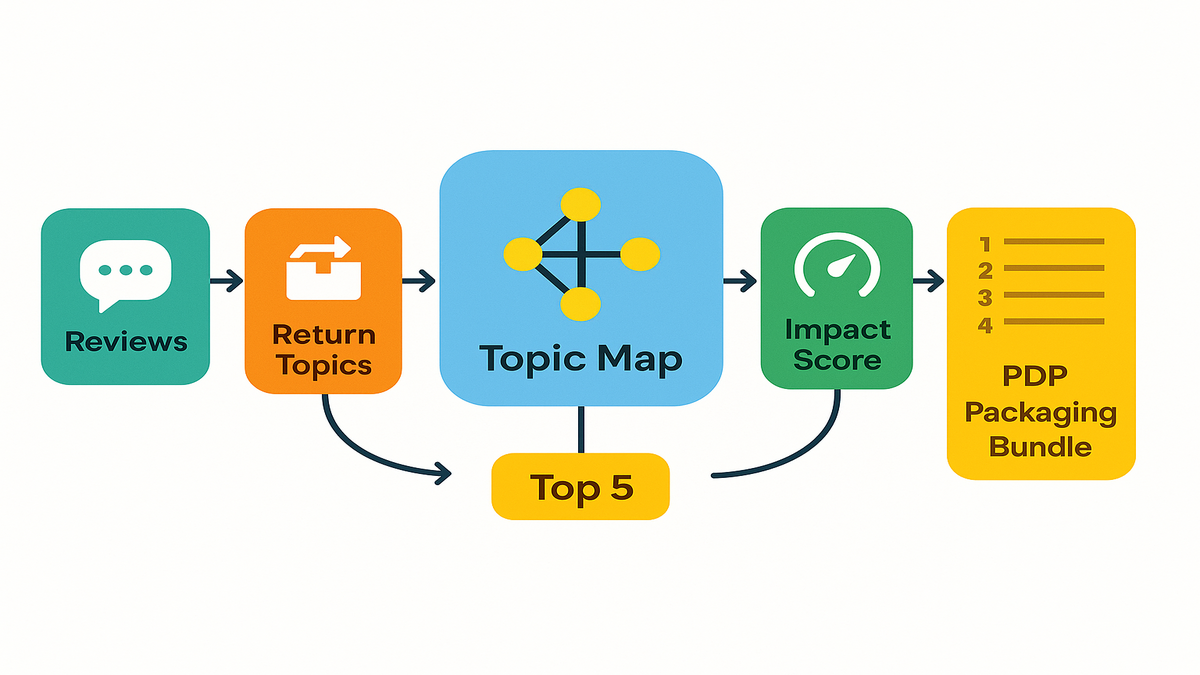

Pair customerReviews with customerReturnTopics to link what customers say with what they do. Use the combined signal to improve product detail pages (PDPs), packaging, and instructions, lifting conversion while cutting returns.

TL;DR

- Join reviews and return topics by ASIN or SKU, in weekly time buckets.

- Build a topic map, and score impact (frequency x sentiment intensity x margin hit). Fix the top issues, then measure for 4 to 6 weeks.

- Watch for sampling bias, vague reason codes, language mix, and privacy.

What the two signals provide

- customerReviews: structured review text, ratings, timestamps, and verified-purchase flags. Use it to extract sentiment and themes across your reviews.

- customerReturnTopics: grouped reasons customers return items, by product and time. Use it to validate which review themes actually drive returns.

4-step playbook

1 Build a topic map

- Start with 10 to 20 seed topics that cover common pain points: fit, sizing, color accuracy, installation, compatibility, durability, noise, battery, packaging damage, odor, defects, missing parts, warranty, shipping damage, and instructions.

- For each topic, gather evidence from reviews (snippets, counts, sentiment) and from returns (topic counts or rates). Close each topic with a concrete proposed action, no hand-waving.
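A first topic map can be plain keyword matching. The sketch below tags each review or return comment with seed topics using regular expressions and counts how many texts mention each topic. The topic names and keyword lists are hypothetical examples, not part of Data Kiosk, and would need tuning against your own text.

```python
import re
from collections import Counter

# Hypothetical seed keywords for a few of the topics above; extend and tune
# them against your own review text and return comments.
SEED_TOPICS = {
    "sizing": ["runs small", "runs large", "too small", "too big", "size"],
    "color_accuracy": ["color", "colour", "shade"],
    "install": ["install", "setup", "set up", "mount"],
    "packaging_damage": ["crushed", "dented", "box was damaged"],
    "missing_parts": ["missing", "not included"],
}

# One case-insensitive pattern per topic. The leading \b anchors each keyword at
# a word start, so "install" also matches "installed" and "installation".
PATTERNS = {
    topic: re.compile(r"\b(?:" + "|".join(map(re.escape, keywords)) + ")", re.IGNORECASE)
    for topic, keywords in SEED_TOPICS.items()
}


def tag_topics(text: str) -> set[str]:
    """Return every seed topic with at least one keyword in the text."""
    return {topic for topic, pattern in PATTERNS.items() if pattern.search(text)}


def topic_counts(texts: list[str]) -> Counter:
    """Count the texts that mention each topic (at most once per text)."""
    counts = Counter()
    for text in texts:
        counts.update(tag_topics(text))
    return counts


reviews = [
    "Runs small, order a size up",
    "Color looks nothing like the photo",
    "Screws were missing and setup took an hour",
    "Installation instructions were confusing",
]
print(topic_counts(reviews))
```

Keyword flags miss paraphrases, so spot-check a sample of untagged snippets before trusting the counts.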

2 Score impact and prioritize

- Frequency: mentions per 1,000 orders across reviews and returns.

- Sentiment intensity: weight strongly negative content more heavily than mildly negative content.

- Margin hit: approximate dollars at risk per occurrence, either binned (low, medium, high) or expressed as a numeric factor.

- Impact score = normalized frequency x sentiment intensity x margin hit. Rank all topics by score and pick the top 5 to tackle.
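The scoring rule above can be sketched in a few lines. This version assumes frequency is normalized by dividing each topic's per-1,000-order rate by the largest rate, and it uses hypothetical weights for intensity and margin bins; the article does not prescribe exact numbers.

```python
from dataclasses import dataclass

# Hypothetical weights; the article only says to weight strong negatives more
# and to bin margin hit, so these exact numbers are placeholders.
SENTIMENT_WEIGHT = {"mild": 1.0, "strong": 2.0}
MARGIN_WEIGHT = {"low": 1.0, "medium": 2.0, "high": 3.0}


@dataclass
class TopicStats:
    topic: str
    mentions: int   # review mentions plus return-topic counts in the window
    orders: int     # orders in the same window
    intensity: str  # "mild" or "strong"
    margin: str     # "low", "medium", or "high"


def impact_scores(stats: list[TopicStats]) -> list[tuple[str, float]]:
    """Rank topics by normalized frequency x intensity x margin hit."""
    # Frequency per 1,000 orders keeps high- and low-volume windows comparable.
    freq = {s.topic: 1000 * s.mentions / s.orders for s in stats}
    # Scale frequency to [0, 1] by dividing by the largest value.
    top = max(freq.values()) or 1.0
    scored = [
        (s.topic, freq[s.topic] / top * SENTIMENT_WEIGHT[s.intensity] * MARGIN_WEIGHT[s.margin])
        for s in stats
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


stats = [
    TopicStats("sizing", mentions=60, orders=4000, intensity="mild", margin="medium"),
    TopicStats("packaging_damage", mentions=30, orders=4000, intensity="strong", margin="high"),
    TopicStats("color_accuracy", mentions=40, orders=4000, intensity="mild", margin="low"),
]
top_five = impact_scores(stats)[:5]
print(top_five)
```

With these inputs, packaging damage outranks sizing despite half the mentions, because strong negativity and a high margin hit outweigh raw frequency.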

3 Ship fixes

- PDP copy: clarify materials, sizing, compatibility, what is in the box, care or usage tips.

- Visuals: true-to-life images, context shots, scale references, and a short setup or demo video.

- Packaging or BOM: better protection, labeled parts, sturdier components, simplified assembly.

- Bundles: include obvious adapters or accessories to match shopper expectations.

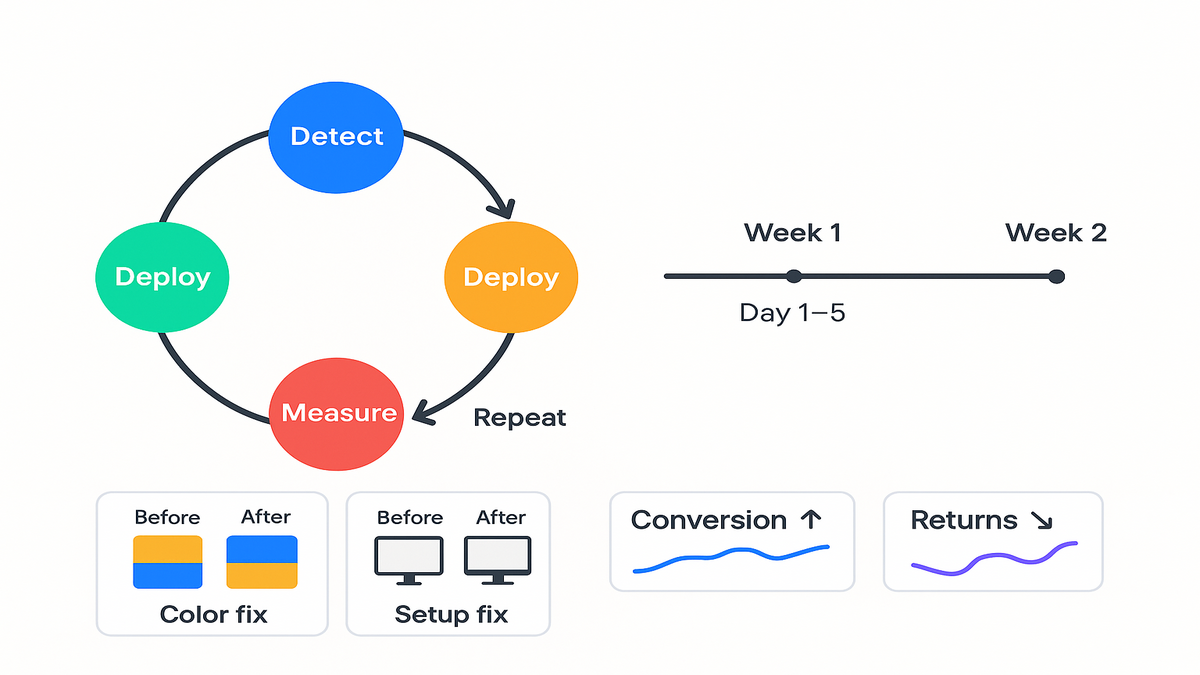

4 Measure for 4 to 6 weeks

- Track conversion rate, return rate, average rating, and the share of 1- to 2-star reviews that mention the top topics.

- Monitor topic drift: top issues should decline and rotate as fixes land.
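A simple way to read movement is to compare the mean of a weekly metric before and after the week a fix ships. The sketch below does that for a 4-week window; the return-rate values are illustrative, and a plain pre/post comparison like this does not control for seasonality or traffic shifts.

```python
from datetime import date
from statistics import mean


def before_after(weekly: dict[date, float], fix_week: date, weeks: int = 4) -> tuple[float, float]:
    """Mean of a weekly metric over `weeks` weeks before and after a fix ships."""
    ordered = sorted(weekly.items())
    before = [value for week, value in ordered if week < fix_week][-weeks:]
    after = [value for week, value in ordered if week >= fix_week][:weeks]
    return mean(before), mean(after)


# Illustrative weekly return rates for one SKU (week start -> rate).
return_rate = {
    date(2024, 1, 1): 0.080,
    date(2024, 1, 8): 0.090,
    date(2024, 1, 15): 0.085,
    date(2024, 1, 22): 0.080,
    date(2024, 1, 29): 0.060,  # fix shipped this week
    date(2024, 2, 5): 0.055,
    date(2024, 2, 12): 0.050,
    date(2024, 2, 19): 0.050,
}
baseline, post_fix = before_after(return_rate, fix_week=date(2024, 1, 29))
print(f"return rate {baseline:.4f} -> {post_fix:.4f}")
```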

Lightweight, notebook-friendly workflow

- Ingest: load reviews and return topics; standardize ASIN or SKU; round timestamps to weekly buckets.

- Features: fast sentiment scoring (e.g., VADER for English, or another lightweight model), simple keyword flags, and per-topic counts.

- Join: aggregate by ASIN and week; compute average sentiment, negative share, and return rate.

- Visualize: scatter-plot average sentiment against return rate to find outliers, and build a table of the highest-impact topics.
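The ingest and join steps can be sketched with the standard library alone. This assumes reviews already carry a sentiment score in [-1, 1] (for example a VADER compound score), treats a score at or below -0.05 as negative (VADER's conventional cutoff, used here as an assumption), and takes weekly order counts from a separate sales source, since return rate needs a denominator. The tuple layouts and function names are hypothetical, not the Data Kiosk schema.

```python
from collections import defaultdict
from datetime import date, datetime, timedelta

NEGATIVE_CUTOFF = -0.05  # assumed: VADER's conventional compound-score cutoff


def week_start(ts: str) -> date:
    """Round an ISO-8601 timestamp down to the Monday of its week."""
    day = datetime.fromisoformat(ts).date()
    return day - timedelta(days=day.weekday())


def weekly_join(reviews, returns, orders):
    """Aggregate reviews and returns per (ASIN, week) and join them.

    reviews: iterable of (asin, timestamp, sentiment score in [-1, 1])
    returns: iterable of (asin, timestamp, return topic)
    orders:  dict of (asin, week_start) -> order count, from a separate sales source
    """
    scores = defaultdict(list)
    for asin, ts, score in reviews:
        # Standardize the ASIN so "b0abc..." and "B0ABC..." land in one bucket.
        scores[(asin.strip().upper(), week_start(ts))].append(score)
    return_counts = defaultdict(int)
    for asin, ts, _topic in returns:
        return_counts[(asin.strip().upper(), week_start(ts))] += 1

    rows = []
    for key in sorted(set(scores) | set(return_counts)):
        week_scores = scores.get(key, [])
        n_orders = orders.get(key, 0)
        rows.append({
            "asin": key[0],
            "week": key[1],
            "avg_sentiment": sum(week_scores) / len(week_scores) if week_scores else None,
            "negative_share": (sum(s <= NEGATIVE_CUTOFF for s in week_scores) / len(week_scores)
                               if week_scores else None),
            "return_rate": return_counts.get(key, 0) / n_orders if n_orders else None,
        })
    return rows


reviews = [
    ("b0abc12345", "2024-03-06T10:00:00", -0.6),
    ("B0ABC12345", "2024-03-07T12:00:00", 0.4),
    ("B0ABC12345", "2024-03-12T09:00:00", 0.8),
]
returns = [("B0ABC12345", "2024-03-08T15:00:00", "sizing")]
orders = {("B0ABC12345", date(2024, 3, 4)): 50, ("B0ABC12345", date(2024, 3, 11)): 40}

for row in weekly_join(reviews, returns, orders):
    print(row)
```

Each row holds the two coordinates for the sentiment-versus-return-rate scatter plot; any plotting library can take it from there.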

Impact scoring tips

- Normalize everything to a common time window and order volume.

- Weight verified-purchase reviews slightly higher than non-verified ones.

- Use bins for margin hit if exact costs are unknown; consistent bins are good enough to rank.
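The verified-purchase weighting and margin bins can be sketched as below. The 1.2x weight and the $5 and $20 cut points are hypothetical placeholders; the article only asks for "slightly higher" weighting and consistent bins.

```python
# Hypothetical weights and cut points; the article only says "slightly higher"
# for verified reviews and "consistent bins" for margin hit.
VERIFIED_WEIGHT = 1.2
UNVERIFIED_WEIGHT = 1.0
MARGIN_CUTS = [(5.0, "low"), (20.0, "medium")]  # dollars at risk per occurrence


def weighted_sentiment(reviews: list[tuple[float, bool]]) -> float:
    """Weighted mean sentiment; each review is (score, is_verified_purchase)."""
    if not reviews:
        raise ValueError("need at least one review")
    total = weight_sum = 0.0
    for score, verified in reviews:
        weight = VERIFIED_WEIGHT if verified else UNVERIFIED_WEIGHT
        total += weight * score
        weight_sum += weight
    return total / weight_sum


def margin_bin(dollars_at_risk: float) -> str:
    """Map an approximate dollar hit to a fixed bin so topics rank consistently."""
    for upper_bound, label in MARGIN_CUTS:
        if dollars_at_risk < upper_bound:
            return label
    return "high"


print(weighted_sentiment([(-0.8, True), (0.6, False)]))
print(margin_bin(3.0), margin_bin(12.5), margin_bin(40.0))
```

In the first call the weighted mean (about -0.16) sits below the plain mean of -0.1 because the negative review is the verified one.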

Guardrails

- Sampling bias: reviews skew toward extremes; balance them with rolling medians and verified-purchase weighting.

- Reason code ambiguity: confirm codes against real text snippets, and avoid over-interpreting broad codes such as "not as described".

- Language handling: detect language and route to appropriate lexicons or models; keep an unknown bucket rather than forcing poor translations.

- Privacy: aggregate at the SKU or category level and by week; avoid user-level data and scrub PII from snippets.
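A trailing rolling median is one way to damp the extreme-review skew mentioned above: a single spiky week moves a median far less than it moves a mean. The 3-week window and the negative-share series are illustrative assumptions.

```python
from statistics import median


def rolling_median(values: list[float], window: int = 4) -> list[float]:
    """Trailing rolling median; the first points use whatever history exists."""
    return [median(values[max(0, i - window + 1): i + 1]) for i in range(len(values))]


# Illustrative weekly negative-review share with a one-week spike.
negative_share = [0.10, 0.12, 0.45, 0.11, 0.13]
print(rolling_median(negative_share, window=3))
```

The 0.45 spike never appears in the smoothed series, so a single burst of angry reviews does not reorder your priorities on its own.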

One-week plan

- Day 1: Load data, standardize IDs and timestamps, choose a 6- to 12-week lookback, and define weekly buckets.

- Day 2: Run sentiment scoring and keyword flags, build a first topic map, and sanity-check it against 5 known SKUs.

- Day 3: Add margin hit bins, compute impact scores, and select the top 5 issues.

- Day 4: Draft PDP copy changes, request new images or video, open packaging tickets, and assign owners.

- Day 5: Ship easy wins (copy and images), schedule packaging changes, and set baselines and a simple weekly report.

- Week 2: Review movement, scale successful fixes to sibling SKUs, and refine misses.

Example fixes with fast payback

- Color mismatch: replace studio-only photos with daylight shots and add a note on finish or fabric variation. Expect fewer "not as described" returns on these items.

- Install confusion: add a 45-second setup video linked by QR code, plus a checklist card. Expect fewer returns labeled "could not install".

- Sizing issues: add a size chart with exact measurements and state clearly whether the item runs small or large. This should reduce fit-related returns.

- Fragile packaging: add corner protection and a "do not cut deep" message on the box. This should reduce items damaged on arrival.

- Compatibility gaps: place a bold compatibility matrix near the buy box. This upfront guidance helps prevent returns for missing or wrong accessories.

Metrics to track

- Conversion rate by SKU, and by traffic source if available.

- Weekly return rate by topic cluster and SKU.

- Review mix: share of 1- to 2-star reviews that mention your top issues.

- Time to impact: watch leading indicators within 2 weeks for copy and image changes. Packaging changes may lag longer, so set expectations with your team.

Bottom line

You do not need heavy ML for this work. A tight loop of detect, decide, deploy, measure, and repeat is enough to turn customerReviews and customerReturnTopics into fewer returns, stronger PDPs, and more revenue.