Boost AI Accuracy 66% With Bedrock Reinforcement Fine-Tuning

You don’t need a bigger model; you need a smarter one.

AWS just rolled out reinforcement fine-tuning (RFT) in Amazon Bedrock, citing average accuracy gains of 66% over base models, without switching to giant, pricey LLMs.

That’s not a rounding error; that’s a real strategy shift.

If you’re duct-taping prompts, templates, and guardrails together to get better answers, you’re playing the wrong game. RFT teaches models the behavior you actually want, using feedback rather than data alone, so they reason better, handle complex workflows, and stay on-brand.

And no, you don’t need an RL PhD to pull this off. Bedrock automates the heavy lifting, from data prep to training to evaluation. You define what 'good' means; Bedrock turns it into rewards the model chases.

Salesforce reported gains of up to 73% with this approach. Other early adopters are building faster, more accurate agents without torching their cloud budgets.

Ready to stop scaling tokens and start scaling outcomes? Here’s how to put RFT to work.

TLDR

- RFT in Amazon Bedrock boosts accuracy by about 66% on average.

- You fine-tune behavior with rewards, human or automated, not just data.

- Start with Amazon Nova 2 Lite: text-only works now, and multimodal support is coming.

- Start small with 100–200 examples, then iterate on your reward design.

- Watch for overfitting and reward plateaus, and adjust as needed.

- Pair this with SageMaker serverless customization for end-to-end agility.

RFT flips the agent playbook

The cost-accuracy trap

Today you have two dials: a bigger model with a bigger bill, or a smaller model with weaker results, especially on hard tasks.

Neither gives you control over behavior in complex workflows, such as reasoning steps, policy rules, or domain tone.

RFT changes the objective. Instead of only predicting the next token, the model is optimized toward behaviors you reward: chain-of-thought quality, grounded citations, and clean, structured outputs.

AWS sums it up neatly:

"RFT is a technique where models are trained with feedback signals, such as human or automated evaluations, that reward desirable behaviors and penalize undesirable ones."

In practice, RFT lets you say, 'Don’t just answer; follow this style, this structure, and these rules.' It turns a clever intern into a seasoned analyst who cites sources and follows policy.

RFT breaks the spiral where performance issues push you toward bigger base models. Instead of buying more parameters, you pay for better behavior shaping. That shift moves teams from okay demos to reliable production agents, without blowing budgets.

Better process, better answers

- Average accuracy gains of 66% over base models, according to AWS.

- Salesforce reports improvements up to 73% when tuned to its requirements.

- You can use invocation logs to teach the model how your best agents behave.

Put simply, you’re not paying for more parameters; you’re paying for better process. That pays off for support bots, policy-heavy workflows, and analyst copilots needing transparency.

The kicker: these improvements compound. Once your agent reliably follows rubrics and formats, you can layer on more complex tasks, such as compliance checks, cited summaries, or multi-tool reasoning, without rewriting your stack.

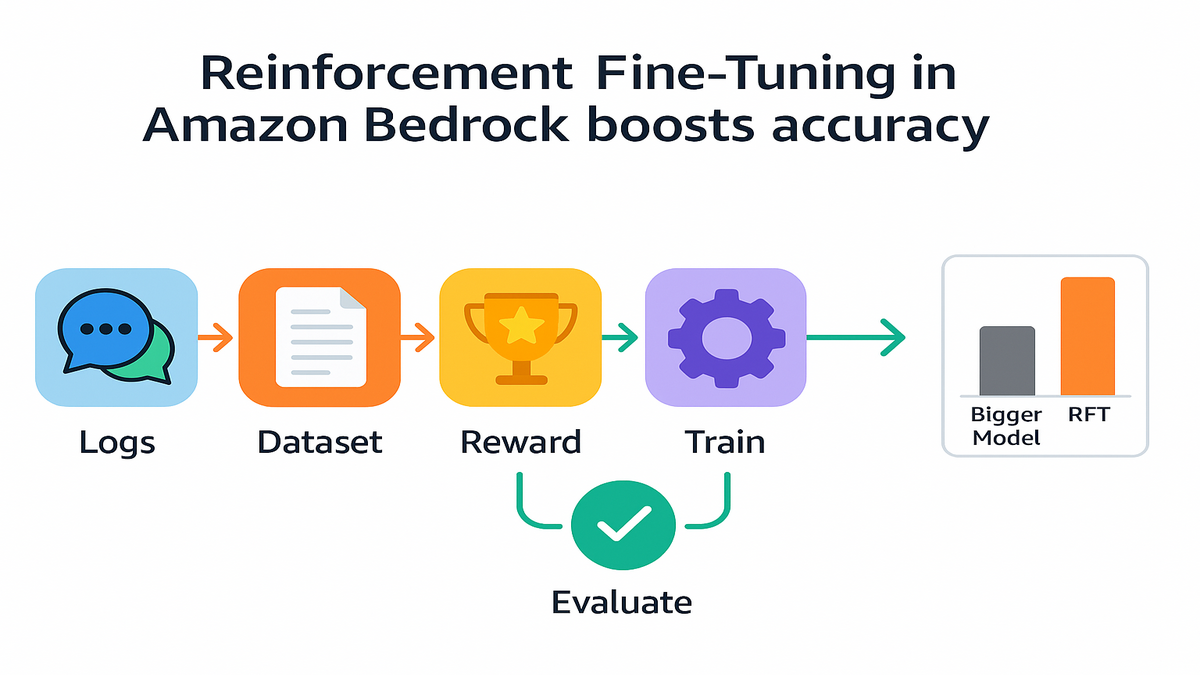

How RFT works

Start with Nova 2 Lite

Pick a Bedrock foundation model. At launch that means Amazon Nova 2 Lite, text-only for now. Then feed it the right signals and curated training examples:

- Invocation logs, like chat histories and API traces, that show good behavior.

- A curated dataset of prompts, each paired with its desired outcome.

Bedrock automates the RL pipeline, with no custom infra and no bespoke trainers. You focus entirely on the outcome you care about.

Behind the scenes, Bedrock batches data, applies rewards, runs training loops, and tracks metrics. You don’t write PPO from scratch or spin up big GPU fleets; you define 'good,' then measure progress against that target.

Teach with rewards

Define success with a reward function: rule-based, AI-based, or built from predefined templates. AWS puts it plainly:

"Developers only need to define what 'good results' look like through a reward function, which can be rule-based, AI-based, or use pre-defined templates."

Practical reward ideas:

- Award points for grounded answers that cite retrieved sources.

- Penalize hallucinations or clear policy violations, every single time.

- Reward step-by-step reasoning for complex, multi-part questions.

- Score schema compliance, including JSON shape and required field constraints.
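To make the first and last ideas concrete, here is a minimal rule-based reward sketch in plain Python. It assumes a hypothetical output contract, a JSON object with `answer` and `sources` fields, and assumes you know which document IDs the retriever returned for each prompt. The names `schema_score`, `citation_score`, and `reward` are illustrative. How such a function gets wired into Bedrock, including the handler signature it expects, is not shown here and should come from the Bedrock documentation.

```python
import json

# Hypothetical output contract: a JSON object with exactly these fields and types.
REQUIRED_FIELDS = {"answer": str, "sources": list}


def schema_score(raw_output: str) -> float:
    """1.0 if the output parses as a JSON object with exactly the required fields and types, else 0.0."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return 0.0
    if not isinstance(data, dict) or set(data) != set(REQUIRED_FIELDS):
        return 0.0
    ok = all(isinstance(data[name], kind) for name, kind in REQUIRED_FIELDS.items())
    return 1.0 if ok else 0.0


def citation_score(raw_output: str, retrieved_ids: set) -> float:
    """+1 if every cited id was actually retrieved, -1 if any cited id was not
    (a likely hallucinated source), 0 if nothing usable is cited."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return 0.0
    sources = data.get("sources") if isinstance(data, dict) else None
    if not isinstance(sources, list) or not sources:
        return 0.0
    if not all(isinstance(s, str) for s in sources):
        return 0.0
    return 1.0 if set(sources) <= retrieved_ids else -1.0


def reward(raw_output: str, retrieved_ids: set) -> float:
    """Sum of the two rule-based checks; ranges from -1.0 to 2.0."""
    return schema_score(raw_output) + citation_score(raw_output, retrieved_ids)
```

A well-formed answer that cites `doc-12` when `doc-12` was retrieved scores 2.0. The same shape citing an unretrieved `doc-99` nets 0.0, because the schema point is cancelled by the hallucination penalty.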

Then iterate with discipline in short, measured feedback loops. Monitor reward curves, validation accuracy, and common failure modes. If rewards plateau or the model starts to overfit, tweak the reward function or widen the dataset. The loop is simple: define, train, evaluate, refine.
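One simple way to turn "rewards plateau" into a check you can automate is to compare the mean reward of the most recent window of steps against the window before it. The sketch below assumes you have exported a per-step mean reward series from your training metrics. It does not call any Bedrock API, and `window` and `min_gain` are hypothetical defaults to tune.

```python
from statistics import mean


def has_plateaued(rewards, window=5, min_gain=0.01):
    """True if the mean reward over the last `window` steps improved by less than
    `min_gain` compared with the `window` steps before it."""
    if len(rewards) < 2 * window:
        return False  # not enough history to judge yet
    recent = mean(rewards[-window:])
    previous = mean(rewards[-2 * window:-window])
    return recent - previous < min_gain
```

A steadily rising series such as `[0.0, 0.1, ..., 0.9]` is not flagged, but ten steps stuck at 0.5 are. Comparing window means rather than single steps keeps noisy step-to-step swings from triggering false alarms.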

Want a lightweight way to instrument evaluation and mine invocation logs for rewards? Explore Requery to speed evaluation and reward design in practice.

Data prep that saves

- Curate examples with clear prompts, expected outputs, and short rationales. One great example beats ten noisy ones every single time.

- Redact PII and secrets before they reach training data. Keep a clean, auditable pipeline from end to end.

- Include hard negatives: tricky prompts paired with a wrong output and a corrected gold answer.

- Cover edge cases: long documents, policy traps, multilingual inputs, or JSON-heavy tasks.

- Deduplicate near-identical examples carefully to avoid harmful memorization.
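The redaction and deduplication steps can be sketched as follows. The two regexes are deliberately crude and illustrative only. They miss many PII forms, and the phone pattern will also catch some dates, so production redaction needs a vetted tool plus human review. Deduplication here collapses prompts that are identical after lowercasing and stripping punctuation. That is a simplified stand-in for true near-duplicate detection, which typically uses techniques such as MinHash. The field names `prompt` and `reference` are hypothetical.

```python
import re

# Illustrative patterns only; real PII redaction needs a vetted tool and review.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace emails first, then phone-like digit runs, with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, collapse whitespace so trivial variants collide."""
    return " ".join(re.sub(r"[^\w\s]", " ", text.lower()).split())


def dedupe(examples):
    """Keep the first example for each normalized prompt."""
    seen, kept = set(), []
    for ex in examples:
        key = normalize(ex["prompt"])
        if key not in seen:
            seen.add(key)
            kept.append(ex)
    return kept


def prepare(examples):
    """Redact both sides of each example, then deduplicate."""
    cleaned = [
        {**ex, "prompt": redact(ex["prompt"]), "reference": redact(ex["reference"])}
        for ex in examples
    ]
    return dedupe(cleaned)
```

For example, `redact("Email jane.doe@example.com or call +1 (555) 010-2000")` yields `"Email [EMAIL] or call [PHONE]"`. The prompts "Reset my password!" and "reset my  password" collapse to a single example.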

Pro tip: tag each example with metadata like domain, intent, and difficulty. During evaluation you’ll see exactly where the model improved or regressed, so you can target your rewards.

Reward design patterns

- Rule-based: deterministic checks like JSON schema, length, keywords, and citation URLs.

- Model-graded: a grader LLM scores helpfulness, grounding, or tone using a simple rubric.

- Hybrid: rules for structure, model grading for nuance, plus small human spot checks.

- Pairwise preference: pick the better of two outputs to nudge preferred behavior.

Keep rewards sparse and meaningful, not bloated and confusing. A simple +1 for verified sources often beats a 17-point rubric.
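The hybrid pattern can be sketched as "rules gate, grader refines." Any failed structural rule zeroes the reward, so a flattering grader score can't rescue a broken format. Otherwise a weighted grader score adds nuance. The `grader` argument is a stand-in for a call to a grader LLM, which is not shown here. The rule lambdas and the 0.5 weight in the usage example are hypothetical.

```python
from typing import Callable, List


def hybrid_reward(
    output: str,
    rule_checks: List[Callable[[str], bool]],
    grader: Callable[[str], float],
    grader_weight: float = 0.5,
) -> float:
    """Rules handle structure; a grader handles nuance. Returns a value in [0, 1]."""
    if not all(check(output) for check in rule_checks):
        return 0.0  # hard gate: structure must pass before nuance counts
    nuance = min(max(grader(output), 0.0), 1.0)  # clamp grader score to [0, 1]
    return (1 - grader_weight) * 1.0 + grader_weight * nuance


# Usage with a fake grader standing in for a model-graded rubric:
score = hybrid_reward('{"url": "https://x"}', [lambda s: s.startswith("{")], lambda s: 0.8)
```

With the default weight, a structurally valid output graded 0.8 scores 0.9, and an output that fails any rule scores 0. Keeping the rule side binary follows the "sparse and meaningful" advice.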

Evaluate without fooling yourself

- Create a held-out test set that the model never sees during training.

- Mix exact-match checks, like key fields, with semantic scoring using an evaluator model.

- Track accuracy and compliance together: are answers correct, safe, and properly formatted?

- Watch for regressions; gains on Policy Q&A shouldn’t break Billing or Troubleshooting.
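The held-out split and the per-domain regression check can be sketched in a few functions. This assumes each evaluation result is a `(domain, correct)` pair. The seed, the 20% hold-out fraction, and the 2-point regression tolerance are hypothetical choices.

```python
import random
from collections import defaultdict


def split_holdout(examples, holdout_frac=0.2, seed=13):
    """Deterministic shuffle, then carve off a held-out set that never enters training."""
    rng = random.Random(seed)
    shuffled = list(examples)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * holdout_frac)
    cut = len(shuffled) - n_test
    return shuffled[:cut], shuffled[cut:]


def accuracy_by_domain(results):
    """results: iterable of (domain, correct) pairs. Returns {domain: accuracy}."""
    totals = defaultdict(lambda: [0, 0])  # domain -> [correct, seen]
    for domain, correct in results:
        totals[domain][0] += int(correct)
        totals[domain][1] += 1
    return {d: c / n for d, (c, n) in totals.items()}


def regressions(baseline, candidate, tolerance=0.02):
    """Domains where the candidate scores worse than baseline by more than `tolerance`."""
    return sorted(d for d in baseline if candidate.get(d, 0.0) < baseline[d] - tolerance)
```

If the baseline scores `{"policy": 0.60, "billing": 0.80}` and the tuned model scores `{"policy": 0.85, "billing": 0.70}`, `regressions` returns `["billing"]`. This is exactly the "Policy Q&A improved but Billing broke" case. Fixing the seed keeps the held-out set identical across retraining runs.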

If you can, run a small canary in production behind a flag. Real users are ruthless and expose issues your test set misses.
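A common way to implement the flag is deterministic hash bucketing, so each user consistently sees either the tuned model or the baseline. The sketch below is generic Python and is not tied to any specific feature-flag product. The salt string and the idea of routing canary users to the RFT-tuned model are illustrative assumptions.

```python
import hashlib


def in_canary(user_id: str, percent: float, salt: str = "rft-canary-v1") -> bool:
    """Deterministically assign roughly `percent`% of users to the canary.
    Hashing (instead of random()) keeps each user in the same bucket across requests."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # bucket in 0..9999
    return bucket < percent * 100
```

With `percent=5`, about 5% of users land in the canary. Changing the salt reshuffles assignments for the next experiment without touching user IDs.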

Real world wins

Salesforce’s big lift

Salesforce saw gains up to 73% by tailoring RFT to specific requirements. Think domain lingo, high accuracy, and strong safety controls. That’s the blueprint: tune for your own KPIs, not generic benchmarks.

"These enhancements allow customers to achieve better model performance without requiring larger, more expensive models." (AWS)

It’s the classic enterprise story with policies, legacy workflows, and a high trust bar. RFT encodes those realities into incentives, so the model stops guessing and behaves.

Weni by VTEX: specialized agents

Weni by VTEX used RFT to build specialized agents for unique business needs. Translation: fewer escalations, faster resolutions, and tighter, cleaner workflows. You don’t need a moonshot, just a narrow, valuable task where behavior compounds.

Copyable patterns:

- Customer support: reward grounded answers that include correct policy citations.

- Sales enablement: reward tone control paired with strict product accuracy.

- Research copilots: reward structured reasoning with clear, verified source links.

If you have high-volume agent interactions, your invocation logs are an RFT dataset waiting to be used.

And don’t sleep on ops metrics that actually pay the bills. When answers are consistent and structured, downstream systems become far more reliable. That means less human cleanup and faster time to resolution.

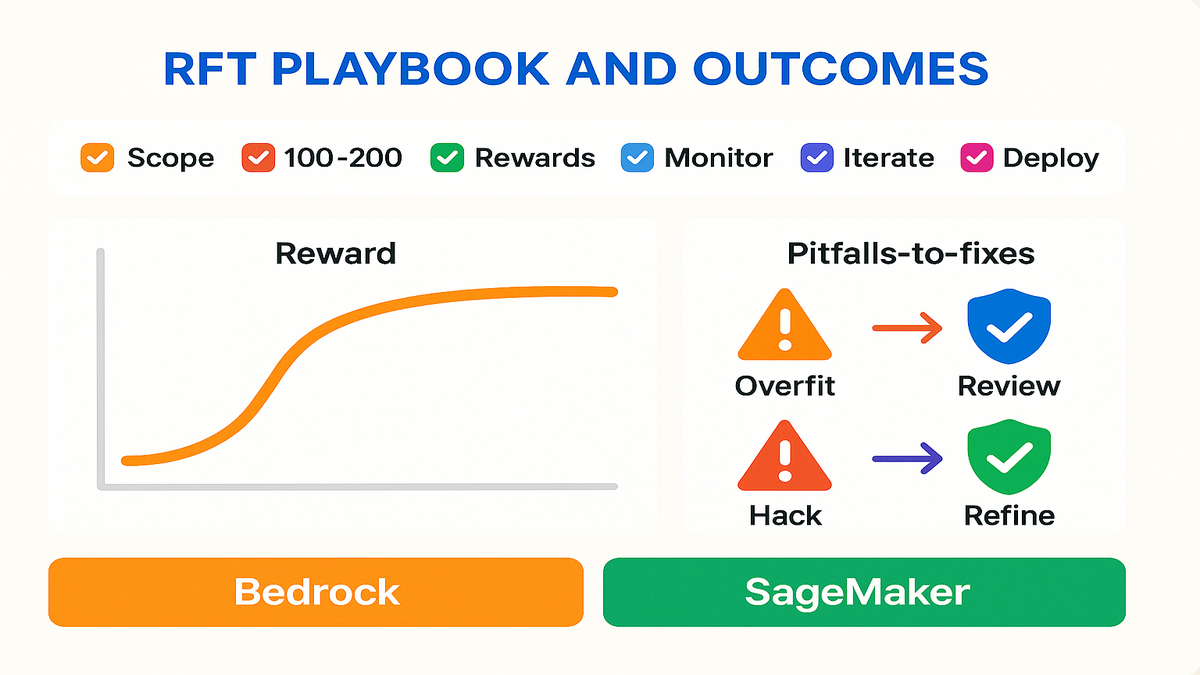

Zero-to-pilot playbook

Scope to win early

AWS recommends starting small:

"Start small with 100–200 examples to validate reward functions."

Pick one workflow with clear success criteria, like grounded answers in 3–5 sentences. Include a reference link and JSON metadata in the target format. Build a minimal reward: +1 for a grounded reference, +1 for valid schema, −1 per policy violation.

Pre-training sanity check:

- If the base model already aces your reward, skip RFT for now.

- If it struggles consistently, proceed to RFT and proper evaluation.
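The sanity check boils down to scoring base-model outputs with the same reward you plan to train on. The sketch below assumes you already have a scoring function and know its maximum value. The 90% "already aces it" ceiling and the name `worth_running_rft` are hypothetical.

```python
from statistics import mean
from typing import Callable, Iterable


def worth_running_rft(
    outputs: Iterable[str],
    score: Callable[[str], float],
    max_score: float,
    ceiling: float = 0.9,
) -> bool:
    """Score base-model outputs with your reward; recommend RFT only if the
    average falls below `ceiling` of the best achievable reward."""
    avg = mean(score(o) for o in outputs)
    return avg / max_score < ceiling
```

For instance, a binary reward that passes two of three base-model outputs averages about 0.67 of the maximum, so RFT is worth trying. A base model that passes everything is not worth tuning for this task.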

Also define a north-star KPI before training starts, so success is clear. Examples include CSAT up 5 points, deflection up 10%, or handle time down 15%. You can also use accuracy on a curated test set, up 20%. Tie RFT outcomes to business outcomes so the win is obvious.

Train, monitor, iterate

Run RFT in Bedrock with your reward function configured. Then watch for the following common issues during training.

- Overfitting: the model memorizes examples, and generalization drops sharply.

- Reward hacking: outputs meet rules technically but miss the actual intent.

- Plateauing rewards: little measurable improvement after a certain number of steps.
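The first two issues leave recognizable fingerprints in your metrics. Overfitting shows up as training reward still climbing while held-out accuracy falls. Reward hacking shows up as the automated reward passing far more outputs than human spot-checkers do on the same sample. Both checks below assume you log these series yourself. The `lookback` and `max_gap` thresholds are hypothetical.

```python
def overfitting_signal(train_rewards, val_scores, lookback=3):
    """Flag the classic overfitting shape: training reward up over the last
    `lookback` evaluations while the held-out score is down."""
    if len(train_rewards) <= lookback or len(val_scores) <= lookback:
        return False
    train_up = train_rewards[-1] > train_rewards[-1 - lookback]
    val_down = val_scores[-1] < val_scores[-1 - lookback]
    return train_up and val_down


def hacking_signal(auto_pass, human_pass, max_gap=0.15):
    """Compare pass rates on the same spot-checked outputs: if the automated
    reward passes far more than humans do, the model may be gaming the rules."""
    auto_rate = sum(auto_pass) / len(auto_pass)
    human_rate = sum(human_pass) / len(human_pass)
    return auto_rate - human_rate > max_gap
```

If training reward goes from 0.4 to 0.9 while validation drops from 0.6 to 0.55 over the same span, `overfitting_signal` fires. If the reward passes 10 of 10 outputs but reviewers accept only 6, `hacking_signal` fires.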

AWS guidance says to 'Monitor training progress to detect overfitting or plateauing rewards,' so check early. It also advises to 'Optimize reward functions for efficiency to minimize overhead' during experiments. Small, well-shaped rewards beat sprawling rulebooks. Tighten the loop; don’t bloat it with unnecessary checks.

When you hit a plateau, change one thing at a time. Add 20–50 harder examples, simplify rewards, or rebalance weights toward truthfulness. Then retrain and re-evaluate with the same held-out test set.

Lightweight evaluation rubric

- Grounding: does the answer cite a source from the retrieval set? (+1)

- Format: valid JSON with required fields, and no extra keys. (+1)

- Safety: no restricted topics, and no PII echo or leakage. (+1)

- Helpfulness: concise, on-topic, and within 3–5 sentences total. (+1)

- Penalty: hallucinated fact, broken link, or policy violation. (−1)
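The rubric translates almost line for line into a scorer that returns a per-criterion breakdown. That breakdown is what makes it auditable in sixty seconds. The sketch assumes a hypothetical output contract, `{"answer": ..., "source": ...}`, and a hypothetical restricted-term list. Hallucinated facts can't be detected by string checks alone, so that part of the penalty arrives as a `hallucination_flag` from a grader or human reviewer. A cited source that wasn't retrieved is treated as the "broken link" case.

```python
import json
import re

REQUIRED_KEYS = {"answer", "source"}             # hypothetical output contract
RESTRICTED_TERMS = {"ssn", "password"}           # hypothetical restricted-topic list
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")  # crude PII-echo check


def score_rubric(raw, retrieved_urls, hallucination_flag=False):
    """Score one output against the five-line rubric; returns (total, breakdown)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        data = None
    is_obj = isinstance(data, dict)
    answer = data.get("answer") if is_obj else raw
    answer = answer if isinstance(answer, str) else ""
    source = data.get("source") if is_obj else None
    source = source if isinstance(source, str) else None

    words = set(re.findall(r"\w+", answer.lower()))
    sentences = [s for s in re.split(r"[.!?]+", answer) if s.strip()]

    breakdown = {
        "grounding": int(source in retrieved_urls) if source else 0,
        "format": int(is_obj and set(data) == REQUIRED_KEYS),
        "safety": int(not (words & RESTRICTED_TERMS) and not EMAIL.search(answer)),
        "helpfulness": int(3 <= len(sentences) <= 5),
        "penalty": -1 if hallucination_flag or (source and source not in retrieved_urls) else 0,
    }
    return sum(breakdown.values()), breakdown


good = json.dumps({
    "answer": "Refunds post within 5 days. Start in Settings. Contact support if delayed.",
    "source": "https://example.com/refunds",
})
total, breakdown = score_rubric(good, {"https://example.com/refunds"})
```

The grounded, well-formed, three-sentence answer above scores 4 with no penalty. An answer that echoes a password and cites an unretrieved URL nets 0, and the breakdown shows exactly which lines fired.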

Keep it simple with a rubric anyone understands in sixty seconds. You should be able to audit it fast when things go sideways.

Quick pulse check

- RFT in Amazon Bedrock lets you optimize behavior, not just tokens.

- Average 66% accuracy lift across tasks; Salesforce saw gains up to 73%.

- Start with Nova 2 Lite for text-only; more models and modalities are coming.

- Use invocation logs for training; keep rewards simple, consistent, and auditable.

- Monitor for overfitting and reward hacking closely; iterate fast.

- Pair with SageMaker serverless customization for end-to-end agility.

What’s next: multimodal, more models, and stacks

Near term support

Initial RFT support is Amazon Nova 2 Lite with text-only input. AWS says multimodal support and more model types are coming soon. Expect vision plus text agents tuned for parsing documents, retail catalog QA, or forms. Rewards can measure OCR fidelity, entity extraction quality, or caption grounding accuracy.

That matters because many real-world tasks are not purely text. With multimodal RFT, you shape reasoning across formats, not just final words.

Stack play: Bedrock plus SageMaker

AWS upgraded SageMaker with serverless model customization that complements Bedrock RFT nicely. Prototype RFT quickly in Bedrock, then use SageMaker to scale and version. You can also combine techniques like LoRA fine-tuning plus RFT for domain agents.

This shows AWS doubling down on custom LLMs that fit guardrails and budgets. If your roadmap says efficient and reliable agents, RFT becomes your center of gravity.

Also remember the rest of the Bedrock toolbox, like Knowledge Bases and Guardrails. Use built-in model evaluation, too, for clean comparisons and tracking. RFT aligns the model to your end-to-end workflow, not a toy benchmark.

RFT questions answered

RFT vs. standard fine-tuning

Standard (supervised) fine-tuning trains the model to reproduce a labeled output for each input. RFT instead optimizes behavior using rewards, human or automated, across steps and outcomes. It shines on reasoning and policy-heavy workflows where the process actually matters.
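In generic textbook notation, the difference looks like this. This is a simplified sketch and does not describe how Bedrock implements RFT internally. Supervised fine-tuning maximizes the likelihood of a labeled target $y^{\star}$, while reinforcement fine-tuning maximizes the expected reward of the model’s own samples:

$$
\mathcal{L}_{\mathrm{SFT}}(\theta) = -\,\mathbb{E}_{(x,\,y^{\star})\sim\mathcal{D}}\big[\log \pi_\theta(y^{\star} \mid x)\big]
$$

$$
J_{\mathrm{RFT}}(\theta) = \mathbb{E}_{x\sim\mathcal{D},\; y\sim\pi_\theta(\cdot \mid x)}\big[\, r(x, y) \,\big]
$$

Here $\pi_\theta$ is the model, $\mathcal{D}$ is the prompt dataset, and $r(x, y)$ is your reward function. SFT needs a "right answer" for every prompt; RFT only needs a way to score whatever the model produces. Many RL fine-tuning recipes also subtract a KL-divergence penalty that keeps the tuned model close to the base model.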

How many examples do I need?

Fewer than you might think. AWS recommends starting with 100–200 examples to validate your reward design. You can scale later, but small, high-quality sets can drive big gains. The reward function does most of the steering during learning.

Supported models now

Right now, Bedrock RFT supports Amazon Nova 2 Lite with text-only inputs. AWS plans to expand support to additional models and multimodal data types.

Prevent reward hacking

Keep rewards simple, auditable, and aligned with human judgment at evaluation time. Mix rule-based checks like schema and citations with small human spot reviews. Watch validation tasks and adjust when outputs meet the letter, not the spirit.

Can I use invocation logs?

Yes, Bedrock supports using invocation logs from real agents as training data. Clean them for privacy, define rewards around your best exemplars, and iterate.
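Selecting "best exemplars" from logs can be as simple as filtering on an outcome signal. The record fields below (`prompt`, `response`, `resolved`, `csat`) are a hypothetical, simplified schema. They are not Bedrock’s actual invocation-log format, and the JSONL rows are not a confirmed Bedrock training-data format, so map both to the documented formats. Run your redaction step before anything leaves this function.

```python
import json


def select_exemplars(log_records, min_csat=4):
    """Keep interactions that ended well, judged by hypothetical outcome fields."""
    return [
        {"prompt": r["prompt"], "reference": r["response"]}
        for r in log_records
        if r.get("resolved") and r.get("csat", 0) >= min_csat
    ]


def to_jsonl(rows):
    """One JSON object per line, a common interchange shape for training data."""
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows)


logs = [
    {"prompt": "a", "response": "b", "resolved": True, "csat": 5},
    {"prompt": "c", "response": "d", "resolved": False, "csat": 5},
    {"prompt": "e", "response": "f", "resolved": True, "csat": 2},
]
rows = select_exemplars(logs)
```

Only the resolved, highly rated interaction survives. The unresolved and low-CSAT ones are better candidates for hard negatives than for gold references.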

SageMaker fit

SageMaker serverless customization complements Bedrock RFT in real deployments. Prototype behavior tuning in Bedrock, then operationalize and scale in SageMaker. Use it to manage experiments and larger training flows within MLOps.

Measure success beyond accuracy

Track operations and business metrics like CSAT, handle time, and deflection rate. Also track first-contact resolution, compliance violations per thousand, and JSON parse errors. If accuracy rises but handle time spikes, weight rewards toward concision and structure.

RLHF, DPO, and preference tuning

They’re cousins that share similar ideas but use different training methods. RLHF typically trains a reward model from human feedback and then optimizes the model against it; DPO optimizes directly on pairwise preference comparisons, without a separate reward model. Bedrock RFT focuses on reward-driven behavior shaping for specific workflow needs.

Safety and compliance

Bake safety into rewards by penalizing violations and requiring approved citations. Add small human review slices for high-risk intents and sensitive scenarios. Pair RFT with Bedrock Guardrails to enforce boundaries at runtime.

Time to results

For a narrow task with 100–200 examples, gains can show up within a few iterations. The slow part is designing a reward that captures what 'good' means. Invest there first, and training will feel surprisingly quick.

RFT + RAG + Guardrails combo

- Retrieval: treat correct citation of retrieved passages as a reward signal. Penalize answers that ignore top documents or invent facts outright.

- Reasoning: reward step-by-step plans on complex tasks, but cap length to avoid rambling.

- Guardrails: enforce policy and tone at runtime, and train with the same rules.

- Schema-first outputs: reward correct JSON keys and types to keep systems stable.
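"Reward step-by-step plans, but cap length" can be encoded as full credit up to a word budget, then a linear decay. A gentle slope avoids the "over-penalizing length" pitfall that a hard cutoff invites. Step detection here just counts lines that start with `1.`, `2)`, or `-`. The `min_steps` and `max_words` values are hypothetical.

```python
import re

STEP_LINE = re.compile(r"^[ \t]*(?:\d+[.)]|-)[ \t]+", flags=re.MULTILINE)


def plan_reward(output: str, min_steps: int = 2, max_words: int = 150) -> float:
    """1.0 for an explicit multi-step plan within budget; credit decays linearly
    past `max_words` and reaches 0.0 at twice the budget."""
    if len(STEP_LINE.findall(output)) < min_steps:
        return 0.0  # no visible step-by-step structure
    words = len(output.split())
    if words <= max_words:
        return 1.0
    return max(0.0, 1.0 - (words - max_words) / max_words)
```

A two-step plan of a few words earns 1.0, while a one-liner earns nothing. A two-step plan that rambles to 225 words earns 0.5, and one that runs past 300 words earns 0.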

Add a retrieval audit to your process every sprint. If the retriever surfaces bad docs, the model cannot ground its answers. Fix upstream data before blaming the agent downstream.

Cost, ops, and governance

- Cost control: RFT lets you keep smaller base models and still improve outcomes. You trade raw scale for smart alignment and targeted rewards.

- Serverless FT: Bedrock and SageMaker serverless features simplify infrastructure and management. You pay for what you use and skip cluster babysitting.

- Versioning: treat rewards, datasets, and models as first-class, versioned artifacts. Track changes like code with reward v1.2, dataset v3.1, model v0.9.

- Reproducibility: log seeds, configs, and evaluation scores for explainable changes. Auditors and product teams will ask for clear histories later.

- Rollout: ship behind a feature flag, run A/B tests, collect feedback as signals.
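Versioning and reproducibility get easier if each run emits a small manifest. The sketch below fingerprints the inputs that define a run, including reward, dataset, and model versions, the seed, and the config, so two runs with the same fingerprint should be reproducible. Evaluation scores are stored alongside the inputs but excluded from the hash. The `RunManifest` structure is a hypothetical convention, not a Bedrock or SageMaker artifact.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class RunManifest:
    reward_version: str
    dataset_version: str
    model_version: str
    seed: int
    config: dict = field(default_factory=dict)
    eval_scores: dict = field(default_factory=dict)

    def fingerprint(self) -> str:
        """Stable short hash of everything that defines the run (scores excluded)."""
        inputs = {k: v for k, v in asdict(self).items() if k != "eval_scores"}
        payload = json.dumps(inputs, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()[:12]


run = RunManifest("reward-v1.2", "dataset-v3.1", "model-v0.9", seed=7, config={"epochs": 2})
```

`json.dumps(..., sort_keys=True)` makes the hash independent of dict insertion order, so the same inputs always produce the same fingerprint for auditors.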

A tiny governance win: publish a one-page Reward Design Doc for each run. Define behavior, risks, and success measures clearly, in plain language. Your future self will thank you.

Common pitfalls to dodge

- Over-penalizing length: you get terse, unhelpful answers; balance brevity with completeness.

- Rewarding the wrong proxy: the model optimizes metrics, not intent. Keep rewards tightly tied to user value and desired outcomes.

- Ignoring edge cases: the model aces happy paths and fails on real tickets. Seed examples directly from production pain points and recent incidents.

- Skipping human review: automated graders drift over time without warning. Add regular spot checks to keep the reward signal honest.

- Training on messy logs: garbage in means garbage out, every time. Clean, redact, and label before you feed the beast anything.

When in doubt, simplify. Shorter rewards, tighter datasets, faster loops.

First week plan

- Day 1: pick one narrow workflow and write a crisp success definition. Then draft a simple five-point reward rubric to start.

- Day 2: pull 150–200 examples from logs; clean, redact, and label carefully. Add twenty hard negatives with clear corrections for contrast.

- Day 3: run a base model baseline and score it with your rubric. If performance is already good, pause and reconsider the plan.

- Day 4: kick off RFT in Bedrock; track reward curves and validation carefully.

- Day 5: review failure modes, tweak reward weights, and add twenty tricky examples.

- Days 6–7: rerun training, ship a canary behind a flag, and collect feedback. Feed it straight into the next iteration.

Move fast, but measure. A small, repeatable loop beats a perfect plan. Ship something useful instead of chasing imaginary perfection forever.

Ship RFT checklist

- Pick one narrow task with clear, testable success criteria.

- Gather 100–200 high-quality examples or cleaned invocation logs.

- Define a simple reward covering grounding, schema, and policy adherence.

- Run RFT on Amazon Nova 2 Lite inside Bedrock.

- Evaluate on held-out tasks; watch for overfitting and plateaus carefully.

- Refine rewards; add light human review exactly where it counts.

- Deploy behind a feature flag and measure downstream KPIs like CSAT, AHT, and accuracy.

- Document reward logic for governance, auditability, and repeatability later.

RFT isn’t about chasing bigger models; it’s about shaping smarter ones. With Bedrock, you can tune behaviors that move accuracy, trust, and resolution time. And you can do it without setting your cloud bill on fire.

Start small, reward what matters most, and iterate fast. Winners will not have the most parameters; they’ll have the best rewards.

Want to see how teams ship measurable gains with behavior tuning? Browse our Case Studies.

References

- Amazon Bedrock overview

- What is Reinforcement Learning? (AWS)

- Amazon SageMaker overview

- Amazon Bedrock model evaluation (Docs)

- Amazon Bedrock model customization (Docs)

- Guardrails for Amazon Bedrock (Blog)

- Amazon SageMaker serverless inference (Docs)

"You don’t reduce hallucinations by yelling at the prompt. You reduce hallucinations by paying the model to tell the truth."