Migrate Faster with Bedrock’s OpenAI-Compatible Open Weights Today

You’ve probably built half your stack around the OpenAI API. Smart move. But now your CFO wants lower costs, your CISO wants tighter control, and your team wants real fine‑tuning. Enter Amazon Bedrock’s new open‑weight models, wired for OpenAI API compatibility. So you can switch providers without paying the rewrite tax.

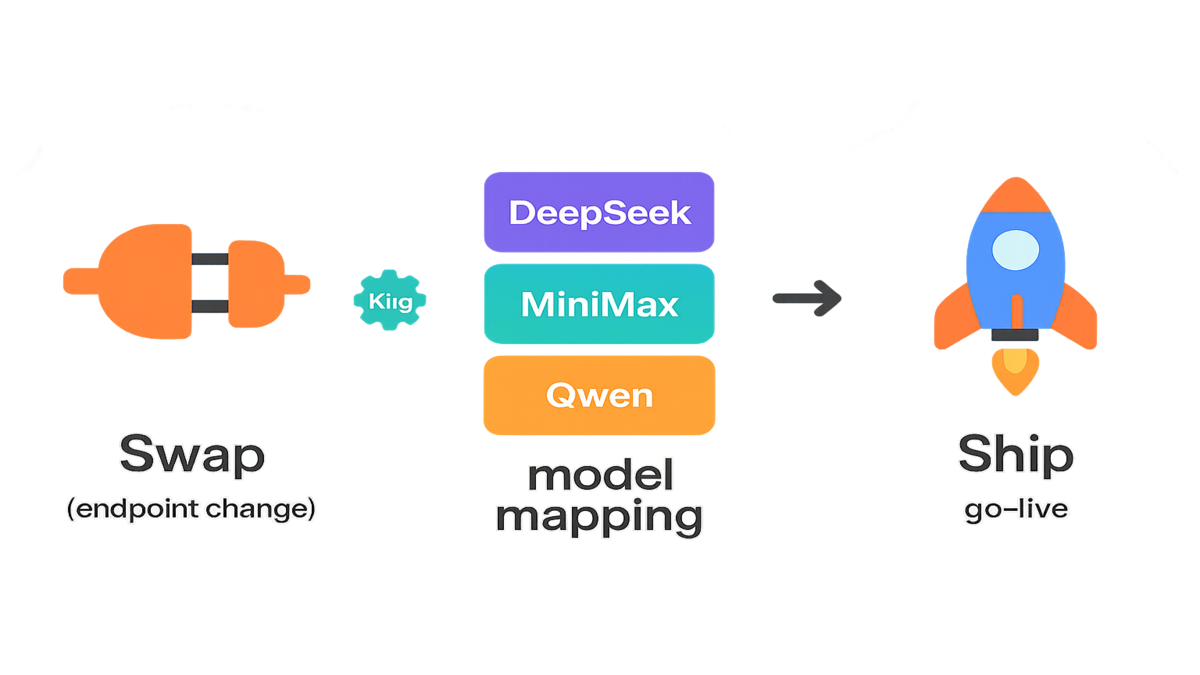

Here’s the punchline: you keep your agent workflows, chat flows, and codegen routines. You swap endpoints, map model names, and ship. Bedrock’s serverless layer handles scaling, security, and model customization for you. You get enterprise guardrails and freedom to fine‑tune on your own data.

The newest wave—DeepSeek v3.2, MiniMax 2.1, and Qwen3 Coder Next—lands with OpenAI spec alignment. Add Kiro, a spec‑driven AI dev tool, and you’re shipping in days. Not months. It’s the move if you want multi‑model choice without chaos.

If you’ve been Googling “aws bedrock openai” or “bedrock openai models,” this is your green light. Let’s make the migration boring, in the best way, and the results loud.

TLDR

- New open‑weight models on Amazon Bedrock (via Project Mantle) match the OpenAI API spec.

- Lineup: DeepSeek v3.2, MiniMax 2.1, and Qwen3 Coder Next for agents, chat, and codegen.

- Use Kiro to spec your flows and auto‑generate integration glue.

- Bedrock is serverless, secure, and fine‑tune‑ready on your proprietary data.

- Reinforcement‑style tuning works via external pipelines plus Bedrock Custom Models.

Open weights that speak OpenAI

Why open weight matters now

Open‑weight models give you transparency and control without starting from scratch. You can inspect behavior, attach adapters, and fine‑tune on your data. On Bedrock, you also get managed infra: autoscaling, IAM‑backed access, private VPC links, and enterprise guardrails. Translation: fewer fire drills, more speed.

- Control: Bring your own adapters and domain data with Bedrock Custom Models.

- Safety: Centralize content filters and red‑teaming with Bedrock Guardrails.

- Governance: Use account‑level policies and logging (CloudWatch, CloudTrail) like your AWS stack.

Open weights also help with repeatability. You can log which base model, adapter, dataset slice, and hyperparameters made a release. That means tight versioning across dev, staging, and prod. You can roll forward or back without guesswork.

Open weights also lower vendor risk in practice. If you need to switch providers or run hybrid, you’re not stuck. It’s like open file formats—you keep your work and your options.

OpenAI API compatibility

OpenAI’s request and response shape became the default standard. Bedrock’s new open‑weight models match that spec, so migration gets simple. Change your base URL, map model IDs, confirm function‑calling JSON schema, then test tool outputs. Most teams keep prompts and business logic as‑is.

Example: you’ve got a code‑assist agent using gpt‑4 function calling today. With OpenAI‑compatible models in Bedrock, you map to the provider’s tool schema. You keep your function signatures, and the agent keeps humming. Now you also get Bedrock’s serverless autoscaling.

As one engineering lead said during a dry run, “We didn’t change our product. We changed our dependency.” Exactly the point.

Compatibility checklist

- Roles and messages: system, user, assistant map cleanly.

- Tools and function calling: JSON schema aligns; add strict validation for shape drift.

- Streaming: token events stream in order; confirm event names and delimiters.

- Parameters: temperature, top‑p, and max tokens behave similar; confirm defaults.

- Errors: map error codes and retry policies; don’t assume 1:1 parity.

Meet the lineup

Quick model snapshots

- DeepSeek v3.2: Generalist with strong reasoning and long‑context skills. Great for agent orchestration, multi‑step planning, and retrieval‑aided decisions. From the DeepSeek family known for efficient training.

- MiniMax 2.1: Chat‑first behavior, crisp instruction following, and quick turns. Ideal for customer bots and ticket triage. MiniMax focuses on dialog alignment; see the MiniMax API for more.

- Qwen3 Coder Next: Code‑gen specialist from the Qwen lineage. Strong on multi‑file edits, refactors, and test creation. Nice for IDE plugins, CI autofixes, and review assistants.

These arrive under Project Mantle with OpenAI spec alignment. That means less glue code, fewer surprises, and faster QA cycles.

Picking the right fit

- Agents and tools: Start with DeepSeek v3.2 for planning. If latency rules, try MiniMax 2.1.

- Pure chat and support: MiniMax 2.1 likely wins on tone and safety defaults.

- Codegen and repair: Qwen3 Coder Next is your pick. Measure diff quality and compile rates.

Pro tip: run a week‑long A/B with real traffic. Evaluate win rates on user‑labeled outcomes, not only BLEU or code metrics. Keep a rollback switch to your prior model behind a feature flag. That’s how you move fast, not reckless.

Evaluation plan

- Offline: Use a frozen test set of real chats, tasks, or code diffs. Score exact‑match where possible and use human review where needed.

- Shadow: Send mirrored traffic to candidates without action. Just log and compare.

- Online: Route a small slice, like 5–10%, with a kill switch. Track time‑to‑first‑token, total latency, and outcomes like FCR, CSAT, and compile success.

- Budget: Cap token spend per route. Stop early if errors spike or costs jump.

Migration playbook

Step 1 Map the API

- Chat completions: Align to the OpenAI API request and response shape. That’s messages, roles, and system prompts.

- Function calling and tools: Ensure your tool schema is pure JSON and matches tool‑use format. Validate with a strict JSON schema checker to block silent coercions.

- Streaming: Verify token streaming formats. Update your SSE or WebSocket client if event names differ.

Kiro makes this easier. You define a spec—models, tools, safety rails—and Kiro generates glue. That includes env configs, model mapping, and schema validators. Your app logic stays untouched.

Bonus hygiene

- Normalize tokenization edge cases with your own truncation rules.

- Standardize error translation so downstream services keep familiar codes.

- Document model and route ownership. Someone owns each critical path, period.

Step 2 Stabilize prompts

System prompts are your policy engine. Freeze them first. Run a prompt regression suite across your top workflows. Pick 30–50 prompts that cover 80% of traffic. Lock baselines before switching endpoints.

Guardrails live at two levels: prompt rules and platform filters. Use Bedrock Guardrails for safety, PII handling, and jailbreak resistance. Keep brand tone and legal disclaimers inside your system prompts.

Small wins add up:

- Split long system prompts into short, reusable blocks. Version everything.

- Add unit tests for critical phrases, like disclaimers or source cites.

- Track prompt token counts. Keep headroom for long user messages.

Step 3 Validate tools

Your agent is only as good as its tool success rate. Spin up a canary that runs full end‑to‑end flows. Cover tool calls, error handling, and retries. Log tool payloads and model outputs side‑by‑side for triage. Expect small shifts in function selection choices.

Example scenario: a support bot picks between “RefundPolicyLookup” and “EscalateToHuman.” After migration, the escalation threshold shifts a bit. Add a temporary confidence band and a human‑in‑the‑loop rule on day one. Remove it once your metrics settle.

Step 4 Observability quotas SLOs

- Quotas: Check throughput and token quotas before go‑live. Coordinate bumps early, please.

- Telemetry: Emit structured logs for prompts, tool calls, model IDs, latency, and cost per request.

- SLOs: Define p95 latency and success targets. Alert on real issues, not noise.

Step 5 Rollout safely

- Start with internal traffic or low‑risk routes first.

- Use weighted routing with a hard off‑switch.

- Keep the prior provider hot for one full release cycle. Don’t pop champagne early.

Step 6 Post migration tuning

- Tighten prompts after seeing real data in production.

- Adjust temperature, top‑p, and tool‑selection hints.

- Trim prompt bloat and add RAG for repeating facts. Saves tokens and hallucinations.

Enterprise mode

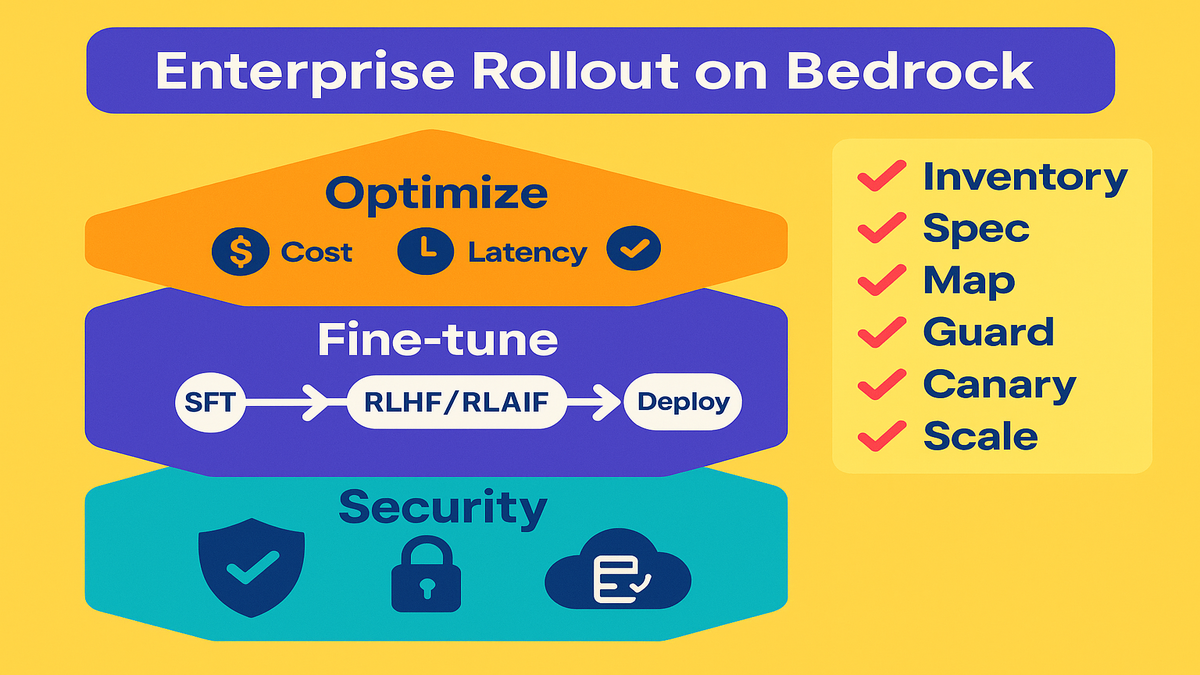

Security and scaling

Bedrock is fully managed and serverless. You get autoscaling, private networking with VPC endpoints, IAM policies, and audit trails via CloudWatch and CloudTrail. That’s the boring infra you want. More in the Bedrock Developer Guide and the model catalog.

Content filters, sensitive data handling, and safety categories can be centralized with Guardrails for Bedrock. Tie that into DLP so you’re not rebuilding compliance every quarter.

Security checklist

- Enforce IAM least privilege for any app calling Bedrock.

- Route traffic via VPC endpoints and restrict egress where needed.

- Centralize logs and access reviews with your SIEM and audit cycles.

Fine tuning SFT later

“Reinforcement fine‑tuning in Amazon Bedrock” lands in two parts:

- Supervised fine‑tuning (SFT): Bedrock supports customization for select models via Custom Models. You upload curated input and output pairs, then train adapters without touching base weights.

- Reinforcement signals (RLHF or RLAIF): Run reward modeling and policy optimization outside, like on SageMaker or your infra. Then import adapters or checkpoints through Custom Models. See the paper “Training language models to follow instructions with human feedback” (arXiv:2203.02155).

Workflow: start with SFT on your gold chats or code diffs. Layer a reward model tuned to business outcomes, like first‑contact resolution or compile success. Use offline evaluation to catch regressions. When stable, deploy on Bedrock and gate with Guardrails.

Data tips for fine tune

- Curate for clarity: one task per example and consistent style.

- Label edge cases and failures, like ambiguous inputs or policy checks.

- Keep a holdout set for unbiased evaluation later.

- Version datasets, adapters, and prompts together.

Cost and performance levers

- Token budgets: Keep system prompts short, consistent, and modular. Cache snippets.

- Retrieval: Offload facts to RAG to shrink prompts and cut hallucinations.

- Observability: Log per‑route model choice, latency, and success labels. That’s your loop.

- Streaming: Show first tokens fast for speed feel. Users forgive longer tails.

- Batching: Where latency allows, batch low‑priority work to smooth spikes.

- Timeouts and retries: Fail fast on tool errors. Don’t snowball costs with retries.

- Determinism: Lower temperature for workflows needing consistent outputs.

Reality check

Model catalog changes

If you’re searching for “aws bedrock models list” or “ministral 3b 8b and 14b models available on amazon bedrock,” remember the catalog evolves. Always verify models and regions in the Bedrock Model Catalog. Don’t hardcode assumptions—feature‑flag your model IDs by default.

Note: Bedrock already includes many families, like Amazon, Anthropic, Cohere, Meta, Mistral, and more. The new open‑weight adds—DeepSeek v3.2, MiniMax 2.1, Qwen3 Coder Next—arrive via Project Mantle with OpenAI compatibility. Region availability can vary, so double‑check.

Latency context and tools

Expect small differences in a few areas.

- Latency: cold starts versus hot paths. Warmups really help.

- Context: max tokens and truncation rules. Log and enforce your own caps.

- Tool selection: heuristics differ slightly. Add guardrails and retries as needed.

Run synthetic suites that mimic real users. Include ambiguous prompts, malformed inputs, and adversarial queries. It’s cheaper to catch weirdness pre‑prod than explain it on Monday.

Compliance and data boundaries

If you have strict data boundaries, keep traffic inside your VPC. Control egress and route logs to your SIEM. For broader controls, start with AWS Compliance and map to your internal risk register. Bring security into prompt‑policy talks early. Prompts are policy, not just words.

Quotas errors throughput

- Quotas: each model has default service quotas. Confirm token and TPS limits before load tests.

- Error taxonomy: error codes won’t always match your prior provider. Translate at the edge.

- Backpressure: add circuit breakers and graceful degradation. Fallback to faster or cheaper models.

Speed run recap

- Open‑weight models on Bedrock now match the OpenAI API spec. So you can migrate with minimal code changes.

- DeepSeek v3.2 for reasoning, MiniMax 2.1 for chat, and Qwen3 Coder Next for codegen cover key workloads.

- Kiro turns your spec into working integrations, mapping models, tools, and guardrails.

- Bedrock gives you serverless scale, IAM, VPC, and guardrails. You bring your data and adapters.

- SFT is native. Reinforcement‑style tuning runs externally, then deploy via Custom Models.

FAQ

OpenAI API compatible meaning

It means request and response structures match the OpenAI spec, including chat roles, tools, and streaming. In practice, you swap the base URL and model ID, validate tool schemas, and your app logic still works.

Fine tune in Bedrock

Bedrock supports supervised fine‑tuning for select models through Custom Models. For reinforcement‑style tuning, like RLHF or RLAIF, you train externally, then import adapters or checkpoints via Custom Models.

Safety and compliance

Centralize safety at two layers. Use platform‑level Guardrails for PII, toxicity, and jailbreak resistance. Keep brand tone and disclaimers in prompts. Lock down IAM and use VPC endpoints. Log everything for audit and fast rollback.

Test function calling

Build a canary that runs full end‑to‑end flows with real tools. Validate JSON with a strict schema and compare tool choices before and after. Widen confidence bands temporarily. Track success by outcomes, like ticket resolved or code compiles.

Check model availability

Always check the Amazon Bedrock Model Catalog. Availability changes over time and by region. Avoid hardcoding any assumptions.

Model availability accuracy

Model names and sizes vary by provider and evolve. Confirm current Mistral or Mixtral offerings in the Bedrock Model Catalog. Then pick by region and your use case.

RAG and embeddings

Usually no. Your retrieval pipeline—index, embeddings, reranker—can stay. Start by swapping the generator only. If you change embeddings later, do it as a separate project.

Auto model routing

Yes. Build a simple router that picks a model by task, like chat or code. Keep it transparent, logged, and overridable with a feature flag.

Estimate cost

Sample real traffic, like 1–5%, for a few days. Log input and output tokens, latency, and outcomes. Extrapolate by route. Set budgets and guardrails before full rollout.

Zero downtime checklist

- Inventory: list endpoints, model IDs, and functions you use today.

- Spec: define tool schemas and system prompts. Freeze a prompt regression suite.

- Map: swap base URL and model ID. Align streaming and tool formats.

- Guard: configure Bedrock Guardrails. Set IAM policies and VPC endpoints.

- Canary: run end‑to‑end flows. Watch latency, costs, and outcome metrics.

- Scale: roll out with feature flags. Keep rollback hot for one release cycle.

- Tune: start SFT with gold data. Plan RLHF or RLAIF externally. Deploy via Custom Models.

- Quotas: confirm service quotas and request increases if needed.

- Docs: record model versions, prompt hashes, and config diffs for audit.

You’re not switching paradigms—you’re switching platforms. Keep your business logic. Level up the control plane.

In short: you get the compatibility you need today and the flexibility you’ll need tomorrow. Open weights give you control, Bedrock gives you the rails, and Kiro gives you speed. Migrate once, then iterate without fear. The teams that win in 2026 won’t be the ones with the flashiest demos. They’ll be the ones shipping stable, compliant, multi‑model systems on schedule.

History rhymes in AI: standards win. OpenAI’s spec became the lingua franca; Bedrock speaking it means you can change engines without rebuilding the plane.

Want to see how teams execute migrations and scale multi‑model systems in production? Browse our Case Studies.

Ready to operationalize multi‑model workflows with guardrails, testing, and reporting? Explore our platform Features.

References

- Amazon Bedrock — What is Amazon Bedrock?:

- Amazon Bedrock — Model Catalog:

- Amazon Bedrock — Custom Models (model customization & fine‑tuning):

- Amazon Bedrock — Guardrails:

- Amazon Bedrock — Service Quotas:

- AWS Compliance Programs:

- OpenAI API Reference:

- DeepSeek (GitHub organization):

- Qwen (Alibaba Cloud’s Qwen models, GitHub):

- MiniMax API Guides:

- Ouyang et al., “Training language models to follow instructions with human feedback” (RLHF):