AWS re:Invent 2025 Keynote Recap With On-Demand Replays

You blinked, and AWS shipped a quarter of momentum in just five days. Good news: you don’t need a Vegas badge or 40,000 steps to catch up. Even better: the top bits are already on replay and easy to watch. If you care about AI that ships, sturdy infra, and faster teams, this week’s a cheat code.

re:Invent 2025 wasn’t a vibe—it was an actual roadmap, folks. From AI-first launches to boring yet critical plumbing, the theme stayed simple and sharp: build more, break less, scale smarter. Bonus: keynote replays and deep dives are live, so you can binge the backlog at 1.25x.

If you missed Matt Garman on stage or skipped the AWS keynote stream, you're not behind. You're right on time. This recap distills what truly matters and where to point your team next. Think of it as your re:Invent recap, minus the desert dryness and plus the links you'll actually click.

One favor: read this with a pen, not just a scroll. Circle one AI workflow and two infra tweaks you can ship within 30 days. That's it: nothing fancy or heroic. The goal isn't to admire the roadmap; it's to pick lanes, set metrics, and move. Keep it reversible, keep it measurable, and keep it boring in the best way.

TL;DR

- Keynotes and technical sessions are on-demand—watch anywhere, anytime, including YouTube replays.

- AI took center stage, but core services got serious, practical upgrades.

- Real-world examples: Adobe’s AI for digital experiences; Writer using Amazon Bedrock for secure gen AI.

- Hands-on wins: EC2 stuck-instance termination, Auto Scaling + Lambda hooks, cleaner Amazon Connect UI.

- Use this month to pilot one AI workflow and ship two infra improvements.

What Dropped at re:Invent 2025

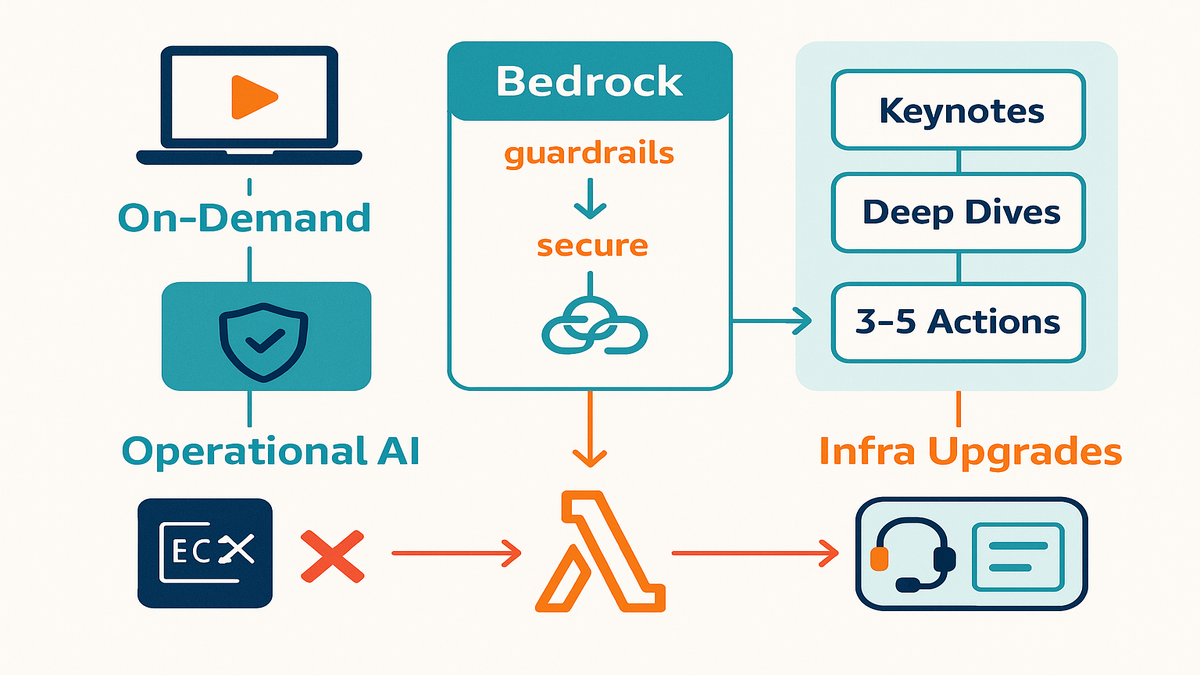

Operational AI was the plot

You saw the hype, loud and everywhere, but here’s the actual substance. The biggest stories were generative AI that fits real stacks—governed, integrated, and cost-aware. Case in point: Writer integrated Amazon Bedrock for secure gen AI workflows without data leaks or duct-taped governance. Adobe also showed how it’s using AI for digital experiences at scale, with privacy still tight.

The shift isn't more models; it's more usable models that teams can trust. For you, that means guardrailed endpoints, traceable prompts, and connectors into services you already run.

Operational AI in practice

- Guardrails: add safety filters and PII controls around prompts and outputs to keep brand and data clean. Amazon Bedrock’s guardrails and access controls exist for exactly this use.

- Observability: log prompts, model choices, and outputs with request IDs for later audits. Keep a paper trail in CloudWatch and attach metadata like use case, role, and risk.

- Knowledge grounding: cut hallucinations by grounding responses in approved data via retrieval or knowledge bases. Keep sources versioned and reviewable for anyone who needs to check them.

- Change management: treat prompts and policies like code, not vibes or guesses. Use pull requests, versioning, and rollbacks with clear diffs and owners for changes.
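To make the guardrail and observability bullets concrete, here is a minimal sketch that screens a prompt with the Bedrock ApplyGuardrail API and emits one structured audit line per request. It assumes `client` behaves like `boto3.client("bedrock-runtime")` and that you already created a guardrail; the guardrail ID, version, and the metadata fields (`use_case`, `role`, `risk`) are placeholders, not anything AWS prescribes. For simplicity it treats any intervention as a block, even when the guardrail only masked PII.

```python
import json
import uuid
from datetime import datetime, timezone


def check_with_guardrail(client, guardrail_id, guardrail_version, text, source="INPUT"):
    """Screen text with a Bedrock guardrail via ApplyGuardrail.

    `client` is assumed to behave like boto3.client("bedrock-runtime").
    Returns (allowed, text_to_use). Any intervention is treated as a block here.
    """
    resp = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source=source,  # "INPUT" for prompts, "OUTPUT" for model responses
        content=[{"text": {"text": text}}],
    )
    if resp.get("action") == "GUARDRAIL_INTERVENED":
        # The guardrail can return replacement or masked text in `outputs`.
        outputs = resp.get("outputs") or []
        return False, outputs[0]["text"] if outputs else ""
    return True, text


def audit_record(use_case, role, risk, model_id, prompt, output, allowed):
    """Build one audit entry; the metadata fields are illustrative placeholders."""
    return {
        "request_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "use_case": use_case,
        "role": role,
        "risk": risk,
        "model_id": model_id,
        "prompt": prompt,
        "output": output,
        "guardrail_allowed": allowed,
    }


def emit(record):
    # In Lambda, anything printed to stdout lands in CloudWatch Logs.
    line = json.dumps(record)
    print(line)
    return line
```

Keeping the request ID on every line is what makes the later audit cheap: one CloudWatch Logs Insights query by ID pulls the prompt, model, and guardrail decision together.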

Why this matters: AI pilots fail when they look cool but remain ungoverned. They succeed when they’re boring, traceable, and wired into current workflows your teams trust.

Foundational services became multipliers

Keynotes grabbed headlines, but the infrastructure updates will hit you next sprint. You can now force-terminate EC2 instances stuck in the shutting-down state, which can save hours of painful waiting. EC2 Auto Scaling lifecycle hooks can trigger AWS Lambda on launch and terminate events. Translation: warm caches, pre-baked agent configs, and cleaner blue/green cutovers without manual glue.

If you measure the week by what ships faster on Monday, this is it. AI is exciting, but these operational primitives are what free up your team's time.

First-hand example: an enterprise contact center used the updated Amazon Connect UI to cut agent onboarding time and unnecessary transfers. Nothing fancy here, just better defaults and fewer clicks. That's the pattern: small changes that compound into real outcomes over time.

Plug this in immediately

- Blue/green deploys: on terminate, drain load balancers, archive logs to S3, and deregister targets cleanly. On launch, pre-warm app caches and fetch config from Parameter Store.

- Cost control: stop stuck capacity from blocking scale-in and delaying your cleanup. Right-size sooner and pay less for idle, wasted compute time.

- Reliability: set sensible lifecycle hook timeouts and heartbeats to avoid orphaned instances during spikes.
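Heartbeats are the part teams most often skip. A minimal sketch of a drain loop that keeps a terminate hook alive follows, assuming `autoscaling` behaves like `boto3.client("autoscaling")` and that `is_drained` is a hypothetical check you supply (for example, "no in-flight connections on this instance"). The heartbeat interval must be shorter than the hook's `HeartbeatTimeout`, and because a single Lambda invocation is capped at 15 minutes, longer drains belong in a worker or a Step Functions loop.

```python
import time


def wait_for_drain(autoscaling, hook_name, asg_name, token, instance_id,
                   is_drained, heartbeat_every_s=60, max_wait_s=600, sleep=time.sleep):
    """Poll a drain check while extending the lifecycle hook with heartbeats.

    `autoscaling` is assumed to behave like boto3.client("autoscaling").
    `is_drained` is a hypothetical zero-argument callable you provide.
    Returns True if the instance drained within max_wait_s, else False.
    """
    waited = 0
    while waited < max_wait_s:
        if is_drained():
            return True
        # Reset the hook's heartbeat timer so it does not fall back to its default result.
        autoscaling.record_lifecycle_action_heartbeat(
            LifecycleHookName=hook_name,
            AutoScalingGroupName=asg_name,
            LifecycleActionToken=token,
            InstanceId=instance_id,
        )
        sleep(heartbeat_every_s)
        waited += heartbeat_every_s
    return False
```

Returning False instead of raising lets the caller decide whether to complete the action with `CONTINUE` anyway or to page someone.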

Pro tip: practice your scale-in path off-peak using synthetic load that mimics traffic. You’ll find the sharp edges before customers do, and save yourself late-night pain.

On-Demand Deep Dives

Keynotes on replay

Couldn't catch every room in Vegas this week? No problem. The re:Invent 2025 keynotes are already on-demand and easy to stream. You get strategy from AWS leaders plus customer stories that map to real roadmaps. Rewatch segments, drop timestamps to your team, and build a standards doc from the source material. Search YouTube for "AWS re:Invent 2025" to find the official AWSEvents channel, which hosts highlight reels and session replays you can snack on.

This is the moment to binge wisely. Not everything, just what matters. Start with keynotes tied to your lane: data and AI, infra, or app velocity. Pull three to five actionable notes, not interesting ideas you'll forget next week.

Make the most of replays

- Watch at 1.25x with captions on, and pause on architecture and pricing slides.

- Drop exact timestamps in your team doc, so notes become real plans. Turn each into a ticket with an owner and a clear due date.

- If you lead a team, assign a session per person, then run a quick teach-back. Thirty minutes is enough time, and everyone learns twice by teaching.

Hands-on sessions and labs

Beyond the big stage, the technical deep dives are the real gold. You'll find sessions on governed gen AI apps, hybrid data pipelines, and tuned autoscaling. No badge is required; just click and learn at your own pace. Bookmark the sessions you'd have attended in person, like demos and reference architectures. Example repos are often linked, which makes practice much easier.

Pro tip: keep a doc open beside the video and capture service names, config flags, and pricing gotchas before you forget them. Treat it like a free internal training program, not background noise.

A simple learning loop

- Pick one session that maps to a pilot you can build within one week.

- Write a ten-bullet summary and one ‘how we’ll test this’ section.

- Build the tiniest version, measure it, then scale or archive in your learnings log.

Practical Upgrades Today

EC2 zombie instance drama

Stuck shutting-down instances are the worst kind of broken, neither alive nor dead. They also block deploys and stall teams when you need speed most. The new ability to force-terminate them lets you unstick autoscaling without a ticket storm. Small feature, huge relief for operations during messy rollouts or late-night incidents.

How to trial it safely

- Identify a non-critical Auto Scaling group and turn on detailed logging for tests.

- Simulate a bad rollout by launching a batch, then issuing scale-in under light load.

- Practice termination paths with lifecycle hooks, so game day won’t surprise you.
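Before you reach for force-terminate, you need to know which instances are actually stuck. Here is a minimal sketch, assuming `ec2` behaves like `boto3.client("ec2")`: it lists an Auto Scaling group's instances in `shutting-down` (using the `aws:autoscaling:groupName` tag that Auto Scaling applies) and tracks how long each has been seen there across polls. The 10-minute threshold and the in-memory `first_seen` dict are assumptions; persist that state somewhere durable in practice. This sketch deliberately does not call the force-terminate option itself; check the EC2 documentation for its exact API parameter.

```python
def list_shutting_down(ec2, asg_name):
    """Return IDs of the group's instances currently in the shutting-down state."""
    filters = [
        {"Name": "instance-state-name", "Values": ["shutting-down"]},
        {"Name": "tag:aws:autoscaling:groupName", "Values": [asg_name]},
    ]
    ids, token = [], None
    while True:
        kwargs = {"Filters": filters}
        if token:
            kwargs["NextToken"] = token
        page = ec2.describe_instances(**kwargs)
        for reservation in page.get("Reservations", []):
            ids.extend(i["InstanceId"] for i in reservation.get("Instances", []))
        token = page.get("NextToken")
        if not token:
            return ids


def update_stuck(first_seen, current_ids, now, threshold_s=600):
    """Track first sighting per instance; return those stuck longer than threshold_s.

    `first_seen` maps instance ID -> epoch seconds when first seen shutting down.
    """
    for iid in list(first_seen):
        if iid not in current_ids:
            del first_seen[iid]  # it finished terminating on its own
    for iid in current_ids:
        first_seen.setdefault(iid, now)
    return sorted(iid for iid, t in first_seen.items() if now - t >= threshold_s)
```

Run it on a schedule during the trial and log each stuck ID; that log doubles as your count of manual interventions.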

What to measure

- Time from ‘scale-in requested’ to ‘capacity stable’ across your environments.

- Number of manual interventions needed per deploy to recover.

- Error rate during cutover windows while changes are deploying.
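The three measurements above are easy to compute once you log a couple of events per deploy. A minimal sketch follows; the event names (`scale_in_requested`, `capacity_stable`) and the input shapes are hypothetical, so map them from whatever your deploy tooling already records.

```python
from datetime import datetime
from statistics import median


def scale_in_durations(events):
    """Seconds from 'scale_in_requested' to 'capacity_stable', keyed by deploy.

    Each event is a dict like {"deploy": "d1", "type": ..., "at": ISO-8601 string}.
    """
    starts, durations = {}, {}
    for e in sorted(events, key=lambda e: datetime.fromisoformat(e["at"])):
        t = datetime.fromisoformat(e["at"])
        if e["type"] == "scale_in_requested":
            starts[e["deploy"]] = t
        elif e["type"] == "capacity_stable" and e["deploy"] in starts:
            durations[e["deploy"]] = (t - starts.pop(e["deploy"])).total_seconds()
    return durations


def deploy_report(events, interventions_by_deploy, cutover_requests, cutover_errors):
    """Summarize time-to-stable, manual interventions, and cutover error rate."""
    durations = scale_in_durations(events)
    deploys = {e["deploy"] for e in events}
    return {
        "median_time_to_stable_s": median(durations.values()) if durations else None,
        # Deploys that never logged "capacity_stable" deserve a closer look.
        "unstabilized_deploys": sorted(deploys - set(durations)),
        "interventions_per_deploy": (
            sum(interventions_by_deploy.get(d, 0) for d in deploys) / len(deploys)
            if deploys else 0.0
        ),
        "cutover_error_rate": cutover_errors / cutover_requests if cutover_requests else 0.0,
    }
```

The median is used instead of the mean so one ugly rollout doesn't hide a general improvement.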

Auto Scaling Lambda hooks

Trigger Lambda on instance lifecycle events to prime instances and manage load balancer targets cleanly. Sanitize and ship logs, warm caches, and stop relying on flaky bash scripts in user data. You'll also cut the time it takes to reach a stable state after sudden scale events.

First-hand example: blue/green teams use a terminate hook to drain connections cleanly. They also archive logs to S3 before instances disappear, which avoids ghost 500s from half-drained instances.

Quick implementation pattern

- Create a lifecycle hook on your Auto Scaling group for launch and terminate transitions.

- Wire the hook to an SNS topic or EventBridge rule that triggers Lambda.

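- In Lambda, perform idempotent tasks like registering or deregistering targets with load balancers, priming caches, and pushing structured logs for later analysis. When the work is done, call CompleteLifecycleAction so the instance moves on instead of waiting for the hook to time out.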

- Set heartbeat timeouts long enough for slow drains, without stalling scale-in entirely. Keep a retry path in case something fails unexpectedly during transitions.
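The pattern above can be sketched as a single Lambda function fed by an EventBridge rule on `aws.autoscaling` lifecycle events. This is a minimal sketch, not a reference implementation: it assumes one target group per Auto Scaling group, passed in through a hypothetical `TARGET_GROUP_ARN` environment variable, and the Lambda timeout must exceed the target group's deregistration delay because it waits for draining before completing the action.

```python
import json
import os

# Hypothetical configuration: the target group that fronts this Auto Scaling group.
TARGET_GROUP_ARN = os.environ.get("TARGET_GROUP_ARN", "")


def handle_lifecycle(event, elbv2, autoscaling, target_group_arn):
    """Handle one EventBridge lifecycle event, then complete the lifecycle action.

    `elbv2` and `autoscaling` are assumed to behave like the boto3 clients.
    """
    detail = event["detail"]
    instance_id = detail["EC2InstanceId"]
    transition = detail["LifecycleTransition"]
    targets = [{"Id": instance_id}]

    if transition == "autoscaling:EC2_INSTANCE_LAUNCHING":
        # Cache priming or config fetches would go here, before registration.
        elbv2.register_targets(TargetGroupArn=target_group_arn, Targets=targets)
    elif transition == "autoscaling:EC2_INSTANCE_TERMINATING":
        # Retries may repeat this call; keep it tolerant of an already-deregistered target.
        elbv2.deregister_targets(TargetGroupArn=target_group_arn, Targets=targets)
        # Block until draining finishes so in-flight requests are not cut off.
        elbv2.get_waiter("target_deregistered").wait(
            TargetGroupArn=target_group_arn, Targets=targets
        )
        # Archiving logs to S3 would go here, before the instance disappears.

    # Let Auto Scaling proceed instead of waiting for the hook's timeout.
    autoscaling.complete_lifecycle_action(
        LifecycleHookName=detail["LifecycleHookName"],
        AutoScalingGroupName=detail["AutoScalingGroupName"],
        LifecycleActionToken=detail["LifecycleActionToken"],
        LifecycleActionResult="CONTINUE",
    )
    print(json.dumps({"instance_id": instance_id, "transition": transition, "result": "CONTINUE"}))
    return transition


def lambda_handler(event, context):
    import boto3  # imported lazily so handle_lifecycle can be tested without AWS

    return handle_lifecycle(
        event, boto3.client("elbv2"), boto3.client("autoscaling"), TARGET_GROUP_ARN
    )
```

Injecting the clients keeps the logic testable with fakes. On a retried event the lifecycle action may already be complete, so in production you'd likely catch and log that error rather than fail the invocation.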

Amazon Connect cleaner UI

The Contact Control Panel and workflow builder are simpler and easier to use now. That sounds cosmetic, but it means faster agent ramp, fewer misroutes, and happier supervisors. If you own CX metrics, this upgrade is low effort and very measurable.

Run a quick pilot

- Choose one queue with frequent transfers or long handle times to start.

- Move only that flow to the new UI and train a small group of agents on it.

- Track average handle time, first-call resolution, and supervisor escalations for two weeks.
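Scoring the pilot is simple arithmetic once you export contact records for the baseline and pilot periods. A minimal sketch, assuming you have already flattened each contact into the hypothetical fields below (handle time in seconds, a first-contact-resolution flag, and an escalation flag); these are not the raw Amazon Connect record field names.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    # Hypothetical flattened fields; map them from your own contact record export.
    queue: str
    handle_seconds: float
    resolved_first_contact: bool
    escalated: bool


def queue_kpis(contacts, queue):
    """Average handle time, first-contact resolution rate, and escalation rate."""
    rows = [c for c in contacts if c.queue == queue]
    if not rows:
        return None
    n = len(rows)
    return {
        "contacts": n,
        "aht_s": sum(c.handle_seconds for c in rows) / n,
        "fcr_rate": sum(c.resolved_first_contact for c in rows) / n,
        "escalation_rate": sum(c.escalated for c in rows) / n,
    }


def compare(baseline, pilot):
    """Pilot minus baseline per KPI; a negative AHT or escalation delta is an improvement."""
    return {k: pilot[k] - baseline[k] for k in ("aht_s", "fcr_rate", "escalation_rate")}
```

With two weeks of data per side, also check the contact counts are comparable before you celebrate a delta.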

Data stack: DocumentDB and Lambda

Better DocumentDB and Lambda integration lets serverless apps run more logic close to the data. That means less glue code, calmer pipelines, and easier rollbacks thanks to decoupled handlers.

Where it shines

- Event-driven updates: react to data changes, update a search index, and notify downstream services without a queue hairball.

- Safer migrations: keep writes simple and push transformations into versioned Lambdas for fast rollback.
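For the event-driven case, a Lambda function reading a DocumentDB change stream can turn each change into an idempotent index operation. This is a minimal sketch: the event shape (`events[].event` with `operationType`, `documentKey`, `fullDocument`) follows Lambda's DocumentDB event source format as we understand it, so verify it against a real event, and a plain dict stands in for your search index. Update events only carry `fullDocument` if the event source mapping is configured to look up the full document.

```python
def _doc_id(key):
    # Extended JSON may wrap ObjectIds as {"$oid": "..."}.
    if isinstance(key, dict):
        return key.get("$oid", str(key))
    return str(key)


def to_index_ops(event):
    """Map DocumentDB change events to (action, doc_id, document) tuples."""
    ops = []
    for record in event.get("events", []):
        change = record["event"]
        op = change.get("operationType")
        doc_id = _doc_id(change["documentKey"]["_id"])
        if op in ("insert", "update", "replace") and change.get("fullDocument") is not None:
            ops.append(("upsert", doc_id, change["fullDocument"]))
        elif op == "delete":
            ops.append(("delete", doc_id, None))
    return ops


def apply_ops(ops, index):
    """Apply ops to a dict standing in for a search index.

    Upserts and deletes keyed by document ID are idempotent, so a retried batch is harmless.
    """
    for action, doc_id, doc in ops:
        if action == "upsert":
            index[doc_id] = doc
        else:
            index.pop(doc_id, None)
    return index
```

Keeping the mapping (`to_index_ops`) separate from the side effect (`apply_ops`) is what makes a versioned, quickly rolled-back handler practical.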

Collaboration: Clean Rooms and Entity Resolution

With event publishing to EventBridge and better fuzzy matching, you can collaborate safely. Analysts get more accurate joins across partners, and security teams finally sleep.

Practical next step

Pilot one clean-room collaboration with a partner on a narrow dataset. Measure lift, not novelty, so you can justify scaling with real numbers.

If you’re evaluating privacy-safe marketing analytics, explore AMC Cloud and Requery. They help operationalize Amazon Marketing Cloud clean-room workflows without heavy lift.

Governance checklist for collaborations

- Define inputs and outputs upfront, in plain language everyone understands. Which fields go in, and exactly which aggregates come out? Document it before anyone runs queries or shares results.

- Set data retention windows and deletion policies before you start any collaboration.

- Log every query with a purpose and an owner attached to it. Treat this like change control for analytics, not a casual playground.
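The checklist can be enforced as a lightweight change-control check on your own query log. A minimal sketch, assuming hypothetical record types for the agreed collaboration terms and for each logged query; it sits on top of whatever analysis rules you configure in AWS Clean Rooms and does not replace them.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Collaboration:
    # Hypothetical record of what was agreed with the partner up front.
    allowed_inputs: set
    allowed_outputs: set
    retention_days: int
    started: date


@dataclass
class QueryLogEntry:
    query_id: str
    owner: str
    purpose: str
    input_fields: set
    output_aggregates: set


def review(entry, collab):
    """Return a list of governance problems; an empty list means the query passes."""
    problems = []
    if not entry.owner.strip():
        problems.append("missing owner")
    if not entry.purpose.strip():
        problems.append("missing purpose")
    extra_in = entry.input_fields - collab.allowed_inputs
    if extra_in:
        problems.append(f"unapproved inputs: {sorted(extra_in)}")
    extra_out = entry.output_aggregates - collab.allowed_outputs
    if extra_out:
        problems.append(f"unapproved outputs: {sorted(extra_out)}")
    return problems


def deletion_due(collab):
    """Date by which collaboration data should be deleted under the agreed retention window."""
    return collab.started + timedelta(days=collab.retention_days)
```

Returning a list of problems rather than a pass/fail flag gives reviewers something concrete to fix in the pull request.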

From Hype to Roadmap

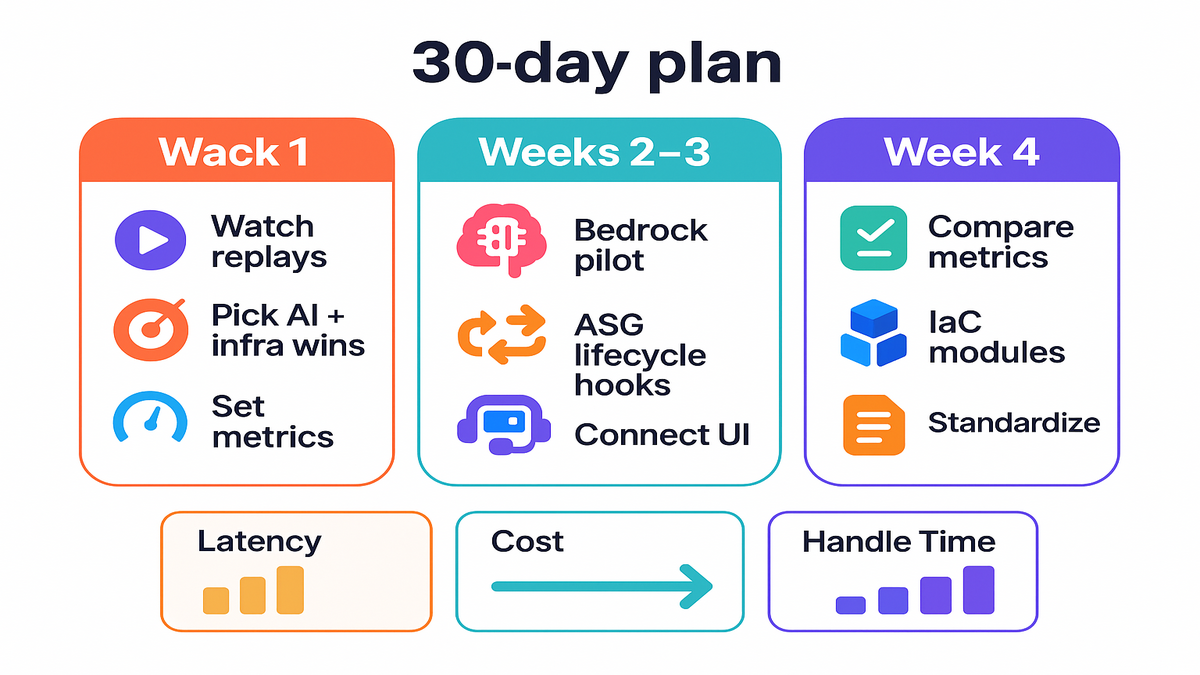

Week 1: watch, decide, narrow

- Watch the re:Invent livestream replays: the keynotes plus two relevant 300-level sessions.

- Pick one AI use case and two infra wins that meet clear criteria.

- Criteria: each pick should be measurable, reversible, and clearly customer-facing.

- Draft success metrics like latency, cost per request, and agent handle time.

- Write a one-page plan with problem, approach, risks, and rollback steps.

- Share it quickly for feedback, so you can refine without delay.

Weeks 2-3: build the smallest version

- Pilot a Bedrock-powered workflow for one internal process, like support summarization.

- Implement EC2 lifecycle hooks with Lambda in one sandboxed Auto Scaling group.

- Migrate one queue or routing flow to the new Amazon Connect UI.

- Bake in logging and dashboards from day one, so week four isn’t guesswork.

Week 4: prove or pause

- Compare metrics to baseline and keep what truly moves the needle. Kill the rest quickly, so resources return to higher-impact work.

- Turn pilot IaC into reusable modules that your platform team maintains.

- Write the ‘we standardized this’ page your team will actually use.

- Present a ten-minute readout with screenshots and three next steps.

Risk guardrails

- Have a clear kill switch and rollback plan for every single pilot.

- Gate external access and keep early AI pilots on internal or masked data.

- Cap spend with budgets and alerts, because surprise bills kill momentum.
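A budget with staged alerts is the quickest guardrail here. A minimal sketch that builds arguments for the AWS Budgets CreateBudget API, to be passed as `boto3.client("budgets").create_budget(**request)`; the budget name, amount, email, and thresholds are placeholders. Note that a budget alert notifies you; it does not stop spend on its own. To scope it to one pilot, add cost filters (for example a cost allocation tag) per the Budgets documentation.

```python
def pilot_budget_request(account_id, name, monthly_usd, email, thresholds=(50.0, 80.0, 100.0)):
    """Build CreateBudget arguments: a monthly cost budget with email alerts.

    One ACTUAL-spend notification is created per percentage threshold.
    """
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": name,
            # The Budgets API takes the amount as a string.
            "BudgetLimit": {"Amount": f"{monthly_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [
            {
                "Notification": {
                    "NotificationType": "ACTUAL",
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": t,
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
            }
            for t in thresholds
        ],
    }
```

Building the request as plain data makes it easy to review in a pull request alongside the pilot's other IaC.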

Quick Pulse Check

- You're not late at all: on-demand videos make this the perfect catch-up window.

- AI mattered, but quiet infrastructure upgrades will save you hours every week.

- Real customers like Adobe and Writer showed secure, governed generative AI in action.

- Start with one AI workflow and two infra wins, then measure impact in 30 days.

- Use lifecycle hooks with Lambda and the new Connect UI for immediate ROI.

If you only do one thing this week, pick a target metric and align a pilot. Think latency, AHT, or cost per request, then schedule the demo. Momentum beats perfect, every single time, especially in busy seasons.

FAQ: re:Invent Questions

1. Where can I find the keynote replays?

Head to the official re:Invent site for on-demand content across tracks and levels. For highlights and session uploads, search for "re:Invent 2025" on the AWSEvents YouTube channel.

2. What's the fastest way to get results?

Pick a single AI pilot, like summarizing support tickets with Bedrock, and two operational upgrades. Choose EC2 lifecycle hooks with Lambda and the Amazon Connect UI to start. Timebox the work to four weeks and define success metrics up front.

3. Do the Amazon Connect updates matter?

Yes, they matter, and the gains show up quickly in your metrics. The simplified Contact Control Panel and workflow builder reduce clicks and training time. They also reduce misroutes, so AHT and CSAT should improve with little risk.

4. What do Auto Scaling lifecycle hooks do?

They let you run code when instances launch or terminate, automatically and safely. You can warm caches, register or deregister targets, drain connections, and ship logs. That means fewer flaky deploys and much more predictable scale events overall.

5. Is there a secure path to generative AI?

That’s the Amazon Bedrock promise, with guardrails and access controls built in. Customers like Writer shared governed gen AI workflows that keep enterprise data safe.

6. Can I learn without traveling to Vegas?

Absolutely, you can learn most of it without stepping on a plane this year. Keynotes, breakout sessions, and many deep dives are online. Watch on your schedule, share timestamps, and turn notes into actionable tickets.

7. How do I keep AI costs under control?

Scope your pilot to one high-value workflow, and cap usage with budgets and alerts. Log model calls with simple per-request metrics and clear tags for tracking. Start with smaller context windows, and scale up only if accuracy demands it.
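Per-request cost tracking can hang off the `usage` block that the Bedrock Converse API returns (`inputTokens`, `outputTokens`) plus `metrics.latencyMs`. A minimal sketch; the prices in `PRICES` and the model key `example-model` are hypothetical placeholders, so look up real per-token rates on the Bedrock pricing page for the model you use.

```python
# Hypothetical prices in USD per 1,000 tokens; replace with real Bedrock pricing.
PRICES = {"example-model": {"input": 0.003, "output": 0.015}}


def request_metrics(response, model_id, use_case, prices=PRICES):
    """Turn one Converse-style response into a tagged per-request cost record."""
    usage = response["usage"]
    p = prices[model_id]
    cost = usage["inputTokens"] / 1000 * p["input"] + usage["outputTokens"] / 1000 * p["output"]
    return {
        "model_id": model_id,
        "use_case": use_case,  # the tag you group and budget by
        "input_tokens": usage["inputTokens"],
        "output_tokens": usage["outputTokens"],
        "latency_ms": response.get("metrics", {}).get("latencyMs"),
        "cost_usd": round(cost, 6),
    }


def cost_per_request(records):
    """Average cost across logged requests, or None if nothing was logged."""
    if not records:
        return None
    return sum(r["cost_usd"] for r in records) / len(records)
```

Input tokens usually dominate when context windows are large, which is why starting small pays off: the input term shrinks first.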

8. What are the common lifecycle hook gotchas?

Two common ones are missing heartbeats and non-idempotent cleanup code. Without heartbeats, a hook times out before a slow drain finishes, and Auto Scaling applies the hook's default result. Non-idempotent code causes double drains or deletes when an event is retried, so keep every operation idempotent. Also set heartbeat timeouts that match your slowest production drains.

9. What data is safe to start with?

Start with non-production data or masked datasets that remove sensitive information. Avoid customer identifiers until guardrails, access controls, and security sign-off exist.

10. Custom RAG or a managed knowledge base?

If speed and governance matter, start with managed services to test value quickly. Go custom only after you hit clear limits that justify the extra work.

11. How should I measure AI workflow impact?

Keep it boring and clear with time saved, error rate, and user satisfaction. If it saves minutes and reduces edits, you’re absolutely on the right track.

Ship the Wins

- Identify your top customer friction and one operational bottleneck right now.

- Watch two relevant on-demand sessions and capture concrete configs and options.

- Stand up a Bedrock prototype focused on one clear, measurable metric.

- Add lifecycle hooks with Lambda to a non-critical Auto Scaling group.

- Pilot the new Amazon Connect UI on a limited, well-chosen queue.

- Review results with stakeholders and document what should standardize next.

- Promote the working bits to IaC modules, and sunset the rest.

Pro tips to keep momentum

- Name owners and deadlines on every experiment, because accountability is a feature.

- Share short demos early, since a three-minute Loom beats a long doc.

- Celebrate kill decisions too, because stopping the wrong thing saves the right thing.

In the end, re:Invent is only as good as what you ship next. The 2025 story is clear: governable AI, quiet infrastructure, and respectful collaboration. You don’t need a full rewrite, just a few upgrades that compound steadily. Watch the right sessions and steal patterns, then turn them into customer wins. Do two things your customers feel this quarter, and earn real advantage.

For real outcomes and playbooks you can borrow, check out our Case Studies.

References

- AWS re:Invent official site (on-demand content)

- AWSEvents YouTube channel (session replays/highlights)

- Amazon Bedrock service overview

- Amazon Bedrock Guardrails

- Knowledge Bases for Amazon Bedrock

- EC2 Auto Scaling lifecycle hooks documentation

- Amazon EC2 instance lifecycle (reference)

- Amazon Connect release notes

- AWS Clean Rooms product page

- AWS Entity Resolution product page

- Amazon EventBridge service overview

- Amazon DocumentDB (with MongoDB compatibility)

- AWS Pricing Calculator

- AWS Well-Architected Framework

- Amazon CloudWatch (logs and metrics)