Ship Secure Multi-Tenant SaaS Faster With Lambda Isolation

You know that gut drop when one tenant can peek at another’s data? In multi-tenant SaaS, that’s the nightmare. The usual quick “fix” is a stack per tenant, and you pay the tax in cost, chaos, and ops pain.

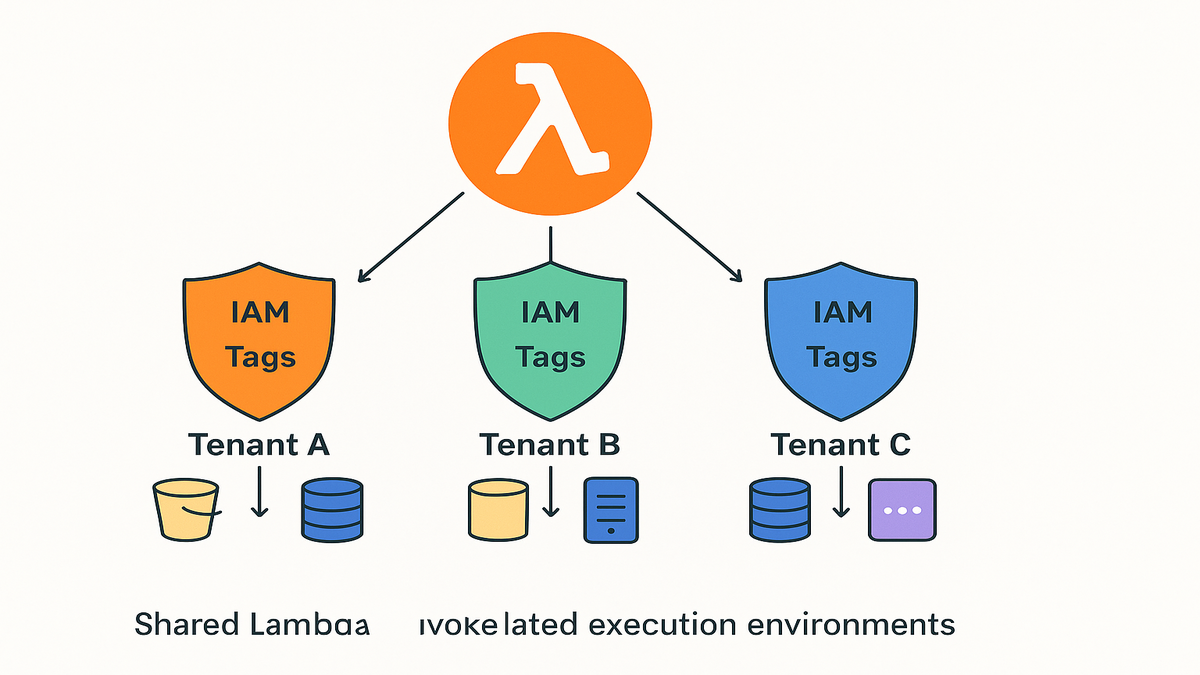

Here’s the plot twist: AWS Lambda just added Tenant Isolation Mode. You run one function. Each tenant gets a sealed runtime, scoped by IAM, tags, and context. You keep the speed of shared infra without the shared blast radius.

Translation: you can design a multi-tenant app that checks SOC 2, ISO 27001, and CFO boxes. No rats’ nest of per-tenant code paths or forks.

If you’ve been waiting for clean, scalable isolation that won’t kneecap dev speed or unit costs, this is it. Let’s break down how it works, what to watch, and how to ship it this week.

If that sounds like magic, it’s three boring but strong ideas working together: IAM perms, resource tags, and tenant-aware runtime context. You stop trusting app code to “remember” isolation and hand that job to the control plane. Best part: you can adopt it slowly. Enable it for one flow, prove it with tests and dashboards, then roll it across your stack.

TL;DR

- Lambda Tenant Isolation Mode gives each tenant its own execution isolation inside one function.

- Isolation is enforced with IAM roles, ABAC-style tagging, and tenant-aware runtime context.

- You get fewer stacks to manage, lower cost, and stronger security boundaries.

- Works for SaaS, internal multi-tenant platforms, and regulated workloads.

- Start by enabling the mode, tagging resources by tenant, and wiring IAM to those tags.

What Isolation Mode Does

Compute and context isolation

Tenant Isolation Mode spins up isolated runtimes per tenant while you use a single Lambda. Each call carries tenant context, such as tenantId, plan tier, and routing hints. Your code uses that context to decide what to touch and what to skip. You write shared business logic once, while the platform enforces isolation.

Under the hood, the runtime gets tenant-aware attributes on every call. You don’t hardcode ARNs. You derive them from tenantId. Even if a dev points at the wrong prefix or table, IAM says “nope” before the request leaves. Your code stays simple—just business logic—while the platform acts like your bouncer.

IAM and ABAC control

You scope each tenant to its own IAM role and use ABAC. Roles and policies reference tags like TenantId=1234. Even if two tenants hit the same Lambda, the caller can’t read or write without matching tags. The boundary is not your code—it’s IAM.

In practice, attach a TenantId tag to the principal via role tags or session tags. Require a matching TenantId tag on all resources too. Policies check aws:PrincipalTag/TenantId against aws:ResourceTag/TenantId. Add deny-by-default, and you get tight least privilege. Use permission boundaries so dev roles can’t escape ABAC rules. Use SCPs to require tags across the org.

Resource tagging done right

Tag everything that moves: S3 buckets and objects, tables, queues, topics, even log groups. For data that can’t carry tags, such as DB rows or partitions, put tenantId in the key instead. The rule: no tag, no touch. Standardize one tag key, TenantId, check it in policies through aws:ResourceTag/TenantId, and enforce it in CI/CD. The upside is cleaner audits, safer refactors, and faster tenant onboarding.

First-hand example: You’ve got a billing function. Tenant A calls it and the runtime injects TenantId=A. IAM only allows access to resources tagged TenantId=A. If your code points at Tenant B’s S3 prefix, the request dies with AccessDenied. That’s real isolation.

Common footguns to avoid:

- Don’t rely only on bucket tags; tag S3 objects too, since checks hit object tags.

- Enforce tags at deploy time. Pipelines should fail if required tags are missing.

- Standardize casing and spelling. TenantId is not tenantID.
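The deploy-time tag check and the casing rule above can be sketched as a small pipeline step. This is a minimal sketch, not a real CloudFormation or Terraform integration: the resource shape (`name` plus a `tags` dict) and the function name `find_tag_violations` are assumptions made for illustration.

```python
REQUIRED_TAG_KEY = "TenantId"  # canonical key; casing matters


def find_tag_violations(resources):
    """Return (resource_name, reason) pairs for resources that break the tag rule.

    `resources` is a list of dicts like {"name": "...", "tags": {...}}.
    That shape is an assumption for this sketch.
    """
    violations = []
    for resource in resources:
        tags = resource.get("tags", {})
        if REQUIRED_TAG_KEY in tags:
            # Key is present with the right casing; still reject blank values.
            if not str(tags[REQUIRED_TAG_KEY]).strip():
                violations.append((resource["name"], "empty TenantId value"))
            continue
        # Catch near-misses such as tenantID so the error message is actionable.
        near_miss = [k for k in tags if k.lower() == REQUIRED_TAG_KEY.lower()]
        if near_miss:
            violations.append((resource["name"], f"wrong casing: {near_miss[0]}"))
        else:
            violations.append((resource["name"], "missing TenantId tag"))
    return violations
```

A pipeline would call this on the planned resources and exit non-zero if the returned list is not empty.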

Impact and audits

Security that auditors like

SaaS audits boil down to proof. With Tenant Isolation Mode, you show tag-led scoping, per-tenant roles, and deny-by-default policies. “Security is job zero” isn’t fluff here—it’s hard lines enforced by the control plane. That reduces cross-tenant risk and shortens audit cycles.

You also get clean evidence: IAM policies with condition keys, logs with tenantId fields, and AccessDenied events for cross-tenant attempts. Pair with change control—PRs and ticket numbers in commits—and you walk into audits with receipts, not excuses.

Fewer stacks, fewer headaches

Running one function for 500 tenants beats 500 functions. Fewer deploy units equals fewer things to break. Devs maintain shared code once, while policy and data paths stay split. Rollouts are simpler, flags are cleaner, and incidents have a smaller, traceable blast radius.

It lowers brain load too. New hires learn one pipeline, not a maze of forks. Troubleshooting gets repeatable. With consistent tags, you can query the org fast. “Show all resources for Tenant 1234,” and you get the list right away.

Cost math that makes sense

More tenants, same function. Warm paths, shared cache, fewer idle bits. You pay for calls and duration, not hundreds of dormant copies. Since you tag everything, showback or chargeback per tenant is easy. Great for unit economics and pricing talks.

First-hand example: A data analytics SaaS merges 120 per-tenant Lambdas into one function. It uses Tenant Isolation Mode. Result: one pipeline to deploy, 120 IAM roles with tag filters, and a dashboard by tenant ID. Ops time drops, and on-call stops hating Fridays.

If you want more, reserve extra concurrency or memory for premium tenants. Keep one codebase but tune runtime settings or upstream throttles. VIPs get steadier latency. The books stay clear because everything is tagged.

Blueprint: Enable, Tag, Lock

Enable Tenant Isolation Mode

Flip it on in the console or CLI for your function. The runtime starts receiving tenant-aware context. Your code uses that context to pick tenant resources, like the right schema or S3 prefix.

Roll it out slow. Start with a low-risk path like reports or exports. Validate with negative tests. Cross-tenant attempts should fail. Use dashboards so per-tenant metrics light up. Then expand to hot paths.

Tag and standardize

Pick a canonical tag key: TenantId. Apply it to S3 buckets and objects, tables, queues, and log groups. Bake tag checks into CI/CD so untagged resources can’t ship.

Use org guardrails. Tag Policies can require tags and allowed values. Combine with AWS Config rules or conformance packs to flag missing TenantId. Goal is simple: missing tags never hit prod.

IAM policy pattern (ABAC)

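A minimalist ABAC policy has one core idea: allow an action only when the resource’s TenantId tag equals the caller’s TenantId tag. In IAM terms, that is a StringEquals condition that compares aws:ResourceTag/TenantId with ${aws:PrincipalTag/TenantId}.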

Attach the TenantId as a role tag or pass it as a session tag when assuming the role. Validate with the IAM policy simulator and Access Analyzer.
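As an illustration of that pattern, here is a hedged sketch that builds such a policy document in Python. The helper name `build_abac_policy` and the SQS actions and ARN are assumptions picked for the example. Not every service supports `aws:ResourceTag`, so check the service's condition keys before relying on it (S3 objects, for instance, use `s3:ExistingObjectTag`).

```python
import json


def build_abac_policy(actions, resource_arns):
    """Build an IAM policy that allows `actions` only when tenant tags match."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowWhenTenantTagsMatch",
                "Effect": "Allow",
                "Action": list(actions),
                "Resource": list(resource_arns),
                "Condition": {
                    # The policy variable resolves to the caller's TenantId tag
                    # (role tag or session tag) at evaluation time.
                    "StringEquals": {
                        "aws:ResourceTag/TenantId": "${aws:PrincipalTag/TenantId}"
                    }
                },
            }
        ],
    }


# Hypothetical usage: one policy shared by every tenant role.
policy = build_abac_policy(
    ["sqs:SendMessage", "sqs:ReceiveMessage"],
    ["arn:aws:sqs:*:*:*"],
)
print(json.dumps(policy, indent=2))
```

Because the tenant value comes from a policy variable, the same document can be attached to every tenant role instead of generating one policy per tenant.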

Wire the runtime context

Your handler reads tenant context from the event or auth claims. Avoid any hardcoded names. A simple pattern:

- Use tenantId to compute S3 key prefixes: s3://myapp-data/tenantId=1234/…

- Use tenantId as the first DynamoDB partition key token.

- Pass tenantId along headers for downstream services and logs.

First-hand example: Ingest API gets tenantId from the auth token. It sets it in logger context and builds resource ARNs on the fly. If a dev tests a cross-tenant call, IAM blocks it. Safety rails by default.

Add language helpers. Wrap S3, DynamoDB, and queue clients with a tiny helper. It takes tenantId and returns a pre-scoped client. Every call comes from context, not constants.
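One way to picture such a helper, as a sketch under assumptions: `TenantScope`, its method names, the tenantId format regex, and the `#` separator in the partition key are all hypothetical. It only derives names from tenantId; wrapping real SDK clients is left out so the example stays self-contained.

```python
import re
from dataclasses import dataclass

# Assumed tenantId format for this sketch; adjust to your own ID scheme.
_TENANT_ID_PATTERN = re.compile(r"^[A-Za-z0-9_-]{1,64}$")


@dataclass(frozen=True)
class TenantScope:
    """Derives every resource name from tenantId so nothing is hardcoded."""

    tenant_id: str

    def __post_init__(self):
        # Reject IDs that could smuggle path separators or wildcards.
        if not _TENANT_ID_PATTERN.fullmatch(self.tenant_id):
            raise ValueError(f"invalid tenantId: {self.tenant_id!r}")

    def s3_key(self, relative_key: str) -> str:
        """Return an object key under this tenant's prefix."""
        if relative_key.startswith("/") or ".." in relative_key.split("/"):
            raise ValueError("relative_key must stay inside the tenant prefix")
        return f"tenantId={self.tenant_id}/{relative_key}"

    def partition_key(self, entity: str) -> str:
        """Return a DynamoDB partition key with tenantId as the first token."""
        return f"{self.tenant_id}#{entity}"

    def log_fields(self) -> dict:
        """Fields to merge into every structured log line."""
        return {"tenantId": self.tenant_id}
```

A handler would build one `TenantScope` from the incoming tenant context and pass it down, so no call site ever formats a prefix or key by hand.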

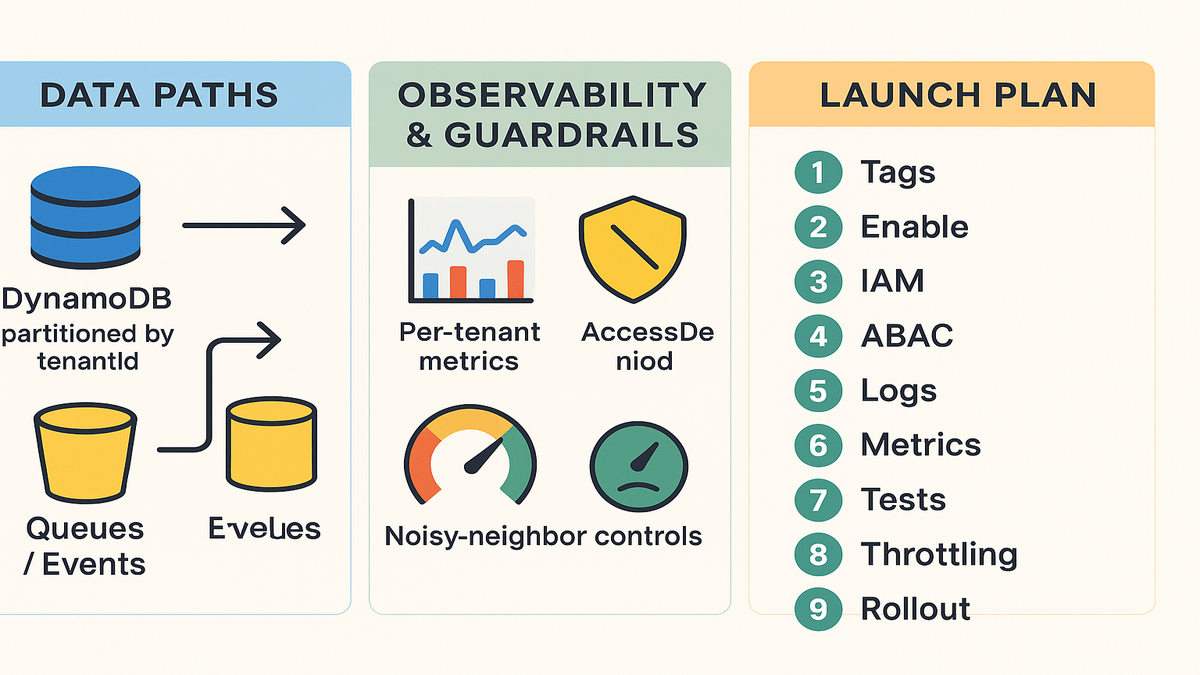

Architecting Data Paths

Database isolation layer

For DynamoDB, a common path is one table with tenantId as the partition key. Add IAM conditions on dynamodb:LeadingKeys so callers only touch their partition. For Aurora, use one schema per tenant or row-level security. Add tenantId columns and route by tags from the app layer.

DynamoDB tips:

- Put tenantId first in the key to enable IAM leading-key checks.

- Add a GSI by (tenantId, type) for fast per-tenant queries.

- Use TTL for auto-expiring per-tenant temp data.
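The leading-key check can be sketched as a policy statement built in Python. This assumes the partition key value is exactly the tenantId, as in the single-table design above; the helper name and the example table ARN are hypothetical. A composite key such as `tenantId#entity` would likely need a prefix pattern with a `StringLike` operator instead.

```python
def leading_keys_statement(table_arn):
    """Allow item access only where the partition key equals the caller's TenantId."""
    return {
        "Sid": "TenantPartitionOnly",
        "Effect": "Allow",
        # Scan is left out on purpose: it reads across partitions.
        "Action": [
            "dynamodb:GetItem",
            "dynamodb:Query",
            "dynamodb:PutItem",
            "dynamodb:UpdateItem",
            "dynamodb:DeleteItem",
        ],
        "Resource": [table_arn],
        "Condition": {
            "ForAllValues:StringEquals": {
                "dynamodb:LeadingKeys": ["${aws:PrincipalTag/TenantId}"]
            }
        },
    }


# Hypothetical table ARN, for illustration only.
statement = leading_keys_statement(
    "arn:aws:dynamodb:us-east-1:111122223333:table/AppData"
)
```

To cover queries against a GSI, the index ARN would also need to appear in `Resource`.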

Aurora tips:

- Postgres row-level security can enforce tenantId rules in the database.

- Separate schemas per tenant help with noisy neighbors. Mind connections though. Use pooling and short transactions.

S3 prefixes and object tags

Create a standard prefix: s3://bucket/data/tenantId=XYZ/. Tag all objects with TenantId=XYZ. Enforce IAM on s3:ExistingObjectTag/TenantId. Lifecycle rules can be tenant-scoped too. Great for plan-based data retention.

If you need client-side access, issue short-lived STS creds with a token vending pattern. Tenants upload to their own prefixes without your servers proxying bytes.
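A token vending step could look roughly like the sketch below, which only builds the arguments for an STS AssumeRole call. The function name, the `data/tenantId=.../` prefix layout, and the alphanumeric check on tenantId are assumptions. The role's trust policy would also need to allow `sts:TagSession` for the session tag to be accepted.

```python
import json


def build_vending_request(role_arn, bucket, tenant_id, duration_seconds=900):
    """Build AssumeRole arguments for short-lived, tenant-scoped upload credentials."""
    # Assumed ID format: keep wildcards and slashes out of the ARN we build.
    if not tenant_id.isalnum():
        raise ValueError(f"invalid tenantId: {tenant_id!r}")
    prefix = f"data/tenantId={tenant_id}/"
    # The session policy narrows the role's permissions to one tenant prefix.
    session_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:PutObjectTagging"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            }
        ],
    }
    return {
        "RoleArn": role_arn,
        "RoleSessionName": f"tenant-{tenant_id}",
        "DurationSeconds": duration_seconds,  # 900 is the minimum STS allows
        "Tags": [{"Key": "TenantId", "Value": tenant_id}],
        "Policy": json.dumps(session_policy),
    }
```

With boto3, the result could be passed as `boto3.client("sts").assume_role(**request)`, and the returned credentials handed to the client for direct upload.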

Gotchas:

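- Enforce object tags at write time with a bucket policy. Require the x-amz-tagging header to carry TenantId, for example by denying s3:PutObject when s3:RequestObjectTag/TenantId does not match the caller’s tag.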

- Use separate KMS keys or grants for extra isolation on premium tenants. You can tag keys too and condition on them.

Asynchronous flows

Make queues and topics multi-tenant by tagging resources and using message attributes. For EventBridge, route on tenantId in the event pattern and use input transformers to pass it through to targets. Enforce perms with resource tags. Each consumer Lambda runs under the tenant’s role, keeping reads and writes scoped.

First-hand example: A file import flow drops raw objects into s3://ingest/tenantId=ABC/. EventBridge routes a message with tenantId=ABC to one normalizing Lambda. That Lambda, running with TenantId=ABC, can read ABC’s sources and write to ABC’s targets. Same code path, no leaks.

Add dead-letter queues per tenant if you want tight retries and alerts. Tag the DLQs so only the right tools can read them. For high-volume tenants, shard queues by tenantId for steady throughput.
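For SQS, carrying tenantId as a message attribute might look like the sketch below. The two helper names are hypothetical. The producer side builds arguments in the shape that SQS `send_message` accepts, and the consumer side reads the attribute from a Lambda SQS event record, where the field names are camelCase.

```python
import json


def build_sqs_message(queue_url, tenant_id, payload):
    """Build send_message arguments with tenantId as a message attribute."""
    return {
        "QueueUrl": queue_url,
        "MessageBody": json.dumps(payload),
        "MessageAttributes": {
            "tenantId": {"DataType": "String", "StringValue": tenant_id}
        },
    }


def tenant_id_from_record(record):
    """Read tenantId from one record of a Lambda SQS event; fail loudly if absent."""
    attributes = record.get("messageAttributes", {})
    try:
        return attributes["tenantId"]["stringValue"]
    except KeyError:
        # A message without tenant context should never be processed.
        raise ValueError("message has no tenantId attribute") from None
```

Failing on a missing attribute keeps the consumer from falling back to some default tenant, which is the quiet way cross-tenant leaks start.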

Observability and Testing

Per-tenant telemetry

Instrument logs and metrics with tenantId. In CloudWatch Logs, include a field tenantId. In metrics, use tenantId as a dimension for latency, errors, and cost per tenant. For tracing, set tenantId in X-Ray annotations so you can filter by tenant. This proves isolation and speeds up debug.

Use the Embedded Metric Format for logs and add a tenantId dimension. Build per-tenant dashboards: p50 and p95 latency, error rates, cold starts, and cost per action. If an audit asks for Tenant 9’s access last week, filter by tag instantly.
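A log line in Embedded Metric Format with a tenantId dimension can be sketched like this. The namespace `MyApp/Tenants`, the metric name `LatencyMs`, and the function name are assumptions. Keep in mind that each distinct dimension value creates its own metric, so a very large tenant count can raise metric costs.

```python
import json
import time


def emf_record(tenant_id, latency_ms, namespace="MyApp/Tenants", timestamp_ms=None):
    """Build one Embedded Metric Format record with tenantId as a dimension."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)
    return {
        "_aws": {
            "Timestamp": timestamp_ms,
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    # Each inner list is one dimension set; names refer to
                    # top-level fields of this same record.
                    "Dimensions": [["tenantId"]],
                    "Metrics": [{"Name": "LatencyMs", "Unit": "Milliseconds"}],
                }
            ],
        },
        "tenantId": tenant_id,
        "LatencyMs": latency_ms,
    }


# Inside Lambda, printing the JSON to stdout is enough for CloudWatch to pick it up.
print(json.dumps(emf_record("1234", 42.5)))
```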

Guardrails and tests

Write a negative test. Try to access Tenant B resources while under Tenant A’s role. Expect AccessDenied, every time. Also test missing tags. Unlabeled resources should fail and block in pipelines. Add a canary that simulates cross-tenant access to catch drift.

Automate policy checks with Access Analyzer and unit tests on policy docs. Run the IAM policy simulator in CI. Add AWS Config rules so untagged resources ping an alert and a ticket within minutes.
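The negative test can be sketched as a small helper that passes only when an operation fails with AccessDenied. Everything here is illustrative: `expect_access_denied`, `FakeAccessDenied`, and `make_fake_reader` are hypothetical names, and the fake reader stands in for a real call made with Tenant A's credentials. The error shape mirrors the `response["Error"]["Code"]` layout that botocore's `ClientError` exposes.

```python
class FakeAccessDenied(Exception):
    """Stand-in for an SDK error; mimics the response shape of botocore's ClientError."""

    def __init__(self):
        super().__init__("AccessDenied")
        self.response = {"Error": {"Code": "AccessDenied"}}


def expect_access_denied(operation):
    """Run `operation`; return True only if it fails with an access-denied error."""
    try:
        operation()
    except Exception as exc:
        code = getattr(exc, "response", {}).get("Error", {}).get("Code")
        if code in ("AccessDenied", "AccessDeniedException"):
            return True
        raise  # any other failure is a broken test, not proof of isolation
    return False  # the call succeeded, which means isolation leaked


def make_fake_reader(session_tenant):
    """Simulate a client whose credentials are scoped to one tenant."""

    def read(resource_tenant):
        if resource_tenant != session_tenant:
            raise FakeAccessDenied()
        return b"data"

    return read
```

In a real suite, the lambda passed to `expect_access_denied` would call S3 or DynamoDB under Tenant A's role against a Tenant B resource.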

Performance isolation

Track cold starts and p95 latency per tenant. If a noisy neighbor spikes, use concurrency and throttles to cap impact. You can route premium tenants to higher memory or separate compute parts, while keeping shared logic.

Tune cold starts with provisioned concurrency or smart language picks. For Java, SnapStart can help a lot. For bursty tenants, front the function with a queue to smooth spikes. Always watch per-tenant metrics so you catch regressions early.

First-hand example: During load test, one tenant floods the import endpoint. Because concurrency and queues are tagged and IAM-scoped, others keep running fine. You see the spike on per-tenant dashboards and throttle that tenant at the edge.
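To make per-tenant throttling concrete, here is a sketch of an in-process token bucket keyed by tenantId. This is a swapped-in illustration, not how Lambda or API Gateway implement throttling; the class name and parameters are hypothetical, and state lives in memory, so it only caps traffic within one process.

```python
class TenantThrottle:
    """Token bucket per tenant: `rate_per_second` refill, up to `burst` tokens."""

    def __init__(self, rate_per_second, burst):
        self.rate = float(rate_per_second)
        self.burst = float(burst)
        self._state = {}  # tenant_id -> (tokens, last_seen_time)

    def allow(self, tenant_id, now):
        """Return True if the tenant may proceed at time `now` (in seconds)."""
        tokens, last = self._state.get(tenant_id, (self.burst, now))
        # Refill for the elapsed time, capped at the burst size.
        tokens = min(self.burst, tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._state[tenant_id] = (tokens - 1.0, now)
            return True
        self._state[tenant_id] = (tokens, now)
        return False
```

Passing `now` in explicitly keeps the sketch deterministic in tests; production code would typically pass `time.monotonic()`. One tenant draining its bucket has no effect on another tenant's bucket.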

Halfway Check

- You’ve enabled Tenant Isolation Mode and standardized a TenantId tag.

- IAM policies use ABAC so principals only touch resources with matching tags.

- Data paths are tenant-aware: DB keys, S3 prefixes, and message attrs include tenantId.

- Logs, metrics, and traces carry tenantId for visibility and auditability.

- Negative tests prove cross-tenant attempts fail with AccessDenied.

If any of these feel shaky, pause and fix before more tenants. Isolation is a habit, not one setting.

FAQ

1. Per-tenant functions

Tenant Isolation Mode gives per-tenant isolation inside one function. You avoid hundreds of deploy units, reduce fleet cold starts, and keep shared code. IAM, tags, and runtime context enforce the walls.

2. Mixed isolation plans

Yes, you can mix isolation levels across plans. Keep one shared path for most tenants. For premium or high-reg tenants, add more guardrails. Use dedicated DBs, separate queues, or reserved concurrency. The same isolation rules still apply. Just point them to their tagged resources.

3. Prevent cross-tenant reads

Don’t trust code for isolation. Push isolation into IAM and tags. Make tenantId required input and build ARNs from that ID. If code points wrong, IAM denies the call. Add negative tests and sims to catch slips.

4. Client-side S3 transfers

Use short-lived creds via STS and scope them to tenant prefixes and tags. Clients upload direct to s3://…/tenantId=XYZ/ without your backend proxying bytes. IAM and tags enforce the walls.

5. Compliance support

Yes, this supports compliance work. You can show role split, least privilege, tag-based access, per-tenant logs and metrics, and deny-by-default. Pair with process controls to make auditors happy.

6. Calculate per-tenant cost

Tag metrics with tenantId and roll up Lambda duration, S3 use, DynamoDB RCU and WCU, and data transfer. Since resources are tagged, use cost allocation tags to break spend by tenant in Cost Explorer.

7. Missing tag handling

Your pipeline should block it. Use pre-deploy checks that fail on missing TenantId. At runtime, policies should deny untagged access by default. Add a periodic scan that finds untagged stuff and auto-quarantines it.

8. Cross-account setup

Yes, cross-account works if you want stronger blast radius walls. Put tenant data in per-tenant accounts, or group several tenants per account. Assume roles with session tags to carry TenantId. You still keep one codebase with account guardrails.

9. Fast tenant onboarding

Automate it. A small “tenant factory” creates the IAM role tagged with TenantId. It provisions S3 prefixes, seeds DynamoDB partitions, and registers dashboards. The factory logs every step with tenantId for clean audits.

Your Launch Plan

- Define a TenantId tag standard and bake it into CI/CD checks.

- Enable Tenant Isolation Mode on your Lambda function(s).

- Create per-tenant IAM roles; tag each with TenantId so policies can read it as aws:PrincipalTag/TenantId.

- Write ABAC policies that only allow resources with matching TenantId tags.

- Tag S3 objects, DynamoDB tables, queues, topics, and log groups; key DynamoDB items by tenantId.

- Inject tenantId into runtime context; build resource ARNs on the fly.

- Instrument logs, metrics, and traces with tenantId.

- Add negative tests for cross-tenant access and untagged resources.

- Set concurrency and throttling guardrails to prevent noisy neighbors.

- Roll out slowly; watch per-tenant error and latency dashboards.

Here’s a rollout rhythm that works: week 1, set tag standards and write ABAC policies. Week 2, enable the mode on a low-risk function and wire runtime context. Week 3, add telemetry and build per-tenant dashboards. Week 4, run blast drills and expand to core flows. Keep lights green for a week, then migrate the rest.

You don’t need a separate architecture per customer. You need a clean isolation line with receipts.
