Build faster on Bedrock with Opus 4.6, structured outputs

If you’re still wrestling with flaky AI outputs or hoping your code-gen agent won’t drift, here’s your wake-up call: Amazon Bedrock just leveled up.

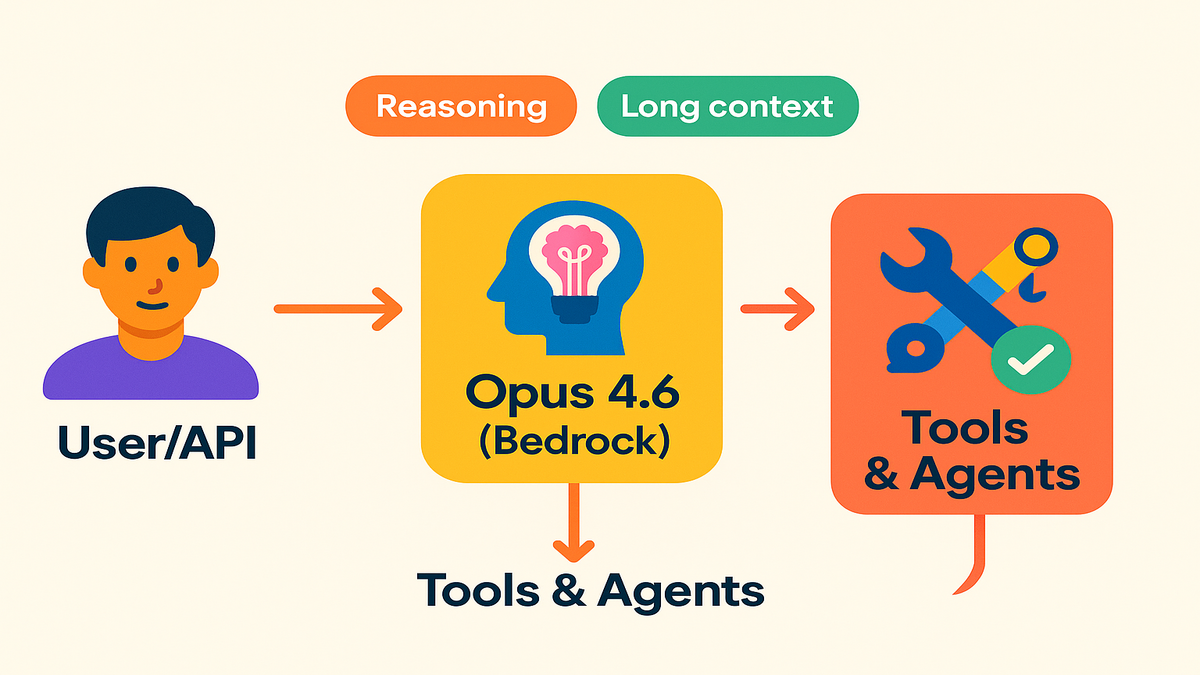

Anthropic’s Claude Opus 4.6 is now on Bedrock with stronger reasoning, sturdier long context, and sharper code generation. Translation: you can hand an AI pair-programmer multi-step, agentic tasks with far less babysitting.

Paired with Structured Outputs (JSON schemas the model actually follows), this update ends your post‑processing purgatory. No more brittle regex. No more half‑baked formats. Just clean, schema‑validated responses flowing straight into your APIs, ETL pipelines, and enterprise agents.

This is the part where your AI roadmap flips from “cool demo” to “production‑grade”.

Hot take: The fastest way to ship AI isn’t new models. It’s cutting the glue code between them.

If you build on AWS, this combo is a big unlock. Bedrock gives you managed models, enterprise guardrails, and tight hooks into the rest of your stack. Opus 4.6 sharpens the brain. Structured Outputs clean up the mouth. Together, they make your agents coherent and your pipelines boring—in a good way.

Who wins? Teams drowning in flaky integrations, long code diffs, and risky copy‑paste steps. If your backlog is stuffed with “add safety,” “trim latency,” and “please stop breaking the JSON,” this update lets you ship faster with fewer oops moments. Keep reading for the playbook.

TL;DR

- Claude Opus 4.6 on Amazon Bedrock upgrades reasoning, long-context, and coding—perfect for agentic tasks.

- Structured Outputs enforce JSON schemas, slashing post-processing and integration bugs.

- Use Bedrock agents, knowledge bases, and the Converse API for composable, multi-step workflows.

- Focus on latency budgets, safety guardrails, and clear observability from day one.

- Track AWS Bedrock news via the official release notes, including which third-party models (DeepSeek among them) are currently offered in your region.

Meet Opus 4.6

Why it matters for builders

You’re shipping software, not science experiments. Opus 4.6 on Amazon Bedrock gives you a sturdier backbone for complex coding and multi-step reasoning. The big win: accuracy. Vendor-reported benchmarks point to gains over prior models in long-context handling and coding reliability, which means your AI pair-programmer is more likely to stay on task across bigger codebases and longer traces.

In practice, that looks like fewer hallucinated imports, more consistent function signatures, and cleaner diffs on refactors. If you’re building a dev assistant, security bot, or data migration helper, Opus 4.6 widens the set of tasks you can offload without round‑tripping prompts 10 times.

You’ll notice practical upgrades day one:

- It keeps track of entities and variables across longer conversations, so your thread doesn’t collapse after step 4.

- It follows API contracts more faithfully, so tool calls are less hand‑wavy and more executable.

- It’s better at incremental edits (add a flag, don’t rewrite the world), which means fewer scary diffs and easier code reviews.

- It writes tests and assertions that actually map to your functions, not imaginary methods.

This matters when you go from “ask a question” to “plan, act, and verify.” The more steps you chain, the more reasoning drift hurts. Opus 4.6 tightens that loop.

Agentic workflows unlocked

The model’s improved agentic performance matters when you chain tools. Think: a release engineer agent that reads a change request, proposes a plan, writes infra code, runs checks, and opens a PR—while explaining each step. With Opus 4.6 in Bedrock, you can:

- Orchestrate tool use via agents and the Converse API.

- Feed longer traces and design docs without truncating critical context.

- Keep reasoning coherent across steps (proposal → code → tests → rollout).

First‑hand example: a CI assistant that reads a 1k‑line Terraform module, flags drift, proposes a patch, validates with a dry run, and writes a PR with justification. Before, you’d fight context limits and brittle reasoning. With Opus 4.6, the flow holds together—and your human reviewers get clearer diffs, faster.

To make this sing in production:

- Use a plan‑act‑verify loop. Ask for a plan (structured), run tools, then request a short verification before committing actions.

- Pass state as typed objects between steps. Don’t rely on free‑form summaries; your future self will curse you.

- Include idempotency keys and safe retries for tool steps (e.g., running tests, creating PRs) so the agent can recover without double‑posting.

- Use short, stable headers (APIs, constraints, SLAs) as pinned context; retrieve the rest on demand.

- Keep a lightweight memory store for the agent (e.g., DynamoDB items keyed by task_id) so steps can resume after timeouts or cold starts.
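
The checklist above can be sketched in a few lines. This is a minimal illustration, not a Bedrock API: `StepState`, `idempotency_key`, `run_step`, and the fake tool are hypothetical names, and the in-memory `completed` dict stands in for whatever store you use (for example a DynamoDB item keyed by task_id).

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


@dataclass
class StepState:
    """Typed state handed from one agent step to the next."""
    task_id: str
    plan: list[str]
    completed: dict[str, str] = field(default_factory=dict)  # idempotency key -> result


def idempotency_key(task_id: str, step: str) -> str:
    """Derive a stable key so a retried step maps to the same record."""
    return hashlib.sha256(f"{task_id}:{step}".encode()).hexdigest()[:16]


def run_step(state: StepState, step: str, tool) -> str:
    """Run a tool at most once per (task, step); reuse the stored result on retry."""
    key = idempotency_key(state.task_id, step)
    if key not in state.completed:
        state.completed[key] = tool(step)
    return state.completed[key]


def plan_act_verify(state: StepState, tool, verify) -> bool:
    """Act on each planned step, then verify the results before committing."""
    results = [run_step(state, step, tool) for step in state.plan]
    return verify(results)


calls = []  # records how often the (hypothetical) tool really ran


def fake_tool(step: str) -> str:
    calls.append(step)
    return f"{step}: ok"


def all_ok(results: list[str]) -> bool:
    return all(r.endswith("ok") for r in results)


state = StepState(task_id="task-123", plan=["run_tests", "open_pr"])
first = plan_act_verify(state, fake_tool, all_ok)
second = plan_act_verify(state, fake_tool, all_ok)  # simulated retry after a timeout
snapshot = json.dumps(asdict(state))  # what you might persist between steps
```

The second pass reuses stored results instead of re-running the tools, which is the whole point of the idempotency key: a resumed agent does not open the same PR twice.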

Structured Outputs

What it is

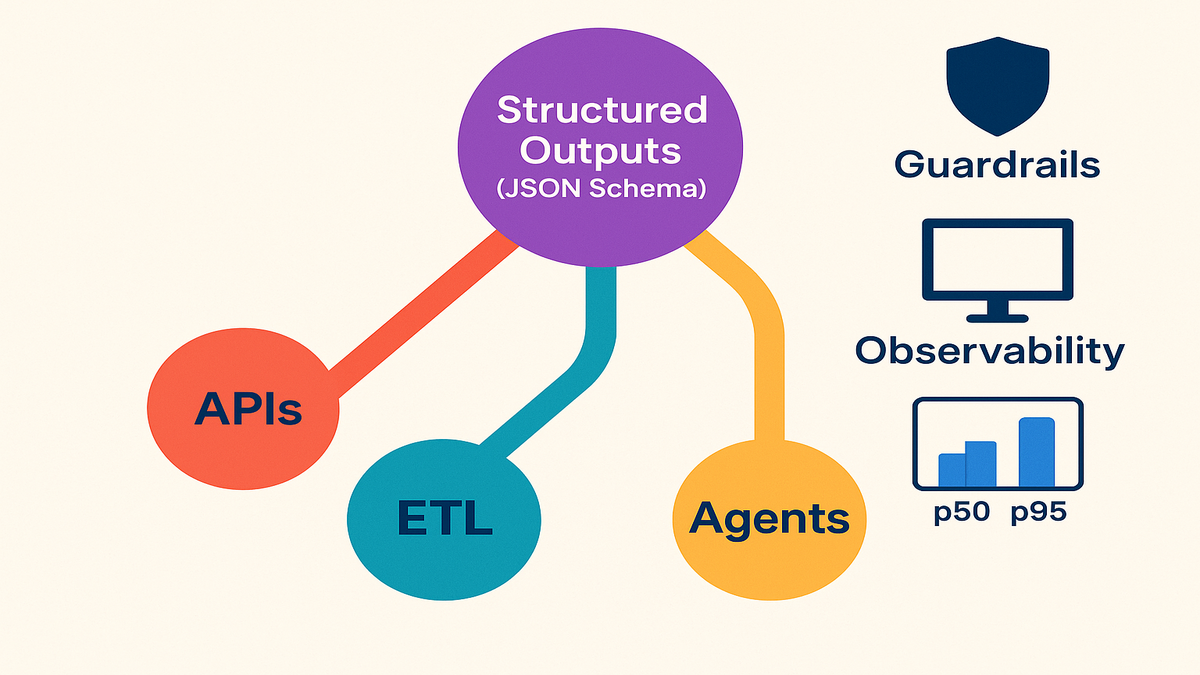

Structured Outputs in Amazon Bedrock let you define a JSON schema, and the model adheres to it. No more “close enough” objects. You specify the fields, types, enums, and nesting; the model returns compliant JSON. That means your downstream code can parse deterministically, run validations, and ship without duct‑taping regex onto prompts.

This is huge for APIs, RAG pipelines, and agents. Your agent can reason freely, but its final response shows up as clean, typed data. That means fewer 2 a.m. pages because the “status” field sometimes says “ok-ish.”

A few schema tips that pay off immediately:

- Mark critical fields as required. Keep optional fields actually optional—don’t sneak in must‑haves as nullable strings.

- Use enums for decision points (approve|reject|needs_review) so dashboards and policies are easy to build.

- Add format hints (date-time, uri, email) and constraints (minItems, maxLength) to tighten outputs.

- Include a version and a trace_id on every response to track drift and enable safe migrations.
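
Here is what those tips might look like in one schema. The field names (`decision`, `findings`, `reviewed_at`, `ticket_url`) are illustrative assumptions, and which keywords a given model's structured-output mode honors (formats, `minItems`, `maxLength`) can vary, so check the provider docs before leaning on a constraint.

```python
import json

# A hypothetical decision schema: required fields, an enum for the decision
# point, format hints, and size constraints, plus version and trace_id.
DECISION_SCHEMA = {
    "type": "object",
    "additionalProperties": False,
    "required": ["version", "trace_id", "decision", "findings"],
    "properties": {
        "version": {"type": "string"},
        "trace_id": {"type": "string"},
        "decision": {
            "type": "string",
            "enum": ["approve", "reject", "needs_review"],
        },
        "findings": {
            "type": "array",
            "minItems": 1,
            "items": {"type": "string", "maxLength": 280},
        },
        # Optional fields stay out of "required" instead of being nullable.
        "reviewed_at": {"type": "string", "format": "date-time"},
        "ticket_url": {"type": "string", "format": "uri"},
    },
}

print(json.dumps(DECISION_SCHEMA, indent=2))
```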

Where it pays off

Three high‑leverage spots:

- APIs: enforce request/response contracts and backward compatibility. Easy versioning via schema updates.

- ETL: parse unstructured docs into structured tables (think invoices → line items) with consistent typing.

- Agents: standardize tool inputs/outputs so steps chain without glue‑gun fixes.

A line from Anthropic’s docs sums it up: with structured outputs, Claude returns valid JSON matching your schema. In Bedrock, that eliminates a whole class of production bugs.

Example: a compliance agent extracts PII fields from PDFs. You define a schema with name, date_of_birth (YYYY-MM-DD), id_type (enum), and redaction_coordinates (array of bounding boxes). The agent’s extraction either validates or fails fast. You log the failure, retry gracefully, and keep your pipelines sane.

For governance, pair Structured Outputs with Bedrock guardrails. You can enforce safety filters and domain policies while still getting machine‑parseable results.

Want a quick rollout plan for structured outputs?

- Start with the output you want to plug into your system, then write the schema to match it—not the other way around.

- Build a tiny validator (jsonschema works great) in your service. Reject or retry anything that doesn’t validate.

- Add a “reason” field when you need explainability. This keeps reasoning separate from the typed decision.

- Log both the raw model text and the parsed object (with redactions). You’ll thank yourself during audits.
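
To make the "tiny validator" concrete, here is a standard-library-only sketch. It checks just required fields, a few types, and enums; in a real service you would likely swap it for the jsonschema package mentioned above. The schema and the `parse_and_validate` helper are hypothetical.

```python
import json


def _type_ok(value, type_name):
    """Check a value against a small subset of JSON Schema type names."""
    if type_name == "string":
        return isinstance(value, str)
    if type_name == "number":
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    if type_name == "array":
        return isinstance(value, list)
    return True  # other types are not checked in this sketch


def parse_and_validate(raw_text, schema):
    """Return (parsed_object_or_None, list_of_errors) for a raw model reply."""
    try:
        obj = json.loads(raw_text)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(obj, dict):
        return None, ["top-level value must be an object"]

    errors = []
    for name in schema.get("required", []):
        if name not in obj:
            errors.append(f"missing required field: {name}")
    for name, rule in schema.get("properties", {}).items():
        if name not in obj:
            continue
        if not _type_ok(obj[name], rule.get("type")):
            errors.append(f"{name}: expected {rule['type']}")
        elif "enum" in rule and obj[name] not in rule["enum"]:
            errors.append(f"{name}: {obj[name]!r} is not an allowed value")
    return (obj if not errors else None), errors


SCHEMA = {
    "required": ["decision", "reason"],
    "properties": {
        "decision": {"type": "string", "enum": ["approve", "reject", "needs_review"]},
        "reason": {"type": "string"},  # explanation kept apart from the typed decision
    },
}

good, good_errors = parse_and_validate('{"decision": "approve", "reason": "tests pass"}', SCHEMA)
bad, bad_errors = parse_and_validate('{"decision": "ok-ish"}', SCHEMA)
```

Returning the error list (rather than raising) makes it easy to log the exact reason next to the raw text before you retry.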

Quick pulse

- Opus 4.6 boosts reasoning and code reliability for agentic tasks on Bedrock.

- Structured Outputs kill brittle parsing by enforcing JSON schemas.

- Composability is the move: agents, tools, and knowledge bases click together.

- Production isn’t just prompts: latency, guardrails, and observability matter.

- Watch the Amazon Bedrock release notes for regional rollouts and pricing tweaks.

The theme: less babysitting, more building. Strong planning plus strict schemas equals fewer edge‑case fires.

Composable agents

Reference architecture

Here’s a resilient pattern you can ship on Bedrock:

- Front door: An API (API Gateway or AppSync) feeding user intents into a coordinator service.

- Reasoning core: Opus 4.6 via the Bedrock Converse API for planning and structured decisions.

- Tooling: Bedrock Agents to orchestrate multi‑step tasks; integrate with Lambda for custom tools.

- Knowledge: Bedrock Knowledge Bases for retrieval over docs, code, and SOPs.

- Workflow: Step Functions to coordinate long‑running tasks and retries.

- Events: Amazon EventBridge for async triggers and cross‑service fan‑out.

- Storage: DynamoDB/S3 for state and artifacts; CodeCommit/GitHub for PRs.

- Observability: CloudWatch logs/metrics + X‑Ray traces; CloudTrail for audit.

Each hop passes typed payloads (Structured Outputs) so you avoid format drift. When you add a new tool—say, a static analyzer—you just define its input/output schema and plug it in.
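
As a rough sketch of "define its input/output schema and plug it in": the registry below checks only field names on the way in and out, and every name in it (`ToolSpec`, `register`, `call_tool`, the toy analyzer) is hypothetical. A fuller version would validate against complete JSON schemas.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ToolSpec:
    """A tool plus the fields it expects and the fields it promises to return."""
    name: str
    input_fields: frozenset
    output_fields: frozenset
    run: Callable[[dict], dict]


REGISTRY: dict[str, ToolSpec] = {}


def register(spec: ToolSpec) -> None:
    REGISTRY[spec.name] = spec


def call_tool(name: str, payload: dict) -> dict:
    """Check the payload before the call and the result after it."""
    spec = REGISTRY[name]
    missing = spec.input_fields - set(payload)
    if missing:
        raise ValueError(f"{name}: missing input fields {sorted(missing)}")
    result = spec.run(payload)
    missing = spec.output_fields - set(result)
    if missing:
        raise ValueError(f"{name}: missing output fields {sorted(missing)}")
    return result


def toy_static_analyzer(payload: dict) -> dict:
    """Stand-in analyzer: flags any use of eval() in the source text."""
    findings = ["uses eval()"] if "eval(" in payload["source"] else []
    return {"passed": not findings, "findings": findings}


register(ToolSpec(
    name="static_analyzer",
    input_fields=frozenset({"path", "source"}),
    output_fields=frozenset({"passed", "findings"}),
    run=toy_static_analyzer,
))

report = call_tool("static_analyzer", {"path": "app.py", "source": "x = eval(user_input)"})
```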

How this holds up under load:

- API Gateway or AppSync throttles and authenticates requests before they ever hit your reasoning loop.

- Step Functions adds retries with jitter and compensating steps for rollbacks.

- EventBridge decouples slow tools from fast decisions, so one flaky integration doesn’t block the queue.

- CloudWatch and X‑Ray make it obvious which step is the bottleneck, not just “the AI is slow.”
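
"Retries with jitter" is easier to reason about with the logic spelled out. Step Functions can apply a retry policy for you; the sketch below only illustrates what exponential backoff with full jitter does, using hypothetical helper names and default values chosen for the example.

```python
import random
import time


def full_jitter_delay(attempt: int, base: float = 0.5, cap: float = 8.0) -> float:
    """Pick a random delay in [0, min(cap, base * 2**attempt)] seconds."""
    return random.uniform(0, min(cap, base * 2 ** attempt))


def call_with_retries(fn, max_attempts: int = 4, sleep=time.sleep):
    """Call fn until it succeeds or attempts run out; re-raise the last error."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except Exception:
            if attempt == max_attempts - 1:
                raise
            sleep(full_jitter_delay(attempt))


# Usage: a hypothetical flaky tool that fails twice, then succeeds.
outcomes = iter([RuntimeError("timeout"), RuntimeError("throttled"), "checks passed"])
slept = []  # collects delays instead of actually sleeping


def flaky_tool():
    outcome = next(outcomes)
    if isinstance(outcome, Exception):
        raise outcome
    return outcome


result = call_with_retries(flaky_tool, sleep=slept.append)
```

Randomizing the whole delay (not just adding a little noise) spreads retries from many workers so they do not all hit a recovering tool at the same instant.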

Real world example

Picture a release ops agent for a fintech team:

- Intake: Reads a Jira ticket and links to a design doc.

- Plan: Generates a rollout plan with risk scoring.

- Code: Proposes infrastructure changes (IaC), with diffs.

- Validate: Runs static checks and dry‑runs.

- Governance: Routes to an approval step if risk > threshold.

- Execute: Applies changes and posts logs.

- Report: Summarizes outcomes; opens incident tasks if something trips.

This isn’t sci‑fi. Bedrock gives you the model, orchestration, and retrieval. Opus 4.6 keeps reasoning coherent across steps; Structured Outputs guarantee every step speaks the same typed language. You get fewer brittle adapters and more leverage from day one.

To make it production‑grade:

- Define clear risk thresholds (enum + numeric rationale) and send high‑risk plans to human review.

- Store every plan, diff, and validation result with a trace_id. Later, you can replay or explain any decision.

- Run validators (lint, policy as code, unit tests) as separate tools with crisp schemas. The agent consumes pass/fail + findings.

- Keep write actions behind a feature flag or change window. A safe agent is a scheduled agent.
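
A small sketch of the gating logic, assuming a 0-to-1 risk score and a 0.7 threshold (both made up for illustration). `RiskLevel`, `Plan`, and `route` are hypothetical names, not part of any AWS SDK.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(str, Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class Plan:
    trace_id: str
    risk_level: RiskLevel  # enum the dashboards can group on
    risk_score: float      # numeric rationale, assumed to be in [0, 1]
    rationale: str


def route(plan: Plan, threshold: float = 0.7, writes_enabled: bool = False) -> str:
    """Decide where a plan goes next: human review, dry run, or execution."""
    if plan.risk_level is RiskLevel.HIGH or plan.risk_score > threshold:
        return "human_review"
    if not writes_enabled:  # feature flag or change window is closed
        return "dry_run"
    return "execute"


risky = Plan("trace-1", RiskLevel.HIGH, 0.9, "touches payment routing")
routine = Plan("trace-2", RiskLevel.LOW, 0.1, "adds a log field")

decisions = [
    route(risky, writes_enabled=True),
    route(routine),
    route(routine, writes_enabled=True),
]
```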

Cost, latency, safety

Latency and throughput tips

Agentic workflows can sprawl. Keep a latency budget:

- Token discipline: Summarize long threads; pin stable context (requirements, APIs) as short hints. Use retrieval for the rest.

- Step splitting: Break big tasks into smaller calls; parallelize tool steps where safe.

- Response size: Constrain outputs with schemas to avoid verbose rambles.

- Caching: Memoize deterministic sub‑results (schema validations, compiled prompts, embeddings).

- Observability: Trace each step’s timing in CloudWatch; alert on p95 spikes.
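
For the caching tip, memoizing compiled prompt templates is about the simplest case. The template text and names below are hypothetical; the only library behavior relied on is `functools.lru_cache` and `string.Template` from the standard library.

```python
from functools import lru_cache
from string import Template

# Hypothetical prompt templates, keyed by name.
TEMPLATES = {
    "pr_summary": "Summarize the diff for $repo. Flag risk as low, medium, or high.",
}
BUILD_COUNT = {"n": 0}  # counts how often a template is actually compiled


@lru_cache(maxsize=64)
def compiled_prompt(name: str) -> Template:
    """Compile a template once; later calls with the same name hit the cache."""
    BUILD_COUNT["n"] += 1
    return Template(TEMPLATES[name])


def render(name: str, **values: str) -> str:
    return compiled_prompt(name).substitute(**values)


first = render("pr_summary", repo="payments-api")
second = render("pr_summary", repo="ledger")
```

Only cache things that are deterministic for a given key. Model responses usually are not, so leave those out unless you have a clear reason.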

Also, watch concurrency and quotas. If you plan bursts (e.g., nightly code scans), spread them with EventBridge schedules or batch windows. Push heavy compute (large diffs, test runs) to tools, not the model loop.

To trim costs without hurting quality:

- Right‑size the context. Don’t feed entire repos; retrieve the 3 files that matter.

- Reuse compiled prompts/templates. Avoid regenerating boilerplate.

- Prefer short “check” calls (validate‑only) over full rewrites.

Governance and safety

Production AI isn’t just code; it’s compliance. Use Guardrails for Amazon Bedrock to enforce safety filters and content controls. Add domain policies (e.g., prevent secrets in outputs), and validate every final response against your JSON schema before action.

Audit everything. Capture prompt/response metadata (hashed or redacted as needed) through Bedrock model invocation logging to CloudWatch Logs or S3, and use CloudTrail to audit the API calls themselves. For PII, route through a de-identification tool and confirm with structured validations. Tighten access with IAM boundaries for tools that can write to prod.
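
One way to picture "hashed or redacted as needed": hash the prompt so you can correlate requests without storing the text, and scrub obvious secrets from the response before it reaches a log. The record layout and helper names are assumptions, and the single pattern below only matches the AWS access key ID shape; a real deployment needs a broader de-identification step.

```python
import hashlib
import re

# Matches the shape of an AWS access key ID (AKIA followed by 16 characters).
SECRET_PATTERN = re.compile(r"AKIA[0-9A-Z]{16}")


def redact(text: str) -> str:
    """Replace anything that looks like an access key ID."""
    return SECRET_PATTERN.sub("[REDACTED]", text)


def audit_record(trace_id: str, prompt: str, response: str) -> dict:
    """Build a log-safe record: hashed prompt, redacted response."""
    return {
        "trace_id": trace_id,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "response_redacted": redact(response),
        "response_chars": len(response),
    }


record = audit_record(
    trace_id="trace-42",
    prompt="Rotate the deploy credentials",
    response="Old key AKIAIOSFODNN7EXAMPLE should be disabled.",
)
```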

Finally, roll out autonomy progressively: migrate low-risk use cases first (read-only analyzers), then gated writers (PR-only), then automated executors with kill-switches. Your on-call team will thank you.

Observability checklist that saves weekends:

- Emit a trace_id into every model response and thread it through tools.

- Log schema validation outcomes (pass/fail + reason) for quick triage.

- Track retry counts and reasons; too many “schema_mismatch” events = tighten prompts or adjust schema.

- Build a dashboard for p50/p95 latency per step. Shave the slowest, not the average.
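
If you want to sanity-check the dashboard math, per-step p50/p95 is a few lines. This sketch uses the nearest-rank percentile method and made-up timings; the step names and the `summarize` helper are hypothetical.

```python
import math
from collections import defaultdict


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(events):
    """Group (step, latency_ms) pairs and compute p50/p95 per step."""
    by_step = defaultdict(list)
    for step, latency_ms in events:
        by_step[step].append(latency_ms)
    return {
        step: {"p50": percentile(values, 50), "p95": percentile(values, 95)}
        for step, values in by_step.items()
    }


events = [
    ("plan", 800), ("plan", 950), ("plan", 4000),
    ("validate", 300), ("validate", 320), ("validate", 310),
]
summary = summarize(events)
slowest = max(summary, key=lambda step: summary[step]["p95"])
```

Here the "plan" step has a reasonable median but an ugly tail, which is exactly the case an average would hide.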

FAQs

What is Amazon Bedrock?

Amazon Bedrock is AWS’s fully managed service to access foundation models (from providers like Anthropic) through a single, secure API. You get orchestration features (agents, knowledge bases), safety guardrails, and enterprise‑grade governance. It’s the quickest on‑ramp to production‑grade AWS AI without hosting models yourself.

Is Opus 4.6 available?

Availability varies by region and can change. Check the official Amazon Bedrock release notes and the Bedrock console for the latest on supported regions and pricing before you commit to a rollout.

How Structured Outputs work

You define a JSON schema for the model’s response. The model returns data that validates against that schema—fields, types, enums included. This keeps your pipelines deterministic and cuts post‑processing. If validation fails, you can retry or route to a human‑in‑the‑loop.

Is DeepSeek in Amazon Bedrock?

AWS has announced DeepSeek models on Bedrock, starting with DeepSeek-R1, alongside providers such as Anthropic, Meta, and Mistral. Availability varies by model and region, so confirm what is currently offered in the Bedrock model catalog and the release notes.

Compare open-source stack

Open‑source models give you control but add ops and integration tax: hosting, scaling, safety controls, and constant patching. Bedrock centralizes those concerns, adds governance and structured outputs, and speeds up time‑to‑value. If you have heavy customization or strict data residency needs, you can hybridize: Bedrock for orchestration + in‑house models for specific steps.

Find Bedrock features

Bookmark the Bedrock release notes and the main product page. AWS’s “What’s New” feed and the console change logs are your fastest sources for weekly updates.

Handle failures and retries

Fail fast and loud. Validate every response against your schema, log the reason on failure, and retry with a tighter instruction or a smaller context. If it still fails, hand off to a human with the raw text and the intended schema attached. Keep retry counts low and make retried actions idempotent.
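
That flow can be sketched as a small loop. `call_model` here is a placeholder for however you invoke the model, the status check is a deliberately tiny stand-in for real schema validation, and the wording of the tightened instruction is just an example.

```python
import json


def validate_status(raw: str):
    """Return (object, None) on success or (None, reason) on failure."""
    try:
        obj = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, f"invalid_json: {exc.msg}"
    if not isinstance(obj, dict) or obj.get("status") not in {"ok", "failed"}:
        return None, "schema_mismatch: status must be 'ok' or 'failed'"
    return obj, None


def get_structured(call_model, prompt: str, max_retries: int = 2) -> dict:
    """Validate, retry with a tighter instruction, then hand off to a human."""
    attempt_prompt = prompt
    failures = []
    raw = ""
    for _ in range(1 + max_retries):
        raw = call_model(attempt_prompt)
        obj, reason = validate_status(raw)
        if obj is not None:
            return {"outcome": "ok", "value": obj, "failures": failures}
        failures.append(reason)  # log the reason for triage
        attempt_prompt = (
            f"{prompt}\n\nYour last reply was rejected ({reason}). "
            "Return only JSON that matches the schema."
        )
    return {"outcome": "human_handoff", "raw_text": raw, "failures": failures}


# Usage with a fake model that rambles once, then complies.
replies = iter(["Sure! The status is ok-ish.", '{"status": "ok"}'])
recovered = get_structured(lambda p: next(replies), "Report deployment status.")

# A fake model that never complies ends in a handoff with the raw text attached.
stuck = get_structured(lambda p: "no JSON here", "Report deployment status.", max_retries=1)
```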

Data privacy with Bedrock

AWS provides security features like encryption in transit and at rest, IAM controls, and auditing. According to AWS docs, Bedrock is designed with enterprise data privacy in mind. Review the Bedrock security documentation and your organization’s policies before moving sensitive workflows to production.

Bedrock playbook

- Pick a narrow, high‑value task (e.g., PR summarization + risk tagging).

- Define a strict JSON schema for the output; include enums for decision fields.

- Wire Opus 4.6 via the Converse API; request Structured Outputs.

- Add a simple tool (repo read, test runner) behind a safe IAM role.

- Log and validate every model output; retry on schema fail.

- Measure p50/p95 latency; trim prompts and parallelize where possible.

- Gate writes behind human approval; expand autonomy as metrics stabilize.

Wrap it with CloudWatch dashboards and a kill‑switch. Ship, learn, iterate.
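
For the "wire it via the Converse API" step, here is a hedged sketch of the request and response shapes. It uses the tool-use route (a single forced tool whose input schema is your output schema) to get schema-shaped data, which is an older pattern and not necessarily the native Structured Outputs parameter, so check the Converse API docs for the current option. The model ID is a placeholder, the helper names and PR schema are hypothetical, and the actual boto3 call is left as a comment so the block runs without AWS credentials.

```python
def build_converse_request(model_id: str, prompt: str, schema: dict,
                           tool_name: str = "emit_result") -> dict:
    """Build Converse keyword arguments that force one schema-shaped tool call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": 1024},
        "toolConfig": {
            "tools": [{
                "toolSpec": {
                    "name": tool_name,
                    "description": "Return the final result as structured data.",
                    "inputSchema": {"json": schema},
                }
            }],
            "toolChoice": {"tool": {"name": tool_name}},
        },
    }


def extract_tool_input(response: dict, tool_name: str = "emit_result") -> dict:
    """Pull the structured payload out of a Converse response."""
    for block in response["output"]["message"]["content"]:
        tool_use = block.get("toolUse")
        if tool_use and tool_use["name"] == tool_name:
            return tool_use["input"]
    raise ValueError(f"no toolUse block named {tool_name!r} in response")


PR_SCHEMA = {
    "type": "object",
    "required": ["summary", "risk"],
    "properties": {
        "summary": {"type": "string"},
        "risk": {"type": "string", "enum": ["low", "medium", "high"]},
    },
}

request = build_converse_request("YOUR_OPUS_4_6_MODEL_ID", "Summarize this PR: ...", PR_SCHEMA)

# With boto3 installed and credentials configured (not run here):
#   import boto3
#   client = boto3.client("bedrock-runtime")
#   response = client.converse(**request)

# A hand-written response in the documented shape, for illustration only.
sample_response = {
    "output": {"message": {"role": "assistant", "content": [
        {"toolUse": {"toolUseId": "tool-1", "name": "emit_result",
                     "input": {"summary": "Adds retry logic", "risk": "low"}}}
    ]}}
}
parsed = extract_tool_input(sample_response)
```

Whatever route you use, still validate `parsed` against your schema before acting on it.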

You don’t need a 40‑page AI strategy to win here. You need one reliable, narrow workflow that compounds. Opus 4.6 gives you the reasoning. Structured Outputs give you the contracts. Bedrock gives you the rails. Put them together and your “AI project” becomes a product with SLAs, not just a demo with vibes.

Next step: pick a boring, costly workflow. Schema it. Agent it. Measure it. Repeat. That’s how you stack wins without stacking incidents.

If glue code were a startup, it’d be a unicorn. Structured outputs are how you short that market.

References

- Amazon Bedrock product overview: https://aws.amazon.com/bedrock/

- Amazon Bedrock release notes: https://docs.aws.amazon.com/bedrock/latest/userguide/release-notes.html

- Converse API for Amazon Bedrock: https://docs.aws.amazon.com/bedrock/latest/userguide/converse-api.html

- Agents for Amazon Bedrock: https://docs.aws.amazon.com/bedrock/latest/userguide/agents.html

- Amazon Bedrock Knowledge Bases: https://docs.aws.amazon.com/bedrock/latest/userguide/knowledge-bases.html

- Guardrails for Amazon Bedrock: https://docs.aws.amazon.com/bedrock/latest/userguide/guardrails.html

- Security in Amazon Bedrock: https://docs.aws.amazon.com/bedrock/latest/userguide/security.html

- JSON Schema official: https://json-schema.org/

- Anthropic Structured Outputs (JSON Schema): https://docs.anthropic.com/claude/docs/structured-outputs

- Amazon API Gateway: https://aws.amazon.com/api-gateway/

- AWS AppSync: https://aws.amazon.com/appsync/

- AWS Step Functions: https://aws.amazon.com/step-functions/

- Amazon EventBridge: https://aws.amazon.com/eventbridge/

- Amazon CloudWatch: https://aws.amazon.com/cloudwatch/

- AWS X-Ray: https://aws.amazon.com/xray/

- AWS CloudTrail: https://aws.amazon.com/cloudtrail/

- AWS Identity and Access Management (IAM): https://aws.amazon.com/iam/

- Amazon Bedrock pricing: https://aws.amazon.com/bedrock/pricing/

- AWS What’s New: https://aws.amazon.com/new/